Right now, at this moment, are you prepared to act on your company’s data? If not, why? At Ascend, we aim to make the abstract actionable. So when we talk about making data usable, we’re having a conversation about data integrity.

Data integrity is the overall readiness to make confident business decisions with trustworthy data, repeatedly and consistently. Data integrity is vital to every company’s survival and growth. Yet it’s still a largely unfulfilled concept in many organizations.

Why is data integrity so important, and what concrete steps can you take to deliver trustworthy data? We have surveyed the landscape of opinions, advice, and technology dedicated to the topic. From this research, we developed a framework with a sequence of stages to implement data integrity quickly and measurably via data pipelines. Let’s explore!


Why does data integrity matter?

At every level of a business, individuals must trust the data, so they can confidently make timely decisions.

Data is becoming increasingly ubiquitous and interwoven across all areas of business:

- With the emergence of data mesh strategies, data is being broken out of silos, facilitating closer linkages between operations and decision-making.

- Data products are reused across teams, often feeding multiple analytics, models, and decisions.

- Data is also increasingly relied upon to pinpoint problems in business, systems, products, and infrastructure.

Integrity of these data-driven diagnostics is vital as they are used to justify costly remediation of business problems. No one wants to be caught chasing ghosts.

For instance, when a company has flaws in its operational business data, and that feeds into financial reports and forecasts, the integrity of the entire data value chain is compromised, resulting in errors in how the company is run.

This means that data integrity is critical, whether you’re a key decision-maker at the highest level using KPIs, dashboards, or reports, or you’re using data to identify opportunities and threats. You need to be able to trust your data and make decisions with confidence, knowing the rest of the company is on the same page.

Furthermore, as decision-making becomes increasingly supported by machine learning and AI, the integrity of data becomes even more important. Your models need to be based on good training data, and the model inferences must be driven by good operational data, so that they correctly represent the reality that the business is forecasting.

What about data quality versus data integrity?

You might be surprised that we keep referencing data integrity and not data quality, so let’s talk about why we don’t use these terms interchangeably.

The recent emergence of data quality as a field of interest has attracted a wide array of specific vendors, methods, opinions, and points of view. The broad objective is to improve data integrity and make data trustworthy and actionable for data-driven decisions. But the variety of opinions, inconsistent methods, and diversity of functionality of different tools has muddied the waters. There is no consensus about what data quality is, how to measure it, and how to even approach implementing it.

After reviewing scores of articles and talking with several vendors, we realized that many opinions about data quality are quite on point and could fit into a concrete and tangible description of data integrity. So what is missing? A broader framework that organizes these ideas into actionable sequences of steps. By actionable, we mean steps that you can implement, measure, diagnose, and remediate.

So can data integrity be organized into something you can execute on and deliver? Let’s get into it!

The Actionable Sequence for Data Integrity

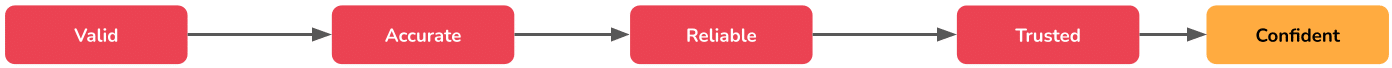

With the opinions of data quality experts in hand, we organized many of their recommendations into four distinct stages, so that you can implement them in your own business. Note that these stages don’t exist independently of each other. Rather, as we describe them, we point out how they build on top of each other:

First validating data, then assuring data accuracy, then making data reliable, and finally making it trustworthy.

As you research these concepts, you will find that the opinions of vendors and consultants diverge widely to benefit the solution they advocate for. In fact, these terms have become so muddied that they often seem synonymous. However, since our goal here is to narrow them into actionable stages, we tack in the other direction and define the four stages along lines of consensus where many practitioners agree.

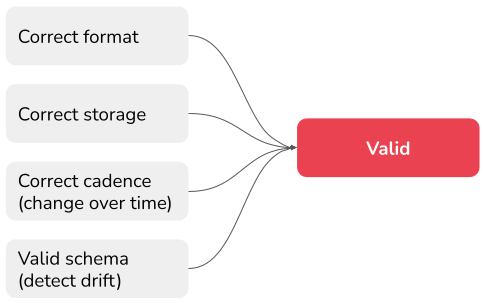

Stage 1: Validate Your Data

In this framework, validation is a series of operations that can be performed as data is drawn from its source systems. They are foundational, and without them, the subsequent stages will fail. These operations should ensure that your data is:

In the correct format. Foundational encoding, whether it is ASCII or another byte-level code, is delimited correctly into fields or columns and packaged correctly into JSON, Parquet, or another file format.

In a valid schema. Validation covers field and column names, data types, and variations in the delimiters that designate fields. It should detect “schema drift,” and may involve operations that validate datasets against source system metadata, for example.

In the correct storage. Coming from and being written to the correct physical file system location, database tables and columns, or other types of data storage systems.

Arriving in the correct cadence. This includes various intervals by which datasets arrive and are processed, such as change data capture, query, blob scan, streaming, etc. These intervals also change cadence over time.

We have found in practice that these foundational and quite technical operations are often not simple, and are best addressed in concert. They are also prerequisites for all the other operations that are often conflated with them in other literature, where the concept of “validated data” is usually expanded far beyond these basics.
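As a rough illustration of two of these checks, the sketch below flags schema drift on a single record and tests whether a feed is arriving on its expected cadence. The `orders` schema, the column names, and the 50% tolerance are assumptions made up for this example, not part of any particular platform.

```python
from datetime import datetime, timedelta

# Hypothetical expected schema for an "orders" feed: column name -> Python type.
EXPECTED_SCHEMA = {"order_id": int, "customer_id": int, "amount": float}


def detect_schema_drift(record, expected=EXPECTED_SCHEMA):
    """Return a sorted list of human-readable drift findings for one record."""
    findings = []
    for name in expected.keys() - record.keys():
        findings.append(f"missing column: {name}")
    for name in record.keys() - expected.keys():
        findings.append(f"unexpected column: {name}")
    for name in expected.keys() & record.keys():
        if not isinstance(record[name], expected[name]):
            findings.append(
                f"type mismatch in {name}: expected {expected[name].__name__}, "
                f"got {type(record[name]).__name__}"
            )
    return sorted(findings)


def is_on_cadence(last_arrival, now, expected_interval, tolerance=0.5):
    """True if the newest batch arrived within the expected interval plus slack."""
    allowed = expected_interval * (1 + tolerance)
    return (now - last_arrival) <= allowed


good = {"order_id": 1, "customer_id": 7, "amount": 19.99}
drifted = {"order_id": "1", "customer_id": 7, "total": 19.99}

print(detect_schema_drift(good))
print(detect_schema_drift(drifted))
print(is_on_cadence(datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 1, 15),
                    timedelta(hours=1)))
```

In a real pipeline the expected schema would more likely come from source system metadata than from a hard-coded dictionary.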

Once these operations are complete, the data is ready for the next stage.

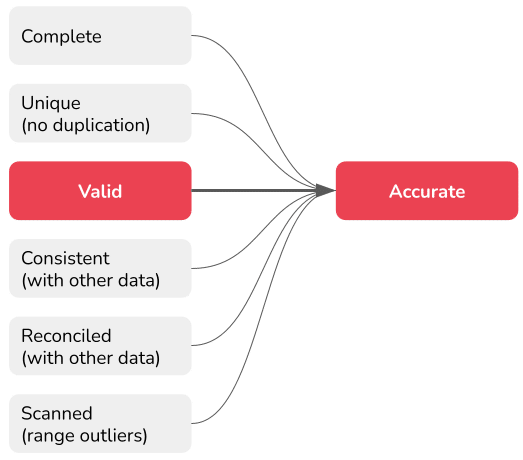

Stage 2: Assure the Accuracy of Your Data

Now, we can move on to operations that compare data to ensure its accuracy. These operations look at whether data is:

Complete. Look for missing fields and attributes, and make sure that all records are in the datasets to be processed.

Unique. Data sets should have no redundancy of records. Apply clear deduplication rules for each specific data set. If redundant records indicate deeper problems in the source dataset, generate clear diagnostic notifications.

Consistent. Identify specifically data values that do not match required specifications, such as precision in decimals or matching a predetermined set of allowable values. This prepares the data for comparisons that apply in the next stage.

Reconciled. Rooting out this type of inconsistency involves comparison with other datasets, so they can be merged and joined in Stage 4. You could filter for keys in transactional tables that don’t appear in a master table, for example, or apply partitioning strategies that affect later merge operations.

Scanned. Here we focus on data range outliers. These operations can be performed with simple filters, and are sometimes used to identify parameters used elsewhere in the data flow. For example, a data scan may determine the 3-sigma range of the distribution (the upper and lower limits containing 99.7% of the values when the data is normally distributed).
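To make the last two checks concrete, here is a minimal sketch of a reconciliation filter (transaction keys missing from a master table) and a 3-sigma scan. The table layouts and sample values are invented for illustration, and the limits are derived from a separate baseline sample, which is one possible design choice among several.

```python
from statistics import mean, pstdev


def orphan_keys(transactions, master, key):
    """Return transaction rows whose key has no match in the master table."""
    known = {row[key] for row in master}
    return [row for row in transactions if row[key] not in known]


def three_sigma_limits(values):
    """Lower and upper limits at the mean plus or minus 3 standard deviations."""
    mu = mean(values)
    sigma = pstdev(values)
    return mu - 3 * sigma, mu + 3 * sigma


def outliers(values, limits):
    """Return the values that fall outside the (low, high) limits."""
    low, high = limits
    return [v for v in values if v < low or v > high]


# Hypothetical master and transactional tables.
master = [{"customer_id": 1}, {"customer_id": 2}]
transactions = [{"txn": 100, "customer_id": 1}, {"txn": 101, "customer_id": 3}]
orphans = orphan_keys(transactions, master, "customer_id")

# Limits come from a baseline sample and are then applied to a new batch.
baseline = [8, 12, 8, 12, 8, 12]
limits = three_sigma_limits(baseline)
flagged = outliers([10, 15, 25, 3], limits)

print(orphans)
print(flagged)
```

Computing the limits once and reusing them downstream is an example of a scan producing parameters that other steps in the data flow consume.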

Typically, data accuracy operations can be applied immediately after the dataset has passed the validation stage. They can include corrective actions that “fix” the data or “filter” it from further processing.
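The difference between fixing and filtering can be sketched as follows. The required fields, the allowed status values, and the "first occurrence wins" deduplication rule are all assumptions chosen for the example; real rules would be specific to each dataset.

```python
ALLOWED_STATUS = {"open", "shipped", "closed"}  # hypothetical allowed values
REQUIRED = ("order_id", "status", "amount")     # hypothetical required fields


def assure_accuracy(rows):
    """Split rows into (clean, rejected) using completeness, consistency, and uniqueness checks."""
    clean, rejected, seen = [], [], set()
    for row in rows:
        # Complete: every required field is present and not None.
        if any(row.get(field) is None for field in REQUIRED):
            rejected.append((row, "incomplete"))
            continue
        # "Fix": normalize values that can be corrected safely.
        fixed = dict(
            row,
            status=str(row["status"]).strip().lower(),
            amount=round(float(row["amount"]), 2),
        )
        # Consistent: the status must be one of the allowed values.
        if fixed["status"] not in ALLOWED_STATUS:
            rejected.append((row, "invalid status"))
            continue
        # Unique: the first occurrence of an order_id wins.
        if fixed["order_id"] in seen:
            rejected.append((row, "duplicate"))
            continue
        seen.add(fixed["order_id"])
        clean.append(fixed)
    return clean, rejected


rows = [
    {"order_id": 1, "status": " Shipped ", "amount": "19.999"},
    {"order_id": 1, "status": "shipped", "amount": 20.0},
    {"order_id": 2, "status": "lost", "amount": 5},
    {"order_id": 3, "status": "open", "amount": None},
]
clean, rejected = assure_accuracy(rows)
print(clean)
print([reason for _, reason in rejected])
```

Keeping the rejected rows together with a reason, rather than silently dropping them, is what makes diagnostic notifications possible.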

Several master data management techniques could be applicable in this stage. For example, using data warehousing terminology, duplicate dimensions are often associated with bifurcation in fact tables, as transaction records are linked to each of the duplicate dimensions. A deduplication operation needs to reconcile both the dimensional and associated transaction records.
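A minimal sketch of that reconciliation, assuming a customer dimension matched on normalized email and a rule that the lowest `customer_id` survives (both assumptions for illustration only):

```python
def deduplicate_dimension(customers, orders):
    """Merge duplicate customers by normalized email and repoint orders to the survivors."""
    survivor_by_email = {}
    remap = {}
    for customer in sorted(customers, key=lambda c: c["customer_id"]):
        email = customer["email"].strip().lower()
        # The first (lowest) customer_id seen for an email becomes the survivor.
        survivor = survivor_by_email.setdefault(email, customer["customer_id"])
        remap[customer["customer_id"]] = survivor
    deduped = [c for c in customers if remap[c["customer_id"]] == c["customer_id"]]
    # Repoint fact rows so that none reference a removed duplicate.
    repointed = [
        dict(o, customer_id=remap.get(o["customer_id"], o["customer_id"]))
        for o in orders
    ]
    return deduped, repointed


customers = [
    {"customer_id": 1, "email": "Ann@Example.com"},
    {"customer_id": 2, "email": "ann@example.com "},
    {"customer_id": 3, "email": "bob@example.com"},
]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 2},
    {"order_id": 12, "customer_id": 3},
]
deduped, repointed = deduplicate_dimension(customers, orders)
print([c["customer_id"] for c in deduped])
print([o["customer_id"] for o in repointed])
```

The point of returning both tables is that the dimension and the fact records are corrected in the same operation, so the bifurcation does not survive the cleanup.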

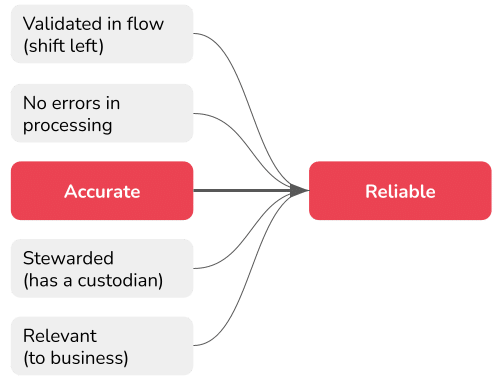

Stage 3: Make Your Data Reliable

The first two stages are more technical in nature and don’t require much business context to implement. For that reason, you should apply the operations that validate, assure accuracy, and make data reliable as early as possible in the data life cycle and, ideally, entirely automate them. This idea of performing these operations as early as possible is colloquially known as “shifting left,” referring to the common representation of data flowing from left to right in visualizations.

In Stage 3, we continue this practice of “shifting left.” We elevate data from “accurate,” but perhaps not yet useful to the business, to “reliable” data products that directly address business questions. This is achieved by applying business-driven validations and transformations. The goal is for business stakeholders to be ready to take action with the data.

These processing steps require significant business interactions to define, and sometimes many sequential operations to implement. In practice, they are best defined with the use of a highly visual, interactive UI clearly describing the order of operations. The outcome is reliable data that is:

Relevant. The data product is designed to drive the decision-making process, and includes all of the information required, regardless of whether it feeds reports, dashboards, or ML models.

Processed without errors. The platform performing the operations should include self-monitoring features that detect, and where possible auto-correct, many types of processing errors. For example, database queries and jobs often fail, leaving residual data that pollutes datasets and requires cleanup before you can rerun the query. This step includes the logging and alerting of errors that help focus the engineers who are intervening to resolve the problem. “Processed without errors” means that engineering intervention is completed and no remaining errors languish unaddressed.

Backed by a data steward. Reliable data requires business stakeholders who stand behind it and provide guarantees to any downstream data users. This is a non-technical yet critical dimension to creating trust in the data, and you should establish it as part of this stage. We recommend against paradigms that call for a data steward to take responsibility only after the data engineering is complete.

Placing these steps at the end of data engineering processes is a common mistake. This results in many wasteful iterations, as business users reject data, or worse, make decisions with bad data that looked good to the engineers. Data stewards may even apply early assessments with the community of users who will rely on the data to reveal problem areas. “Shifting left” such assessments, while Stage 4 is still in progress, can accelerate the data life cycle significantly.
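Returning to the “processed without errors” property, the sketch below shows one way a job runner might clean up residual data from a failed attempt before retrying, and log each failure for the engineers. The in-memory `table`, the `run_id` tagging scheme, and the `flaky_job` function are hypothetical stand-ins for a real target table and job.

```python
import logging

logger = logging.getLogger("pipeline")


def run_with_cleanup(job, target, run_id, max_attempts=3):
    """Run job(run_id), appending its rows to target; on failure, remove partial rows and retry."""
    for attempt in range(1, max_attempts + 1):
        try:
            for row in job(run_id):
                target.append({"run_id": run_id, **row})
            return attempt
        except Exception as exc:
            # Remove residual rows written by the failed attempt before retrying.
            target[:] = [r for r in target if r["run_id"] != run_id]
            logger.warning("run %s attempt %d failed: %s", run_id, attempt, exc)
    raise RuntimeError(f"run {run_id} failed after {max_attempts} attempts")


calls = {"n": 0}


def flaky_job(run_id):
    """Hypothetical job that fails midway through its first attempt."""
    calls["n"] += 1
    yield {"value": 1}
    if calls["n"] == 1:
        raise IOError("connection dropped")
    yield {"value": 2}


table = [{"run_id": "r0", "value": 0}]
attempts = run_with_cleanup(flaky_job, table, "r1")
print(attempts)
print(table)
```

Tagging every row with the run that produced it is what makes the cleanup safe: only the failed run's rows are removed, and earlier results stay intact.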

Stage 4: Make Your Data Trustworthy

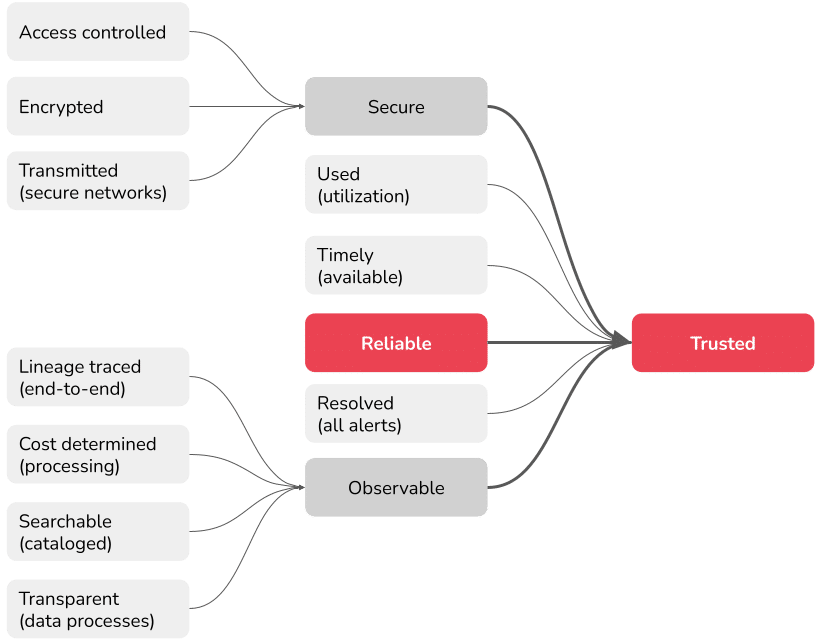

This stage addresses several aspects that elevate data from being reliable and useful to actually being trusted for day-to-day operational decision making. This means addressing two systemic areas that fall on data and infrastructure engineers to implement, after which the data is:

Secure: The company has control over who can access data where in its life cycle and what those users and systems can do; when and how the data is encrypted along its life cycle; and how data is transmitted between platforms and systems, including over secure networks.

Observable: All platforms that touch data should be able to monitor and track all operations through the full data life cycle. The lineage must be traced end to end, and the interim and final data products should be cataloged and searchable. All the data processes should be logged and transparent to properly authorized users, and should report the costs incurred to process and manage them.

A holistic approach to data trustworthiness includes both human processes and technology. Wherever possible, you should automate processes for tracking, auditing, and detecting problems with data. Furthermore, in order for data to be trustworthy, our experience in operating data platforms has identified three more aspects to test and see if data is:

Timely. “Shifting left” and applying any processing as early as possible in the life cycle helps data products be ready to use as quickly as possible. In addition, processing data incrementally as soon as it arrives means there is less waiting for large batch runs to complete.

Used by the business. Tracking how and when data is used for reports, dashboards, ML models, etc. provides important insight into the relative importance of data products. It also means detecting when data products fall into disuse, so they can be deprecated and no longer incur processing costs. Conserving resources and demonstrating savings also help increase trust among data teams and the rest of the business.

Resolved. The platforms and systems that create trustworthy data should be instrumented to raise notifications and alerts about their operations. These messages should be analyzed and resolved in their own right, to make sure problems don’t creep into any point in the life cycle over time.
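As one small example of the “used by the business” check, the sketch below flags data products that have not been read recently. The product names, the last-read dates, and the 90-day idle threshold are assumptions for illustration; in practice the usage data would come from query logs or a catalog.

```python
from datetime import date, timedelta


def stale_products(last_used, today, max_idle_days=90):
    """Return the sorted names of data products not read within max_idle_days."""
    cutoff = today - timedelta(days=max_idle_days)
    return sorted(
        name
        for name, used in last_used.items()
        # None means the product has never been read at all.
        if used is None or used < cutoff
    )


# Hypothetical last-read dates per data product.
last_used = {
    "daily_revenue": date(2024, 6, 1),
    "legacy_churn_report": date(2023, 11, 15),
    "never_read_extract": None,
}
candidates = stale_products(last_used, today=date(2024, 6, 10))
print(candidates)
```

The output is a list of deprecation candidates for a human to review, not an automatic deletion list.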

The Result: Making Confident, Data-Driven Decisions

Approaching data integrity with this staged, actionable approach provides a rich set of “hooks” and checkpoints that can be instrumented and measured. This means you can determine a set of metrics that quantify data “health” depending on the complexity of your specific data environment.

With metrics in place at each stage, you can indicate the trustworthiness of your data, and partner better with business users around how to use data to improve your company’s operations. Understanding the sources of risk and flaws gives the business the ability to make decisions in imperfect data environments, support compliance objectives, and improve innovation to focus on high-impact areas.
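One simple form such metrics could take is a pass rate per stage, computed from the results of individual checks. The stage names and check results below are invented sample data, and a pass rate is only one of many possible health indicators.

```python
def stage_health(check_results):
    """Compute the pass rate per stage from (stage, passed) tuples."""
    totals, passes = {}, {}
    for stage, passed in check_results:
        totals[stage] = totals.get(stage, 0) + 1
        passes[stage] = passes.get(stage, 0) + (1 if passed else 0)
    return {stage: passes[stage] / totals[stage] for stage in totals}


# Hypothetical check outcomes collected during one pipeline run.
results = [
    ("validate", True),
    ("validate", True),
    ("accuracy", True),
    ("accuracy", False),
    ("reliable", True),
    ("trustworthy", False),
]
health = stage_health(results)
print(health)
```

Tracked over time, a per-stage score like this shows where in the life cycle flaws are introduced.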

Ultimately, when you have valid, accurate, reliable, and trustworthy data, accompanied by specific indicators of what kind of flaws the data has, you’re in a solid position to use it to make intelligent, informed, and confident decisions in your business.

Why are data pipelines critical to implementing real data integrity?

Now that we have a concrete set of stages to drive and improve data integrity, how do you implement a life cycle that automates and delivers trustworthy data?

For starters, we must acknowledge that to make your data usable, you have to process it. One could say that the only really trustworthy data is raw data directly in source systems. Proponents of this approach often simply replicate raw system data into a data lake or data warehouse, and designate it as pure, unaltered, and of the highest achievable quality.

In fact, this approach simply pushes all the aspects of data integrity into the hands of the downstream report writers, dashboard coders, and ML engineers. In this approach, validating data, assuring data accuracy, making it reliable, and assuring trustworthiness happens in bespoke code and black boxes. It’s the ultimate “shift right” strategy, and it rarely works in enterprises.

In reality, processing data through transparent, measurable stages that result in trustworthy data requires a data life cycle. Data pipelines are integral to implementing life cycles because they are inherently sequential operations that deliver actionable steps in measurable stages backed by high levels of automation.

Data pipelines implement data life cycles with a minimum of custom code, and custom code is a common source of bugs and errors. Using the native capabilities of a pipeline platform that packs plenty of automation is a perfect match to deliver on a data integrity strategy. For capabilities that are not native to the platform, the data pipeline construct is still invaluable to orchestrate the non-native libraries and tools that perform specific operations, such as detecting statistical outliers in datasets.
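The idea of sequential, measurable stages can be sketched in a few lines. This is a toy illustration rather than how any pipeline platform is implemented; the stage functions and the "positive amounts only" business rule are hypothetical.

```python
def run_pipeline(rows, stages):
    """Apply each (name, function) stage in order and record row counts in and out."""
    report = []
    for name, fn in stages:
        rows_in = len(rows)
        rows = fn(rows)
        report.append({"stage": name, "rows_in": rows_in, "rows_out": len(rows)})
    return rows, report


def validate(rows):
    # Keep rows whose amount has a numeric type.
    return [r for r in rows if isinstance(r.get("amount"), (int, float))]


def deduplicate(rows):
    # Keep the first row seen for each id.
    seen, out = set(), []
    for r in rows:
        if r["id"] not in seen:
            seen.add(r["id"])
            out.append(r)
    return out


def apply_business_rule(rows):
    # Hypothetical business rule: only positive amounts are reportable.
    return [r for r in rows if r["amount"] > 0]


raw = [
    {"id": 1, "amount": 10.0},
    {"id": 1, "amount": 10.0},
    {"id": 2, "amount": "n/a"},
    {"id": 3, "amount": -5.0},
]
stages = [
    ("validate", validate),
    ("accuracy", deduplicate),
    ("reliable", apply_business_rule),
]
final_rows, report = run_pipeline(raw, stages)
for entry in report:
    print(entry)
```

Because every stage passes through the same runner, each one gets a measurement hook for free, which is the property that makes pipelines a natural fit for the staged framework.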

Data Pipelines Are the Way to Ensure Data Integrity

It has become a standard trope that data quality is vital to be able to make confident, data-driven business decisions. But an actionable framework has been missing to organize the methods and strategies into measurable stages to deliver data integrity quickly and at scale.

Today, the emergence of data pipeline automation closes the final gap, bringing to the table the rigor to deliver this data integrity framework at scale while avoiding complexity and unnecessary costs. With complete data pipeline automation platforms now just a mouse click away, data pipelines have become the natural framework to operationalize data integrity, and simplify and accelerate your data integrity project.

That is the magic of data pipelines.
