Introducing the Autonomous Dataflow Service!

This week, the team at Ascend is launching our Autonomous Dataflow Service, which enables data engineers to build, scale, and operate continuously optimized, Apache Spark-based pipelines.

How We’re Helping Drive Efficiency & Reduce Spend

As we all navigate these uncertain times, one thing has never been more certain: the need to reduce spend. Every dollar saved translates directly into employees kept, strategic investments continued, and savings passed on to customers. Finding unnecessary spend and inefficiencies across the business is more important than ever.

Bloor Group Webinar | Beyond Pipelines – The Power of Data Orchestration

The combination of artificial intelligence and data pipelines opens remarkable new opportunities for data orchestration. The end result is a dynamic, automated paradigm for data movement, one that optimizes […]

Ascend Declarative Pipeline Workflows Address the Challenges Facing DataOps Today

At Ascend, we are excited to introduce a new paradigm for managing the development lifecycle of data products: Declarative Pipeline Workflows. Building on the DevOps community's move toward declarative descriptions and leveraging Ascend’s Dataflow Control Plane, Declarative Pipeline Workflows let data engineers develop, test, and productionize data pipelines with an agility and stability that have so far been lacking in the DataOps world.
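To make the idea of a declarative workflow concrete, here is a minimal sketch. It assumes a pipeline is described as a set of named components, each defined by a SQL string. The engineer edits only the desired spec, and a planner diffs it against what is currently deployed to work out which components to create, update, or delete. `Component` and `plan` are hypothetical names used for illustration. They are not Ascend's API, and the actual Declarative Pipeline Workflows tooling may work quite differently.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """Hypothetical pipeline component: a name plus the SQL that defines it."""
    name: str
    sql: str


def plan(desired: dict[str, Component], deployed: dict[str, Component]) -> dict[str, list[str]]:
    """Diff the declared spec against the deployed state and list the actions needed."""
    # Declared but not yet deployed.
    create = sorted(n for n in desired if n not in deployed)
    # Deployed but no longer declared.
    delete = sorted(n for n in deployed if n not in desired)
    # Present in both, but the definition changed.
    update = sorted(n for n in desired if n in deployed and desired[n] != deployed[n])
    return {"create": create, "update": update, "delete": delete}


if __name__ == "__main__":
    deployed = {
        "raw_orders": Component("raw_orders", "SELECT * FROM source.orders"),
        "legacy_report": Component("legacy_report", "SELECT 1"),
    }
    desired = {
        "raw_orders": Component("raw_orders", "SELECT * FROM source.orders WHERE valid"),
        "daily_totals": Component("daily_totals", "SELECT day, SUM(amount) FROM raw_orders GROUP BY day"),
    }
    print(plan(desired, deployed))
    # {'create': ['daily_totals'], 'update': ['raw_orders'], 'delete': ['legacy_report']}
```

Because the spec is the single source of truth, applying the same spec twice yields an empty plan. That property is what makes declarative descriptions easy to review, test, and promote between environments.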

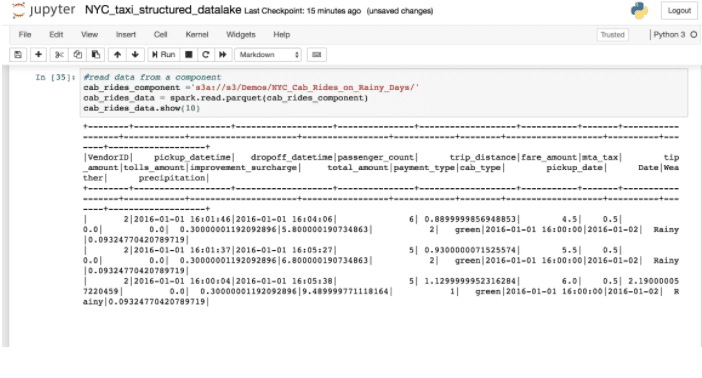

Building the Ascend Structured Data Lake

We run everything on Kubernetes and manage system state in MySQL plus a large-scale blob store (Google Cloud Storage/S3/Azure Blob Storage).
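The split between a relational database for system state and a blob store for bulk data can be sketched as follows, under several assumptions. `sqlite3` stands in for MySQL, and a local temporary directory stands in for the blob store. Blobs are content-addressed by their SHA-256 digest, so identical data is written only once. The `StateStore` class, the `partitions` table, and the content-addressing scheme are all hypothetical illustrations and do not describe Ascend's actual schema.

```python
import hashlib
import sqlite3
import tempfile
from pathlib import Path
from typing import Optional


class StateStore:
    """Hypothetical split store: small metadata rows in SQL, bulk bytes in a blob store.

    sqlite3 stands in for MySQL; a local directory stands in for
    Google Cloud Storage / S3 / Azure Blob Storage.
    """

    def __init__(self, blob_root: Path) -> None:
        self.db = sqlite3.connect(":memory:")
        self.db.execute(
            "CREATE TABLE partitions ("
            " component TEXT, partition_key TEXT, blob_key TEXT,"
            " PRIMARY KEY (component, partition_key))"
        )
        self.blob_root = blob_root

    def put(self, component: str, partition_key: str, data: bytes) -> str:
        # Content-address the blob so identical bytes are stored only once.
        blob_key = hashlib.sha256(data).hexdigest()
        blob_path = self.blob_root / blob_key
        if not blob_path.exists():
            blob_path.write_bytes(data)
        # The metadata row only records which blob holds this partition's data.
        self.db.execute(
            "INSERT OR REPLACE INTO partitions VALUES (?, ?, ?)",
            (component, partition_key, blob_key),
        )
        self.db.commit()
        return blob_key

    def get(self, component: str, partition_key: str) -> Optional[bytes]:
        row = self.db.execute(
            "SELECT blob_key FROM partitions WHERE component = ? AND partition_key = ?",
            (component, partition_key),
        ).fetchone()
        if row is None:
            return None
        return (self.blob_root / row[0]).read_bytes()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        store = StateStore(Path(tmp))
        payload = b"day,total\n2020-04-01,42\n"
        k1 = store.put("daily_totals", "2020-04-01", payload)
        k2 = store.put("daily_totals_copy", "2020-04-01", payload)
        print(k1 == k2)  # True: identical bytes share one blob
        print(store.get("daily_totals", "2020-04-01"))
```

Keeping only small pointer rows in the database keeps transactions cheap, while the blob store absorbs the large, immutable payloads. Against MySQL itself, the SQLite-specific `INSERT OR REPLACE` would become `REPLACE INTO` or `INSERT ... ON DUPLICATE KEY UPDATE`.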