Continuous Integration and Continuous Delivery (CI/CD) has transformed software development by enabling faster, safer deployments – and data teams are now realizing these same benefits must extend to data pipelines and analytics code. But applying CI/CD in a data context comes with unique challenges.

In this guide, we’ll explore general CI/CD principles and dive into data-specific hurdles, with practical best practices to help data teams foster a robust, tool-agnostic CI/CD process.

Why? Because the reliability and agility of your data pipelines can make or break decision-making. Adopting CI/CD methodologies for data isn’t just nice to have – it’s pivotal for ensuring high-quality, timely data in production. Let’s break down how to do it right.

What is CI/CD? (A Quick Primer)

Before diving into specifics for data teams, let’s clarify CI/CD in general terms:

- Continuous Integration (CI): A practice where developers frequently merge code changes into a central repository, triggering automated builds and tests. The goal is to catch integration issues early by regularly validating code with unit and integration tests. In other words, every code commit is automatically verified so that issues surface fast rather than months later. This keeps the codebase healthy and team members in sync.

- Continuous Delivery/Deployment (CD): A practice that automates releasing validated code to production (or production-like) environments. After CI has ensured code changes pass all tests, CD takes over to push those changes through staging and into production in an automated fashion. Only code that meets quality checks makes it to deployment, either with the click of a button (continuous delivery) or fully automatically (continuous deployment). The core idea is reducing manual effort and risk in releases.

In summary: CI/CD forms a pipeline where code is integrated, tested, and deployed continuously. This results in quicker iterations and more reliable software delivery. Data teams can reap these same rewards – but need to adapt the principles to the data world.

Why Data Teams Need CI/CD (Beyond Software DevOps)

Data engineering has traditionally lagged behind software development in automation practices. But the gap is closing. Forward-thinking data organizations (inspired by the DevOps movement) are adopting DataOps. Just as DevOps revolutionized app development, DataOps and CI/CD can revolutionize data pipeline development by bringing speed and trust to data delivery.

Key reasons CI/CD is critical for data teams:

- Reliability of Data Pipelines: Data pipelines often feed dashboards, machine learning models, or reports. A broken pipeline or bad data can cascade into poor decisions. CI/CD helps catch issues (bugs in transformation code, schema mismatches, etc.) early in the development cycle, rather than after deployment. This means fewer midnight firefights due to broken ETL jobs.

- Faster Development Cycles: Modern businesses demand quick delivery of new data products and insights. CI/CD allows data teams to make small, incremental changes to pipelines or SQL models and release them frequently rather than in big, risky batches.

- Consistency Across Environments: Data teams often juggle development, staging, and production environments for databases, pipelines, and analytics. Without automation, it’s easy for these environments to drift (leading to “works on dev but breaks in prod” scenarios). CI/CD enforces environment setup and deployments via code, ensuring that changes (like a new table or updated DAG) are applied consistently across environments.

- Team Collaboration and Confidence: A CI/CD process acts as a safety net. Data engineers can merge changes with confidence knowing tests and validations will run. This encourages collaboration and iterative improvements instead of siloed work. It also fosters a culture of accountability – if a data transformation fails a test, the team knows immediately and can fix it before it affects others.

In short, data teams need CI/CD for the same reasons software teams do: to deliver faster, better, more reliable outcomes. However, applying CI/CD to data projects isn’t a copy-paste of software CI/CD. There are unique challenges to address.

Unique Challenges of CI/CD for Data Pipelines

Implementing CI/CD in data environments introduces distinct complexities that software teams may not face. Being aware of these pain points will help you design a workflow that truly fits your data team’s needs. Here are some common challenges and how to think about them:

- Handling Large Data & Long-Running Jobs: Unlike a small web app, data pipelines might process millions of records or perform heavy computations. Tests and deployment processes must accommodate big data volumes. Running an entire pipeline on massive prod datasets for every code change isn’t feasible.

Tip: Use data stubs or sampled datasets for CI tests to keep things fast, and have integration tests that run on a subset representative of production scale.
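One way to build a sampled dataset for CI is to select rows by hashing a key rather than by random choice, so every run sees the same subset. The following is a minimal sketch under that assumption; the `orders` records, the `order_id` field, and the helper names are hypothetical and only serve the illustration.

```python
import hashlib


def in_sample(key: str, percent: int) -> bool:
    """Return True if `key` falls into a stable `percent`% sample.

    Hashing the key (instead of calling random) selects the same rows
    on every CI run, which keeps test results reproducible.
    """
    digest = hashlib.sha256(key.encode("utf-8")).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket in the range 0..99
    return bucket < percent


def sample_rows(rows, key_field: str, percent: int):
    """Keep only the rows whose key hashes into the sample."""
    return [row for row in rows if in_sample(str(row[key_field]), percent)]


# Hypothetical input: 1,000 order records keyed by order_id.
orders = [{"order_id": i, "amount": i * 1.5} for i in range(1000)]
ci_sample = sample_rows(orders, "order_id", percent=10)
```

Because the selection depends only on the key, the same approach can be applied to related tables (for example, sampling by customer ID) so that joins in the sampled data still line up.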

- Schema Changes and Data Drift: In data projects, the “code” is not the only thing evolving – database schemas and reference data evolve too. Managing schema changes across development, staging, and production is tricky. A simple column rename can break downstream ETL tasks or dashboards. Traditional CI pipelines don’t handle database migrations out of the box.

Solution: Treat schema migrations as part of your deployment (e.g., with versioned migration scripts), and include checks for schema consistency. You may also want to consider using an ETL tool with automated solutions for schema drift, like Ascend.
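To make the idea of versioned migration scripts concrete, here is a small sketch that uses Python's built-in `sqlite3` module as a stand-in for a real warehouse. The `MIGRATIONS` dictionary, the `schema_version` table, and the `customers` table are all assumptions made for this example; dedicated migration frameworks handle many more cases.

```python
import sqlite3

# Hypothetical migrations, keyed by version number and applied in order.
MIGRATIONS = {
    1: "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)",
    2: "ALTER TABLE customers ADD COLUMN email TEXT",
}


def apply_migrations(conn: sqlite3.Connection) -> int:
    """Apply migrations newer than the recorded version; return the new version."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS schema_version (version INTEGER NOT NULL)"
    )
    row = conn.execute("SELECT MAX(version) FROM schema_version").fetchone()
    current = row[0] or 0  # MAX() returns NULL on an empty table
    for version in sorted(MIGRATIONS):
        if version > current:
            conn.execute(MIGRATIONS[version])
            conn.execute(
                "INSERT INTO schema_version (version) VALUES (?)", (version,)
            )
            current = version
    conn.commit()
    return current


def table_columns(conn: sqlite3.Connection, table: str):
    """Return the column names of `table` in declaration order."""
    return [row[1] for row in conn.execute(f"PRAGMA table_info({table})")]


conn = sqlite3.connect(":memory:")
final_version = apply_migrations(conn)
```

A CI job could run the same function against a fresh database and compare `table_columns` with the columns the pipeline code expects, which is one simple form of schema consistency check.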

- Testing Data Quality, Not Just Code: Software tests usually assert functionality (input X yields output Y). Data pipeline tests must also validate data quality – e.g., did the daily job produce the expected row count? Are there nulls in critical fields?

Best practice: Incorporate data quality gates in your data pipelines (with tools like Ascend you can ensure data quality and integrity from ingestion through delivery).
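As a tool-agnostic illustration, a data quality gate can be as simple as a function that inspects a batch and reports what is wrong with it. This is a sketch only: `check_quality`, the field names, and the thresholds are hypothetical, and it covers just the two checks mentioned above (row count and nulls in critical fields).

```python
def check_quality(rows, required_fields, min_rows):
    """Return a list of failure messages; an empty list means the gate passes."""
    failures = []
    if len(rows) < min_rows:
        failures.append(f"expected at least {min_rows} rows, got {len(rows)}")
    for field in required_fields:
        # A missing key and an explicit None are both treated as null.
        nulls = sum(1 for row in rows if row.get(field) is None)
        if nulls:
            failures.append(f"{nulls} null value(s) in required field '{field}'")
    return failures


# Hypothetical batches produced by a daily job.
good_batch = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 12.5}]
bad_batch = [{"id": 1, "amount": None}]

good_result = check_quality(good_batch, ["id", "amount"], min_rows=2)
bad_result = check_quality(bad_batch, ["id", "amount"], min_rows=2)
```

In a pipeline, a non-empty result would typically fail the step (for example, by exiting with a non-zero status) so that the bad batch never reaches downstream consumers.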

- Diverse Toolchain Integration: Data stacks are often heterogeneous – your pipeline might involve Python scripts, SQL queries, Spark jobs, BI tool extracts, etc., each with its own tooling. Ensuring your CI/CD pipeline can interface with all the necessary components (e.g., running a dbt test, deploying an Airflow DAG, updating a Spark job) is a challenge.

Keep it simple: If your data workflow spans cloud functions, databases, and more, you might need custom scripts or adapters in your pipeline. Aim for a unified process despite the tool diversity. Consider simplifying your data stack with a single, end-to-end solution that lets you execute your scripts and queries from a unified platform. With tools like Ascend, you can build and deploy CI/CD pipelines without integrating multiple tools, which can make your pipelines and processes more agile and maintainable.

- Data Sensitivity and Compliance: Data often includes sensitive information. Copying production data into a test environment for CI can raise compliance issues or risks.

Mitigation: Use anonymized or synthetic data in CI tests, or leverage data masking techniques when replicating production data. Additionally, ensure your CI/CD pipeline has proper access controls – the pipeline will likely need credentials to run SQL or access storage; manage those secrets securely (e.g., vaults, not in plain text).
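The sketch below shows one possible masking approach: replacing sensitive values with a keyed hash, with the key read from an environment variable rather than hard-coded. `MASKING_SECRET`, the field names, and the helper functions are assumptions for illustration. Note that keyed hashing is pseudonymization rather than full anonymization, so whether it satisfies your compliance requirements is something to confirm with your own policies.

```python
import hashlib
import hmac
import os


def mask_value(value: str, secret: bytes) -> str:
    """Replace a sensitive value with a truncated keyed hash (HMAC-SHA256)."""
    return hmac.new(secret, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def mask_rows(rows, sensitive_fields, secret: bytes):
    """Return copies of `rows` with the sensitive fields masked; nulls stay null."""
    masked = []
    for row in rows:
        copy = dict(row)  # leave the original row untouched
        for field in sensitive_fields:
            if copy.get(field) is not None:
                copy[field] = mask_value(str(copy[field]), secret)
        masked.append(copy)
    return masked


# MASKING_SECRET is a hypothetical variable name. In CI it would be injected
# by a secrets manager; the fallback here exists only so the demo runs locally.
secret = os.environ.get("MASKING_SECRET", "local-demo-only").encode("utf-8")

rows = [
    {"id": 1, "email": "ana@example.com", "plan": "pro"},
    {"id": 2, "email": None, "plan": "free"},
]
masked = mask_rows(rows, ["email"], secret)
```

The same input always maps to the same masked value for a given secret, so joins and duplicate checks on the masked column keep working in tests.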

- Environment Parity: Many data teams struggle with inconsistent development/testing environments. Perhaps the dev environment is a small warehouse with different settings, or uses stubbed external sources that behave differently than real ones. For data teams, environment parity means your transformations, libraries, and even data schemas should mirror production as closely as possible in test environments. Investing in a realistic staging setup (with smaller but structurally similar data) pays dividends.

- Cultural Shift and Skills: On the human side, data engineers may not be as familiar with CI/CD tools and DevOps practices. There can be a learning curve and an initial slowdown as the team builds expertise. Also, data analysts or scientists producing SQL or notebooks might not be used to version control and automated tests. Overcoming this requires training and cultural buy-in.

Advice: Start small and celebrate wins. Perhaps begin by version-controlling your ETL scripts and adding a couple of simple tests in a CI pipeline. Demonstrate how catching a bug early saved a lot of trouble – this builds momentum and support for CI/CD practices.

Recognizing these challenges helps in crafting solutions. Next, let’s outline best practices that address these pain points and set your data team up for CI/CD success.

Best Practices for CI/CD in Data Teams

How can data teams implement CI/CD effectively, given the above considerations? Below are seven best practices – tool-agnostic principles – to guide your strategy. These will help ensure your CI/CD pipeline is robust and tailored to data workflows:

1. Version Control Everything (Code, Config, and Schema)

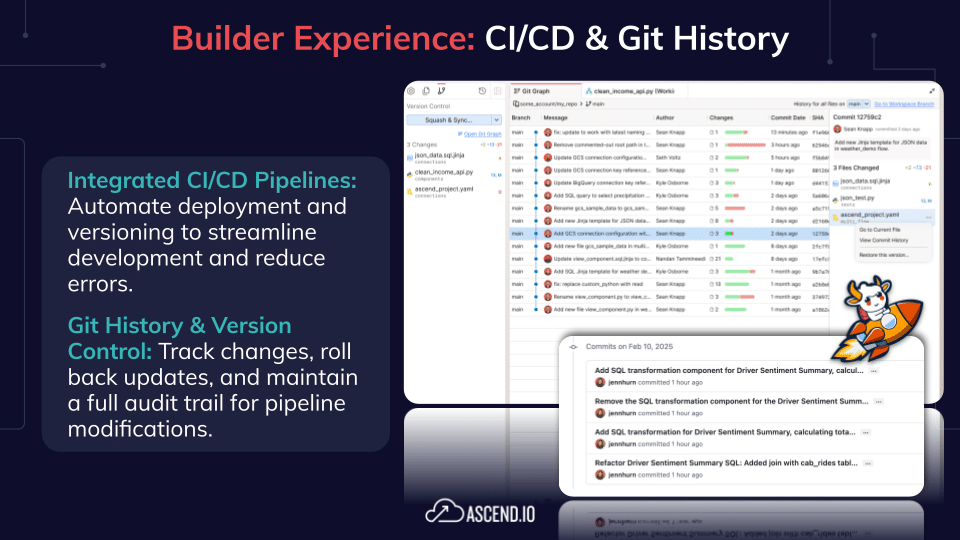

Use Git or another version control for all data pipeline artifacts – not just code, but SQL scripts, pipeline definitions (DAGs), infrastructure-as-code, and even database schema migration scripts. Treat your data warehouse schema as code by tracking changes in DDL scripts or using frameworks that manage migrations. Version control is the foundation of CI; you can’t have reliable integration if people are editing code or SQL ad-hoc in production. By having everything in version control, you enable team collaboration and traceability for every change.

2. Automate Testing at Multiple Levels

Aim for a testing pyramid adapted to data, including:

- Unit tests for any code components (e.g., a Python function that transforms a DataFrame) – these run quickly and validate logic.

- Pipeline integration tests – e.g., run an entire pipeline on a small sample of input data and verify the outputs. This catches issues in how components work together.

- Data quality tests – validate the result of pipelines. Automate these checks in CI/CD so that a pipeline only proceeds to deployment if it produces acceptable results on test data.

Automating these tests builds confidence that code changes won’t wreck your data. Importantly, maintain realistic test data sets. Synthetic data can simulate the edge cases that real data contains (like nulls or unexpected characters). Also, clean up any test data written to test databases after the tests run, to keep environments tidy.
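At the base of the pyramid, a unit test for a transformation can look like the sketch below. `normalize_customer` is a hypothetical transformation invented for this example, and the tests are written as plain functions with `assert` statements, a style that test runners such as pytest can discover.

```python
def normalize_customer(record: dict) -> dict:
    """Hypothetical transformation: clean the email and default a missing country."""
    email = record.get("email")
    return {
        "id": record["id"],
        # Empty strings and None both become None.
        "email": email.strip().lower() if email else None,
        "country": (record.get("country") or "UNKNOWN").upper(),
    }


def test_cleans_email_and_country():
    raw = {"id": 1, "email": "  Ana@Example.COM ", "country": "br"}
    assert normalize_customer(raw) == {
        "id": 1,
        "email": "ana@example.com",
        "country": "BR",
    }


def test_handles_missing_values():
    # Synthetic edge case: null email and no country key at all.
    raw = {"id": 2, "email": None}
    assert normalize_customer(raw) == {
        "id": 2,
        "email": None,
        "country": "UNKNOWN",
    }
```

Tests like these run in milliseconds, which makes them a good fit for every commit, while the slower pipeline and data quality tests can run on a sampled dataset.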

3. Maintain Environment Parity (Keep Dev/Staging/Prod Aligned)

As noted, environment drift is a risk. Strive to make your development and staging environments as similar to production as possible. This means using the same database engine version, similar hardware configurations (scaled down, perhaps), and ideally a recent subset of production data (if allowed). If your production pipeline runs in Spark on Kubernetes, don’t test only on a local pandas script – incorporate a similar execution environment for tests. The closer your test environment is to reality, the more confidently you can deploy.
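A lightweight way to keep an eye on parity is to describe each environment as data and compare the descriptions in CI. This is a sketch under the assumption that you can collect such settings yourself; the keys (`engine_version`, `workers`, `timezone`) and the `parity_gaps` helper are hypothetical.

```python
def parity_gaps(prod: dict, staging: dict, allowed_to_differ=frozenset()):
    """Return {setting: (prod_value, staging_value)} for unexpected differences."""
    gaps = {}
    for key in sorted(set(prod) | set(staging)):
        if key in allowed_to_differ:
            continue  # e.g. cluster size is intentionally scaled down
        if prod.get(key) != staging.get(key):
            gaps[key] = (prod.get(key), staging.get(key))
    return gaps


# Hypothetical environment descriptions.
prod_env = {"engine_version": "15.4", "workers": 32, "timezone": "UTC"}
staging_env = {"engine_version": "14.9", "workers": 4, "timezone": "UTC"}

gaps = parity_gaps(prod_env, staging_env, allowed_to_differ={"workers"})
```

Here the scaled-down worker count is accepted, while the engine version mismatch is reported and could be used to fail the build.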

4. Continuous Delivery with Guardrails

For deployment, automate as much of the release process as you can. This might involve deploying updated ETL code to a production orchestrator (e.g., updating Airflow DAGs or releasing a new dbt project version) and applying schema changes. Use a CD pipeline to promote code from git into production systems in a repeatable way – no manual copying of SQL or clicking UIs.

However, give yourself guardrails: for data pipelines, a manual approval step before deploying to production is common, especially if the pipeline impacts many downstream users. Continuous Delivery can still be achieved by automating everything up to the point of final deployment, then having a data lead review and hit “go”.
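The guardrail logic itself is usually configured in your CI/CD tool, but the rule it encodes is simple enough to sketch in a few lines. The `Release` class, its fields, and the environment names below are hypothetical and only illustrate the decision: tests must always pass, and production additionally requires a named approver.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Release:
    version: str
    tests_passed: bool
    approved_by: Optional[str] = None  # set when a data lead signs off


def can_deploy(release: Release, environment: str) -> bool:
    """Decide whether a release may be promoted to the given environment."""
    if not release.tests_passed:
        return False  # nothing ships anywhere with failing tests
    if environment == "production":
        return release.approved_by is not None  # manual approval guardrail
    return True  # staging and other environments deploy automatically


candidate = Release(version="2024.01.2", tests_passed=True)
approved = Release(version="2024.01.2", tests_passed=True, approved_by="data-lead")
```

With this rule, `candidate` flows to staging on its own but waits for sign-off before production, which matches the "automate everything up to the final step" approach described above.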

5. Monitoring, Alerts, and Post-Deployment Validation

CI/CD doesn’t stop at deployment – what happens after you release a change is equally important. Establish monitoring on your data pipelines and quality. For instance, set up alerts for job failures, data delay (e.g., if a pipeline hasn’t produced data by its expected time), or data quality anomalies in production. Many teams forget this part and treat deployment as the finish line.
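As an example of a post-deployment check, the sketch below flags a dataset as stale when its latest load is older than an agreed delay. The six-hour threshold and the timestamps are made up for illustration; in practice the last load time would come from your orchestrator or warehouse metadata, and the result would feed whatever alerting channel you use.

```python
from datetime import datetime, timedelta, timezone


def is_stale(last_loaded_at: datetime, now: datetime, max_delay: timedelta) -> bool:
    """Return True if the most recent load is older than the allowed delay."""
    return (now - last_loaded_at) > max_delay


# Hypothetical values: data is expected at least every six hours.
max_delay = timedelta(hours=6)
now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)

on_time_load = datetime(2024, 1, 2, 9, 0, tzinfo=timezone.utc)  # 3 hours ago
late_load = datetime(2024, 1, 2, 3, 0, tzinfo=timezone.utc)  # 9 hours ago

on_time_is_stale = is_stale(on_time_load, now, max_delay)
late_is_stale = is_stale(late_load, now, max_delay)
```

Passing `now` in as an argument, instead of reading the clock inside the function, keeps the check easy to test.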

6. Plan for Failures: Rollback and Recovery

Even with tests and monitoring, failures happen. A truly mature CI/CD setup for data includes rollback mechanisms. If a pipeline deployment goes bad – say it starts writing corrupt data or crashes – you should be able to quickly revert to the last known good state. This might mean re-running the last stable code version, restoring a previous table schema, or backfilling data from backups.

Practice recovery scenarios: How would we restore yesterday’s pipeline if today’s deployment fails? Have scripts ready to drop in the previous ETL job or rebuild a table from backup. The CI/CD pipeline itself can assist here; for example, use version tags so you can redeploy an older version of the pipeline quickly. Also, maintain backups or data snapshots if the pipeline change might modify data irreversibly (for instance, if a job overwrites tables, keep a backup copy before deployment). You can take advantage of modern time-travel features available in cloud data warehouses like Snowflake and BigQuery, which allow you to query historical versions of tables or roll back data changes with minimal effort, as long as you are still within the configured retention window.
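If deployments are tagged and their health is recorded, picking a rollback target can be automated. The sketch below assumes a hypothetical deployment history kept as `(tag, healthy)` pairs, oldest first; how you record health and how you redeploy a tag depend on your own tooling.

```python
def last_known_good(history):
    """Return the newest healthy tag before the latest deployment, or None.

    `history` is a list of (tag, healthy) pairs ordered oldest to newest.
    The latest entry is skipped because it is the deployment being rolled back.
    """
    for tag, healthy in reversed(history[:-1]):
        if healthy:
            return tag
    return None


# Hypothetical history: v1.2 was just deployed and is misbehaving.
deployments = [("v1.0", True), ("v1.1", True), ("v1.2", False)]
rollback_target = last_known_good(deployments)
```

Redeploying `rollback_target` restores the code; restoring the data itself still relies on the backups, snapshots, or time-travel features mentioned above.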

7. Foster a DataOps Culture and Collaboration

Tools and processes are only as good as the team using them. Encourage practices that get the whole data team onboard with CI/CD. For example:

- Conduct CI/CD training sessions for data engineers and analysts to learn the workflow.

- Make CI/CD visible – display build status badges, celebrate when the team reaches X days with green builds, etc.

- Encourage cross-team knowledge sharing. Perhaps have a software engineer experienced in DevOps mentor the data team, and in turn, data engineers can educate DevOps folks about data-specific challenges. This cross-pollination of skills is invaluable.

- Finally, get management buy-in to allocate time for building CI/CD rather than just delivering features. Emphasize that this is an investment in quality and velocity that will pay off by reducing firefighting and enabling the team to scale output.

Each of these best practices helps mitigate the challenges we discussed earlier. Implementing them might be gradual – and that’s okay.

Conclusion: Turning CI/CD Into a Data Team Strength

Implementing CI/CD best practices in a data team is a journey, not an overnight switch. It requires balancing technical solutions with team culture changes. Start by getting the fundamentals right – version control, automated testing, and a simple pipeline that runs on each commit. Then iterate: add more sophisticated data quality checks, improve your deployment automation, and refine monitoring.

Remember that the challenges are surmountable. Yes, data pipelines bring unique complexities to CI/CD, from handling huge datasets to dealing with evolving schemas. But with a clear strategy, you can tackle these one by one.

In the end, CI/CD for data teams is about trust and velocity: trust that your data is correct and pipelines are reliable, and velocity to deliver new data features quickly.