Data pipelines are integral to business operations, regardless of whether they are meticulously built in-house or assembled using various tools. As companies become more data-driven, the scope and complexity of data pipelines inevitably expand. Without a well-planned architecture, these pipelines can quickly become unmanageable, often reaching a point where efficiency and transparency take a backseat, leading to operational chaos.

That’s where we step in. This article aims to shed light on the essential components of successful data pipeline architectures and offer actionable advice for creating scalable, reliable, and resilient data pipelines. Ready to fortify your data management practice? Let’s dive into the world of data pipeline architecture.

What Is Data Pipeline Architecture?

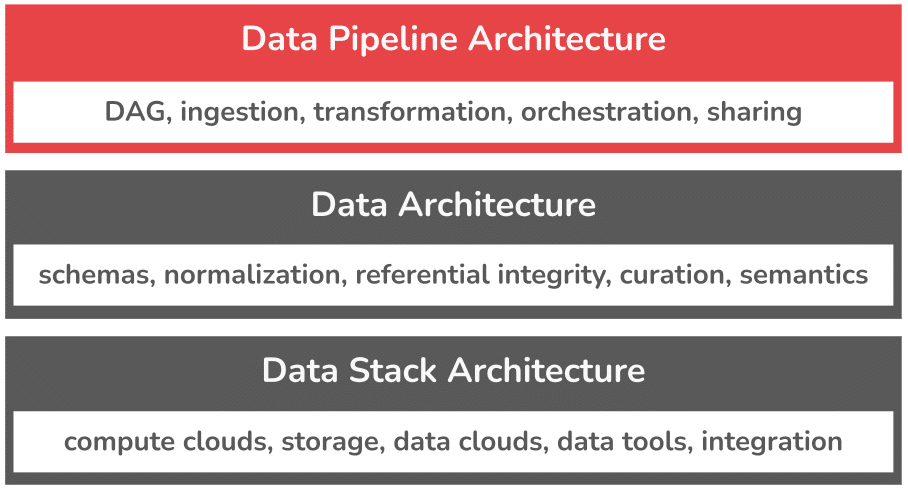

Data pipeline architecture is a framework that outlines the flow and management of data from its original source to its final destination within a system. This framework encompasses the steps of data ingestion, transformation, orchestration, and sharing. It delineates how data moves, where it goes, and what happens to it along its journey.

Now, you might ask, “How is this different from data stack architecture, or data architecture?”

- Data Stack Architecture: Your data stack architecture defines the technology and tools used to handle data, like databases, data processing platforms, analytic tools, and programming languages. It refers to the combination and integration of these technologies to gather, process, and analyze data, and the infrastructure used to power, store, and secure your data.

- Data Architecture: On the other hand, your data architecture defines the models, schemas, rules, policies, and standards that govern data across your organization. It is about designing your organization’s data assets so that they are easy to understand, keep their integrity, and can be used effectively regardless of the specific data stack.

Data pipeline architecture provides the framework that organizes and operates the processing of data throughout your data stack. It’s the backbone that supports the movement of data from one point to another, ensures its availability where and when it’s needed, and applies the computational logic to deliver data in the actionable form required by the business.

In short, the data stack architecture is the technology foundation and source of running costs; the data pipeline architecture is the source of efficiency in operational processes; the data architecture is the source of clarity and utility to the business. Many organizations intermingle these concerns and don’t maintain sufficient decoupling between them, resulting in monolithic, brittle architectures that rob them of opportunity, agility, and efficiency at scale.

Why Should You Care About Data Pipeline Architecture?

A well-planned architecture provides the decoupling that untangles data technology, operations, and business so that teams can achieve efficiency, resilience, and cost-effectiveness in developing the company’s data and decision assets. It’s the foundation that accelerates your velocity and agility in building data applications. Let’s dig deeper:

1. Harnessing Data for Insights

Data pipelines are the cornerstone of unlocking analytics, business intelligence, machine learning, and data-intensive applications. Your data pipeline architecture is the blueprint for how data is processed to unlock this value. It’s the difference between harnessing your data effectively or leaving untapped potential buried in the noise.

2. Boosting Your Bottom Line

The ROI on your pipeline investment depends on the cost of building and running your pipelines. Your data pipeline architecture directly influences these costs, from efficiently utilizing infrastructure for data ingestion and transformation to avoiding reprocessing through resilient handling of errors and failures. The architecture also significantly affects the productivity of your team and the cost-effectiveness of your data operations. Does your current architecture provide the transparency to tune and control these costs?

3. Powering Innovation

Today’s fast-paced business demands that data teams quickly build and adapt data applications, and your data pipeline architecture can either facilitate this agility or stifle it. Even an agile pipeline architecture can be undermined by clumsy integration seams in the underlying data stack, especially brittle hand-written orchestration logic that causes ongoing costs and delays in the data pipelines. How quickly can you respond to new data opportunities with your current architecture?

4. Automating Intelligence

Data automation is maturing quickly, and adopters are benefiting from faster insights and more reliable decision-making. The structure of your data pipeline architecture is a key determining factor for how far automation can be achieved in your organization. Leading organizations are implementing intelligence not only in their business analysis, but also in how their data pipelines operate. Precise orchestration, smart error correction, reliable fault detection, and ease of human intervention are just some of the capabilities that automation can bring to the table.


5. Transparency and Observability

Building trust and confidence in your data to make business-critical decisions requires more than merely building data pipelines. It requires a pipeline architecture based on complete transparency and observability for all stakeholders. Every piece of business logic, every processing sequence, and every state of data, as it flows through the pipelines, should be visible and observable. Do your data pipelines provide constant proof of what’s happening under the hood?

Data Pipeline Architecture Best Practices

Effective data pipeline architectures consist of a few core capabilities that link up to form a coherent whole, and provide just the right amount of decoupling from the data stack and the data architecture itself. Here’s a closer look at the fundamental elements to consider when composing your architecture:

1. The Main Functions of Data Pipelines

Let’s start with a simple design and add complexity only when necessary. Each element in your pipeline architecture should have a single, well-defined responsibility.

- Ingestion: Your data pipeline architecture should anticipate a wide variety of raw data sources to be incorporated into the pipeline. These include internal sources, operational systems, the databases and files provided by business partners, and third-party sources from regulators, agencies, and data aggregators.

- Transformation: Consider the programmability of the steps by which the raw data will be processed and converted into insights and data products. Breaking the transformation logic down into networks of processing steps makes it easier to design, explain, code, and debug.

- Orchestration: Pinpoint the truly critical points at which the flow through your networks of data pipelines needs to be controlled. Your architecture for these interdependencies should be based on Directed Acyclic Graph (DAG) principles.

- Observation: When it comes to being able to report and investigate operational details about your pipelines, leave no stone unturned. Pipeline lineage, live status of every operation, query-ability of interim data, error detection and notification, and visualization of logs should all be in scope. Your pipeline architecture should also provide seamless transparency into the resource consumption of the underlying data stack itself.

- Sharing: Sharing the live products of data pipelines goes beyond simply sharing access to a database table. Your architecture should include mechanisms to subscribe to the output of your pipelines, and write their output to destinations where analysts and data scientists do their work.

These basic foundations help keep the scope of your data architecture from spiraling out of control, and can contribute significantly to an efficient launch of your initial data pipelines.
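The orchestration and observation functions above can be illustrated together. The following is a minimal sketch, not a reference implementation: it assumes a hypothetical five-step pipeline expressed as a dependency map, runs the steps in an order that respects the DAG using Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+), and records a status entry per step as a very small stand-in for live operational status.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+
from typing import Callable

# Hypothetical pipeline: each step maps to the set of steps it depends on.
DEPENDENCIES: dict[str, set[str]] = {
    "ingest_orders": set(),
    "ingest_customers": set(),
    "clean_orders": {"ingest_orders"},
    "join_orders_customers": {"clean_orders", "ingest_customers"},
    "daily_revenue": {"join_orders_customers"},
}


def run_pipeline(
    dependencies: dict[str, set[str]],
    steps: dict[str, Callable[[], None]],
) -> list[tuple[str, str]]:
    """Run each step after all of its upstream steps and record its status."""
    status_log: list[tuple[str, str]] = []
    # static_order() raises graphlib.CycleError if the graph is not acyclic.
    for step in TopologicalSorter(dependencies).static_order():
        try:
            steps[step]()
            status_log.append((step, "succeeded"))
        except Exception as exc:
            status_log.append((step, f"failed: {exc}"))
            break  # halt the run so no step consumes missing or bad upstream data
    return status_log


if __name__ == "__main__":
    # Stand-in steps that only print their name; real steps would move data.
    steps = {name: (lambda n=name: print("running", n)) for name in DEPENDENCIES}
    for step, status in run_pipeline(DEPENDENCIES, steps):
        print(step, status)
```

Halting the whole run on the first failure is the simplest possible policy; a real orchestrator would more likely skip only the failed step's descendants and let independent branches finish.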

2. Address Pipeline Complexities

However, it’s crucial to realize from the beginning that with success your project will likely expand, and complexity will grow throughout this simple framework. Your data pipeline architecture should anticipate these most common sources of change and growth:

- Multiple Data Sources: However broad your initial scope of data sources might be, your architecture should be easily expandable to handle any data format, data collection method, data arrival latency or discontinuity, and level of validity. Availability of off-the-shelf connectors is important, as well as the ability to add custom programs for those hard-to-read legacy systems.

- Sequence and Dependencies: One of the trickiest areas of complexity lies in the proper order of operations throughout increasingly complex dependencies in the networks of data pipelines. Your architecture should allow for the possibility that intelligence can automate much of what you want to control, and not force you to code intricate sequences manually.

- Timing and Scheduling: As your data pipelines grow, it becomes important to control costs by running each pipeline in the network only when it is needed, and by selectively processing subsets of the data while still delivering on time to meet business needs. Include the ability to manage the execution timing in your pipeline architecture, and anticipate automation to avoid unnecessary complexity.

- Transformation Logic: Once the business begins to trust the output of your data pipelines, their appetite for more refined insights using more interesting data is likely to grow. Your architecture should be able to flex into more sophisticated processing logic, accommodating straightforward SQL statements in some steps, while seamlessly invoking Python and special libraries in others.

- Data Sharing and Activation: Anticipate growth in the distribution of transformed data to different users and applications. Your pipeline architecture should include mechanisms to write the same data to different destinations in different formats, and the ability to daisy-chain pipelines so their output is reused strategically by other teams, avoiding duplication and wasted resources.

- Engineering Intervention: Data pipelines are some of the most dynamic parts of the business, subject to revisions, changes in the data, and highly iterative development cycles. Your architecture should ease human intervention to develop and debug every aspect of the pipelines, so that error interventions can be done in minutes, and full development iterations in a matter of hours. Observability and transparency capabilities should be fully aligned to support this rapid style of work.
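The sequencing and timing concerns above both depend on knowing exactly which steps a change affects. A minimal sketch, assuming a hypothetical dependency map like the one a DAG-based orchestrator would hold: given the sources that received new data, it collects every downstream step and orders only those for execution, so unaffected pipelines are not rerun.

```python
from collections import deque
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical dependency map: step -> set of upstream steps it reads from.
DEPENDENCIES: dict[str, set[str]] = {
    "ingest_orders": set(),
    "ingest_inventory": set(),
    "clean_orders": {"ingest_orders"},
    "store_inventory_model": {"ingest_inventory", "clean_orders"},
    "promotions": {"store_inventory_model"},
    "inventory_audit": {"ingest_inventory"},
}


def downstream_of(changed: set[str], deps: dict[str, set[str]]) -> list[str]:
    """Return the changed steps plus everything downstream of them, in run order."""
    # Invert the map so we can walk from a step to the steps that consume it.
    consumers: dict[str, set[str]] = {step: set() for step in deps}
    for step, upstream in deps.items():
        for parent in upstream:
            consumers.setdefault(parent, set()).add(step)

    # Breadth-first walk from the changed steps to collect the affected set.
    affected = set(changed)
    queue = deque(changed)
    while queue:
        for child in consumers.get(queue.popleft(), ()):
            if child not in affected:
                affected.add(child)
                queue.append(child)

    # Order only the affected steps so each runs after its affected upstreams.
    sub_graph = {s: deps.get(s, set()) & affected for s in affected}
    return list(TopologicalSorter(sub_graph).static_order())
```

For example, when only `ingest_orders` receives new data, `downstream_of({"ingest_orders"}, DEPENDENCIES)` returns `['ingest_orders', 'clean_orders', 'store_inventory_model', 'promotions']`, and `inventory_audit` is never scheduled.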

3. Plan for Growth

The other common source of growth is sheer scale. As your data pipeline operation reaches more parts of the business, your pipeline architecture should be prepared to handle growth along three common dimensions without performance degradation.

- Data Volume: Ensure your architecture can scale the underlying data stack to meet the demands of increasing data volume without compromising performance. It should include mechanisms that assure efficiency by minimizing reprocessing, and maintain the integrity of all pipeline functions, including timing, scheduling, validations, transformation logic, and observability.

- Organization: As the business value of your data pipelines grows, your data pipeline architecture should anticipate more participants in the design and implementation of pipelines. This includes mature access controls, CRUD controls, sharing controls, integration of DataOps into CI/CD workflows, and usability by team members with different skill sets.

- Resources: Eventually, different data pipelines will perform better in different infrastructure environments, because different clouds provide different services. Your data pipeline architecture should be able to harness this diversity and operate across data stacks to unlock cost savings and enable unique differentiation in your pipeline processing.
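One common way to minimize reprocessing as data volume grows is to fingerprint each input partition and skip partitions whose content has not changed since the last successful run. A minimal sketch, assuming partitions are available as bytes keyed by a hypothetical partition name and using an in-memory dict where a real system would persist the fingerprints:

```python
import hashlib


def fingerprint(data: bytes) -> str:
    """Content hash used to detect whether a partition has changed."""
    return hashlib.sha256(data).hexdigest()


def plan_incremental_run(partitions: dict[str, bytes], last_seen: dict[str, str]) -> list[str]:
    """Return the partitions that are new or whose content changed since the last run."""
    return [
        name
        for name, data in sorted(partitions.items())
        if last_seen.get(name) != fingerprint(data)
    ]


def record_success(partitions: dict[str, bytes], processed: list[str], last_seen: dict[str, str]) -> None:
    """After a successful run, remember the fingerprints of what was processed."""
    for name in processed:
        last_seen[name] = fingerprint(partitions[name])


if __name__ == "__main__":
    state: dict[str, str] = {}  # in-memory stand-in for a persisted fingerprint store
    parts = {"day=2024-01-01": b"id,qty\n1,2\n", "day=2024-01-02": b"id,qty\n3,4\n"}
    todo = plan_incremental_run(parts, state)  # both partitions are new
    record_success(parts, todo, state)
    parts["day=2024-01-02"] = b"id,qty\n3,5\n"  # a late correction arrives
    print(plan_incremental_run(parts, state))  # only the corrected partition
```

Recording fingerprints only after a successful run means a failed run leaves its partitions eligible for the next attempt.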

4. Implement Data Integrity Mechanisms

Data integrity is often an afterthought, and treated as an add-on. We recommend incorporating capabilities that drive data integrity directly within your data pipeline architecture:

- Data Quality Checks: Building automated data quality checks throughout the fabric of your data pipeline architecture allows the system to detect anomalies at the moment the data is processed, and to link their detection to integrated alerting capabilities. By making these checks visible, easy to program, and easy to review, your DataOps team can regularly validate and maintain them to ensure they remain relevant as your data and business evolve.

- Monitoring and Alerting: Your architecture should inherently include real-time monitoring of every aspect of your data pipelines, plus alerting mechanisms that help your data team detect and resolve issues quickly. The more granular the monitoring and the more targeted the notifications and alerts, the better. At scale, too many notifications sent to too many people lead to alerts being ignored, and minor issues can then grow into major problems.
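To illustrate both bullets, here is a minimal sketch of quality checks declared as data, evaluated against every batch as it is processed, with failures grouped by owner so each team receives one targeted alert rather than a broadcast. The check names, owners, and row fields are hypothetical, and `route_alerts` only returns the grouped alerts; sending them is left to whatever notification channel you use.

```python
from dataclasses import dataclass
from typing import Callable

Row = dict[str, object]


@dataclass(frozen=True)
class QualityCheck:
    name: str
    owner: str  # the team that should be alerted when this check fails
    predicate: Callable[[list[Row]], bool]  # True means the batch passes


# Hypothetical checks; keeping them as data makes them easy to review and change.
CHECKS = [
    QualityCheck("non_empty_batch", "ingestion-team", lambda rows: len(rows) > 0),
    QualityCheck(
        "order_id_not_null",
        "orders-team",
        lambda rows: all(r.get("order_id") is not None for r in rows),
    ),
    QualityCheck(
        "amount_non_negative",
        "finance-team",
        lambda rows: all(float(r.get("amount", 0)) >= 0 for r in rows),
    ),
]


def run_checks(rows: list[Row], checks: list[QualityCheck]) -> list[QualityCheck]:
    """Evaluate every check against the batch and return the ones that failed."""
    return [check for check in checks if not check.predicate(rows)]


def route_alerts(failed: list[QualityCheck]) -> dict[str, list[str]]:
    """Group failed checks by owner so each team gets a single, targeted alert."""
    alerts: dict[str, list[str]] = {}
    for check in failed:
        alerts.setdefault(check.owner, []).append(check.name)
    return alerts
```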

Formally including these mechanisms in your data pipeline architecture helps avoid ad hoc code and hidden tech debt. They also provide the bedrock for clean and transparent operational solutions to validation, accuracy, reliability, and trustworthiness of your data.

These elements are the pillars on which to build an effective data pipeline architecture that is scalable, robust, and efficient, and enables your organization to extract maximum value from its data.

Types of Data Pipeline Architecture and Use Case Examples

Data pipeline architecture can vary greatly depending on the specifics of the use case and the core components outlined above. The most common source of variation arises from the differences between batch and real-time processing requirements.

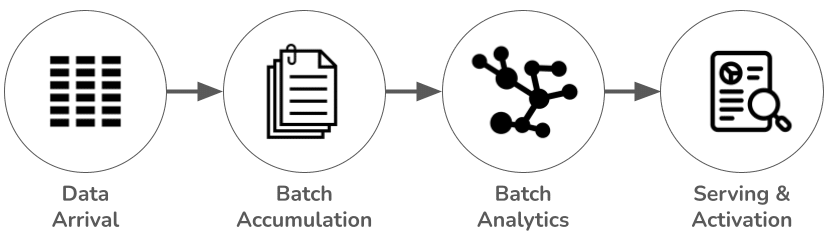

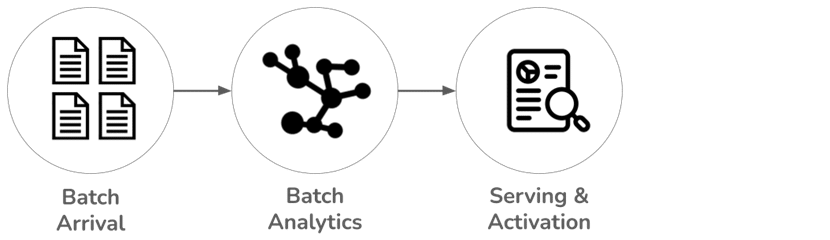

Batch Processing Architecture

Batch processing is a data pipeline architecture where data is collected over certain time intervals, and then processed through the pipeline in batches, often on a scheduled basis. This architecture is commonly employed when real-time insights are not critical, and when the logic of the analytics requires data that spans longer periods of time.

Use Case Example: An e-commerce company seeks to understand user behavior and purchase patterns over the course of a day. By analyzing data accumulated over the entire day, and then comparing patterns between days, important insights emerge that can be translated into advertising, promotion, and dynamic pricing strategies that can improve sales and revenue goals.
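A minimal batch-style sketch of the e-commerce example: accumulated purchase events are summarized per day in one scheduled pass, and consecutive days are then compared. The event fields (`user_id`, `ts` as an ISO 8601 string, `amount`) and the chosen metrics are assumptions for illustration.

```python
from collections import defaultdict
from datetime import datetime


def summarize_by_day(events: list[dict]) -> dict[str, dict[str, float]]:
    """Aggregate one batch of purchase events into per-day metrics, sorted by day."""
    revenue: dict[str, float] = defaultdict(float)
    orders: dict[str, int] = defaultdict(int)
    buyers: dict[str, set] = defaultdict(set)
    for e in events:
        day = datetime.fromisoformat(e["ts"]).date().isoformat()
        revenue[day] += e["amount"]
        orders[day] += 1
        buyers[day].add(e["user_id"])
    return {
        day: {
            "revenue": revenue[day],
            "orders": orders[day],
            "unique_buyers": len(buyers[day]),
            "avg_order_value": revenue[day] / orders[day],
        }
        for day in sorted(revenue)
    }


def day_over_day(summary: dict[str, dict[str, float]], metric: str = "revenue") -> dict[str, float]:
    """Change in one metric between each day and the previous day in the summary."""
    days = list(summary)
    return {d2: summary[d2][metric] - summary[d1][metric] for d1, d2 in zip(days, days[1:])}
```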

Micro-Batch Processing Architecture

This approach leverages the economies of batch processing infrastructure while still delivering near-real-time insights to the business. Ingestion schedules can cycle as frequently as every 2–5 minutes, and end-to-end processing of the pipelines often completes within 5–15 minutes, depending on the complexity of the transformation logic.

Use Case Example: An advertising agency seeks to boost shopping basket sizes in the physical stores of a national retail chain. By matching the morning’s inventory data in each store with the shopping preferences and propensity of each known customer to visit the store that day, it targets promotions via the brand’s apps and text messages throughout the day. The brand sends store checkout data to the agency every 10 minutes, which the agency uses to update the inventory model for every store and adjust the promotions toward in-stock items.
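A minimal sketch of the agency's per-delivery update, assuming each 10-minute checkout delivery arrives as a list of hypothetical `{store, sku, qty}` records and that the inventory model is simply units on hand per store and SKU. Each call to `apply_checkout_batch` processes one micro-batch; promotions are then limited to items still in stock.

```python
from collections import Counter

Inventory = dict[tuple[str, str], int]  # (store_id, sku) -> units on hand


def apply_checkout_batch(inventory: Inventory, checkouts: list[dict]) -> Inventory:
    """Return a new inventory snapshot after subtracting one micro-batch of sales."""
    sold: Counter = Counter()
    for c in checkouts:
        sold[(c["store"], c["sku"])] += c["qty"]
    updated = dict(inventory)
    for key, qty in sold.items():
        # Clamp at zero so inaccurate opening counts never produce negative stock.
        updated[key] = max(updated.get(key, 0) - qty, 0)
    return updated


def promotable_skus(inventory: Inventory, store: str, candidates: list[str]) -> list[str]:
    """Keep only the candidate promotions that are still in stock at the store."""
    return [sku for sku in candidates if inventory.get((store, sku), 0) > 0]
```

Returning a new snapshot instead of mutating the old one makes it easy to keep the morning baseline alongside each intra-day update.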

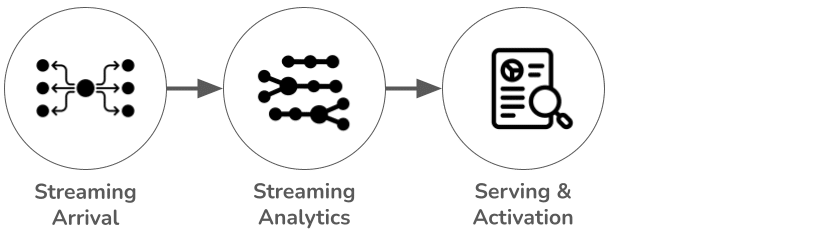

Real-time Processing Architecture

Real-time processing involves continuously ingesting and processing data as it arrives, often within milliseconds or seconds. This approach is crucial in use cases where immediate insights or actions are necessary. As a tradeoff, processing costs can be orders of magnitude higher than with batch or micro-batch architectures.

Use Case Example: A credit card company seeks to detect potential fraud activity at the time that transactions are submitted for clearance. As each transaction occurs, it is instantly analyzed for signs of fraud, and suspicious activities are flagged in real-time.
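A minimal sketch of per-event processing for the fraud example: each transaction is evaluated the moment it arrives, using only a small amount of recent per-card state. The two rules (a single-transaction amount limit and a burst of transactions within 60 seconds) and their thresholds are hypothetical placeholders, not a real fraud model.

```python
from collections import defaultdict, deque
from dataclasses import dataclass


@dataclass(frozen=True)
class Transaction:
    card_id: str
    amount: float
    ts: float  # event time in seconds


class FraudScreen:
    """Evaluate each transaction on arrival, keeping only recent per-card history."""

    def __init__(self, max_amount: float = 5_000.0, burst_count: int = 3,
                 burst_window_s: float = 60.0) -> None:
        self.max_amount = max_amount  # hypothetical single-transaction limit
        self.burst_count = burst_count  # hypothetical "too many, too fast" threshold
        self.burst_window_s = burst_window_s
        self.recent: dict[str, deque] = defaultdict(deque)

    def process(self, tx: Transaction) -> list[str]:
        """Return the reasons this transaction looks suspicious (empty list if none)."""
        window = self.recent[tx.card_id]
        # Evict timestamps that have slid out of this card's time window.
        while window and tx.ts - window[0] > self.burst_window_s:
            window.popleft()
        window.append(tx.ts)

        reasons: list[str] = []
        if tx.amount > self.max_amount:
            reasons.append("amount_over_limit")
        if len(window) >= self.burst_count:
            reasons.append("burst_of_transactions")
        return reasons
```

Bounding the state to a short window per card keeps the per-event work small, which matters when every transaction must be scored before clearance.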

Specialty Architectures

The three predominant architectures above are occasionally insufficient for very large data teams, especially where vast varieties of data are in play and many millions can be invested in infrastructure and capabilities. For these situations, some additional patterns have emerged.

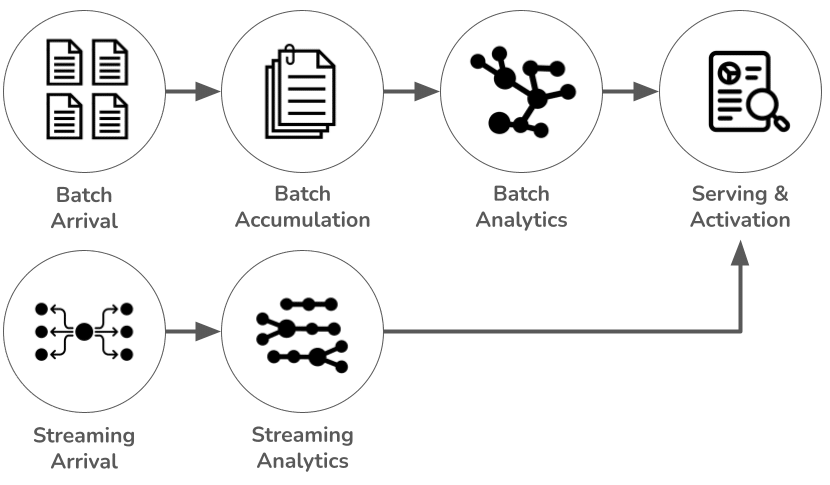

Lambda Architecture

Lambda architecture combines the strengths of batch and real-time processing. It consists of two processing layers: the batch layer that incrementally processes large data batches for comprehensive insights, and the stream layer that handles real-time data for immediate analysis. The outputs of both are merged and served through a serving layer. A key benefit is offloading non-real-time analytics to the far lower operating cost of a batch processing data stack.

Use Case Example: A ride-hailing application processes historical trip data in the batch layer to identify long-term trends about driver locations at different times of day, while the stream layer matches current driver locations to passenger requests. The serving layer combines the two analyses for immediate dispatching decisions.
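A minimal sketch of how a serving layer can merge the two layers in the ride-hailing example, assuming counts of driver sightings keyed by a hypothetical `(zone, hour)` pair: the batch layer periodically replaces a complete historical view, the stream layer increments a speed view as events arrive, and queries add the two.

```python
from collections import Counter
from typing import Hashable, Mapping


class LambdaServingLayer:
    """Answer queries by merging a periodic batch view with a real-time speed view."""

    def __init__(self) -> None:
        self.batch_view: Counter = Counter()  # replaced wholesale by each batch run
        self.speed_view: Counter = Counter()  # incremented by the stream layer

    def load_batch_view(self, historical_counts: Mapping[Hashable, int]) -> None:
        """Install a freshly computed batch view.

        Simplification: assumes every event counted in the speed view is covered
        by the new batch run's cutoff, so the speed view can be reset.
        """
        self.batch_view = Counter(historical_counts)
        self.speed_view.clear()

    def on_stream_event(self, key: Hashable) -> None:
        """Stream layer: count a new event (e.g. a driver seen in a zone) immediately."""
        self.speed_view[key] += 1

    def query(self, key: Hashable) -> int:
        """Serving layer: combine historical and recent counts for one key."""
        return self.batch_view[key] + self.speed_view[key]
```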

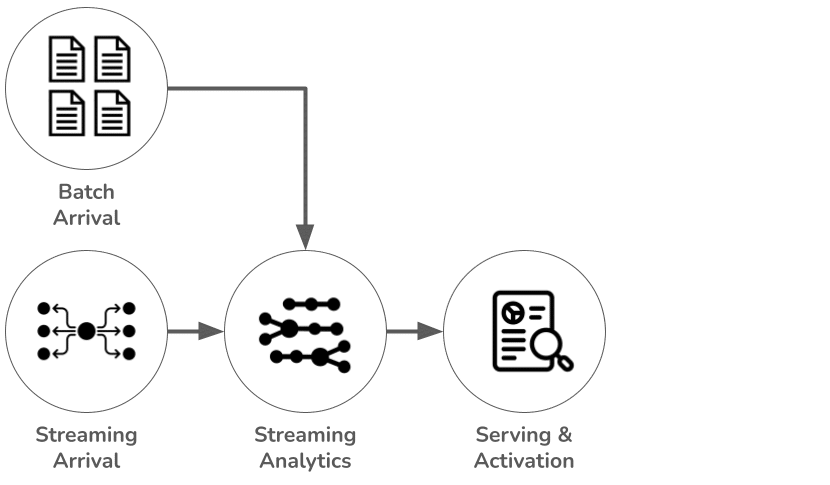

Kappa Architecture

Kappa architecture simplifies Lambda by treating all data as a stream, including data that arrives in batches. A key benefit is reduced system complexity, since all processing runs on a single data stack.

Use Case Example: A social media platform processes all user posts and likes as real-time data streams, performing basic summary analytics that are instantly provided back to users in a continually updated feed. The platform also generates aggregated trend analytics for its advertising engine.
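A minimal sketch of the Kappa idea, assuming a hypothetical event shape with `type` and `post_id` fields: one processing function handles every event, and historical data is handled by replaying it through that same function rather than through a separate batch code path.

```python
import itertools
from collections import Counter
from typing import Iterable, Iterator


def process_stream(events: Iterable[dict]) -> Iterator[tuple[str, int]]:
    """Single code path: emit the updated like count for a post on every like event."""
    likes: Counter = Counter()
    for event in events:
        if event["type"] == "like":
            likes[event["post_id"]] += 1
            yield event["post_id"], likes[event["post_id"]]


def replay_then_follow(history: Iterable[dict], live: Iterable[dict]) -> Iterator[tuple[str, int]]:
    """Rebuild state by replaying stored events, then continue with live events."""
    return process_stream(itertools.chain(history, live))
```

Because history and live events share one generator, processing logic is written once, and reprocessing after a logic change amounts to replaying the stored events.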

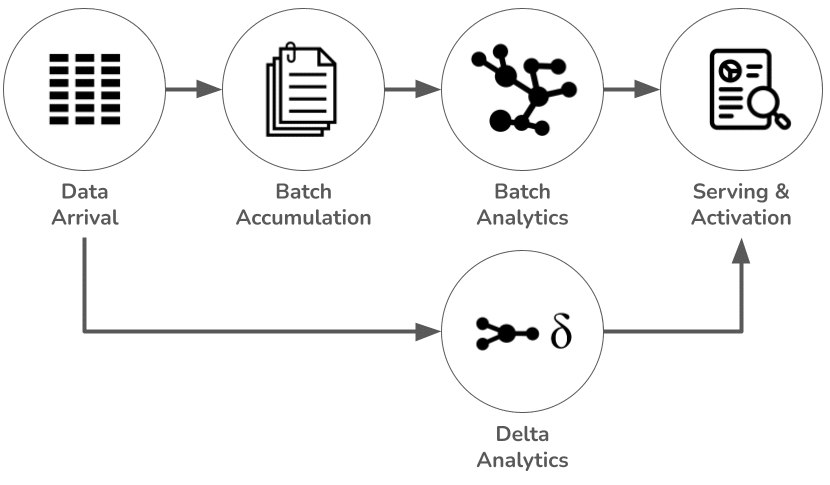

Delta Architecture

Delta architecture builds on a Lambda architecture: changes are captured in the streaming layer and then reconciled with baseline data processed in the batch layer. This allows the data to be modified to “true up” before it reaches the serving layer, which enhances the accuracy and reliability of the real-time outputs.

Use Case Example: An online advertising platform provides near real-time ad targeting based on long-term user behavior trends, adjusted for the user’s most recent browsing activity to ensure that the targeting is relevant and engaging in the moment.
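A minimal sketch of the “true up” step in the advertising example, assuming a hypothetical model of per-user, per-category interest scores: the batch layer supplies a baseline computed up to a cutoff time, and only streamed changes newer than that cutoff are applied, so no change is counted twice.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Change:
    user_id: str
    category: str
    delta: float  # change in interest score from recent browsing
    ts: float  # event time in seconds


def true_up(
    baseline: dict[tuple[str, str], float],
    baseline_cutoff: float,
    changes: list[Change],
) -> dict[tuple[str, str], float]:
    """Merge streamed changes into the batch baseline without double counting."""
    merged = dict(baseline)  # leave the batch-layer output untouched
    for ch in changes:
        if ch.ts <= baseline_cutoff:
            continue  # already reflected in the batch-computed baseline
        key = (ch.user_id, ch.category)
        merged[key] = merged.get(key, 0.0) + ch.delta
    return merged
```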

Each data pipeline architecture has its strengths and weaknesses. The choice largely depends on the specific requirements of your data processing tasks, such as the need for real-time processing, data volume, latency tolerance, and complexity of data transformation and analysis. It also has implications for the underlying data stack, operating costs, and depth of analytics that can be performed with each.

Shifting to a Platform Approach for Data Pipeline Architecture

Treating each new analytical task as a project and crafting new pipelines for each one usually results in costly redundancy, operational inefficiency, and increased complexity. Each new cycle reinvents the wheel, and maintenance activity quickly causes the code base to fragment into irreconcilable tech debt.

The solution lies in shifting your perspective to embrace a platform approach to implementing your data pipeline architecture. This means the use of an end-to-end platform that implements the best practices, accommodates the complexities, and anticipates the growth of your data operations as the use of data in business decisions grows and diversifies.

The use of a data pipeline platform significantly reduces the complexity of constructing and managing data pipelines. It provides seamless integration between functions, efficiently manages the cloud resources in the underlying data stacks, enhances transparency, and encourages collaboration among teams. It also supports the evolution of teams, skill sets, security, and growing collaboration between technologists and the business.

In conclusion, opting for a platform approach extends beyond simply managing your data pipelines; it encompasses strategic planning for the future growth and resilience of your business. Implementing your data pipeline architecture with a platform approach provides the long-term efficiency and agility that supports all aspects of your business operations.
