Because of its transformative potential, data has graduated from being merely a by-product of business operations to a critical asset in its own right. But what does a business need to do in order to leverage this asset? Is it possible to treat data not just as a necessary operational output, but as a product that holds immense strategic value? The answer is a resounding yes.

Treating data as a product is more than a concept; it’s a paradigm shift that can significantly increase the value that business intelligence and data-centric decision-making deliver to the business. However, transforming data into a product that delivers outsized business value requires more than a mission statement; it requires a solid foundation of technical capabilities and a truly data-centric culture.

In this blog post, we’ll cover the vital capabilities required to treat data as a product and the enabling elements necessary for fully realizing the benefits of a data product approach.

The Essential Six Capabilities

To set the stage for impactful and trustworthy data products in your organization, you need to invest in six foundational capabilities.

- Data pipelines

- Data integrity

- Data lineage

- Data stewardship

- Data catalog

- Data product costing

Let’s review each one in detail.

Data Pipelines

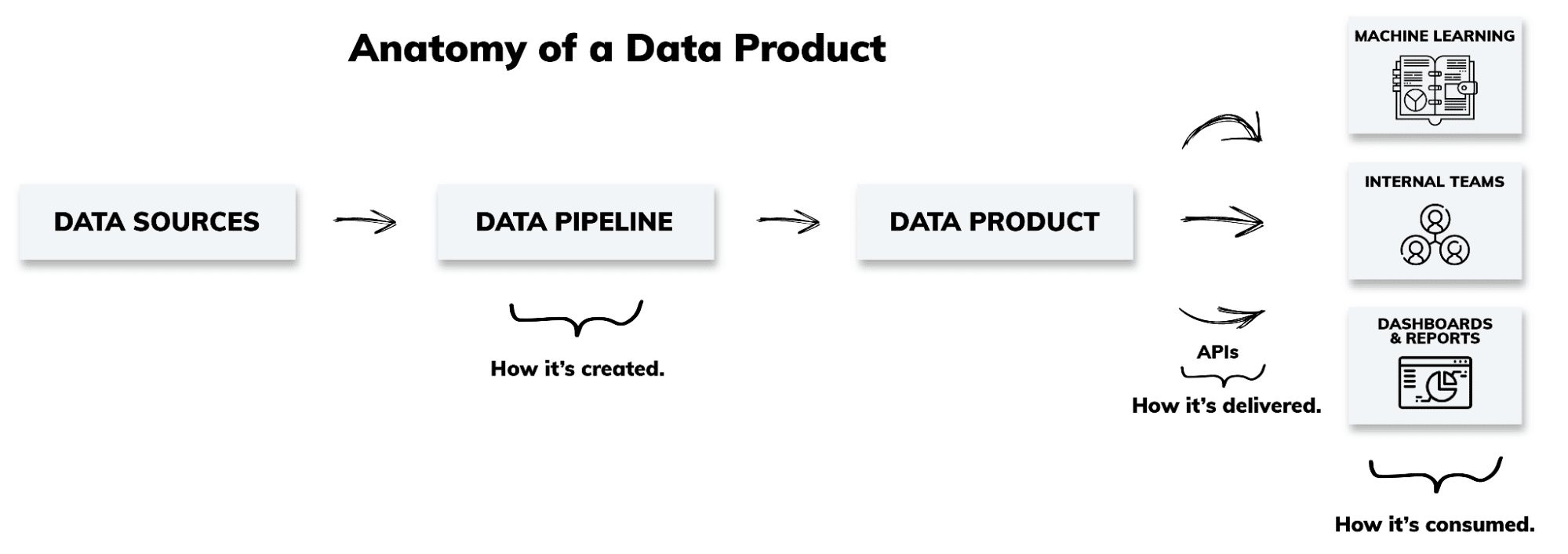

Data pipelines are the indispensable backbone for the creation and operation of every data product. Without them, data products can’t exist. Acting as the core infrastructure, data pipelines include the crucial steps of data ingestion, transformation, and sharing.

Data Ingestion

Data in today’s businesses comes from an array of sources, including various clouds, APIs, warehouses, and applications. This multitude of sources often results in a dispersed, complex, and poorly structured data landscape. Data ingestion, the first step of every data pipeline, must span all of these sources regardless of origin, which makes it a critical part of the data product lifecycle.

Without robust ingestion, the risk of introducing bad data into the pipelines is high, compromising the entire system. The results include bad business decisions and lengthy remediation cycles that bog down data teams with extra work and increase computing costs. The right strategic approach to data ingestion sets the stage for the creation of high-quality, reliable data products.
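To make this concrete, here is a minimal Python sketch of defensive ingestion, assuming two hypothetical sources (`crm_api` and `warehouse`) that name the same customer fields differently. Each record is mapped onto one canonical shape, and records from unknown sources or with missing required fields are quarantined rather than passed downstream. The source names, field mappings, and the `ingest` function are illustrative assumptions, not part of any particular tool.

```python
from dataclasses import dataclass, field
from typing import Any

# Hypothetical per-source mappings: raw field name -> canonical field name.
FIELD_MAPS: dict[str, dict[str, str]] = {
    "crm_api": {"customerId": "customer_id", "emailAddress": "email"},
    "warehouse": {"CUST_ID": "customer_id", "EMAIL": "email"},
}
REQUIRED = ("customer_id", "email")


@dataclass
class IngestResult:
    accepted: list[dict[str, Any]] = field(default_factory=list)
    # (source, raw record, reason) for everything held back from the pipeline
    quarantined: list[tuple[str, dict[str, Any], str]] = field(default_factory=list)


def ingest(batches: dict[str, list[dict[str, Any]]]) -> IngestResult:
    """Normalize records from every source; quarantine those that fail basic checks."""
    result = IngestResult()
    for source, records in batches.items():
        mapping = FIELD_MAPS.get(source)
        for rec in records:
            if mapping is None:
                # Unknown source: hold the record back rather than guess its schema.
                result.quarantined.append((source, rec, "unknown source"))
                continue
            row = {canonical: rec.get(raw) for raw, canonical in mapping.items()}
            missing = [k for k in REQUIRED if row.get(k) in (None, "")]
            if missing:
                result.quarantined.append((source, rec, f"missing {missing}"))
            else:
                row["_source"] = source  # keep provenance for lineage
                result.accepted.append(row)
    return result


res = ingest({
    "crm_api": [{"customerId": 1, "emailAddress": "a@example.com"}],
    "warehouse": [{"CUST_ID": 2, "EMAIL": ""}],
    "legacy_ftp": [{"id": 3}],
})
print(len(res.accepted), len(res.quarantined))  # 1 2
```

Quarantining instead of silently dropping keeps bad records inspectable, so they can be fixed at the source rather than rediscovered downstream.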

Data Transformation

The second step in building a data pipeline is data transformation, where the ingested data is combined, refined, filtered, and readied for consumption by decision systems. This series of operations may assess data quality, remove inconsistencies, filter out errors, handle missing values, and more. These operations also ensure that the data conforms to the required formats and quality standards, so it is ready for downstream systems or for consumption by machine learning algorithms.

Data transformation isn’t limited to just quality enhancement. It is also where data is consolidated, aggregated, or restructured to create more coherent, insightful snapshots of information. Whether it’s the creation of new data combinations or simply restructuring data sets for better understandability, data transformation is key to unlocking the full potential of the data.
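The sketch below illustrates both halves of this step in Python, assuming hypothetical raw order records with `order_id`, `region`, and `amount` fields: a `clean` function that filters out errors and handles missing or inconsistent values, followed by an aggregation into a per-region revenue snapshot. The field names and the rule that negative amounts are invalid are assumptions made for illustration.

```python
from collections import defaultdict
from typing import Any, Optional


def clean(row: dict[str, Any]) -> Optional[dict[str, Any]]:
    """Refine one raw order row; return None to filter it out."""
    try:
        amount = float(row["amount"])
    except (KeyError, TypeError, ValueError):
        return None  # absent or unparseable amount: treat as an error and drop it
    if amount < 0:
        return None  # assumed business rule: negative totals are invalid
    # Handle missing values and inconsistent spelling of the region.
    region = (row.get("region") or "unknown").strip().lower()
    return {"order_id": row.get("order_id"), "region": region, "amount": amount}


def revenue_by_region(rows: list[dict[str, Any]]) -> dict[str, float]:
    """Clean and filter each row, then aggregate into a per-region snapshot."""
    totals: defaultdict[str, float] = defaultdict(float)
    for raw in rows:
        row = clean(raw)
        if row is not None:
            totals[row["region"]] += row["amount"]
    return dict(sorted(totals.items()))


raw_orders = [
    {"order_id": 1, "region": " East ", "amount": "19.50"},
    {"order_id": 2, "region": None, "amount": 5},
    {"order_id": 3, "region": "east", "amount": "oops"},
    {"order_id": 4, "region": "West", "amount": -3},
    {"order_id": 5, "region": "west", "amount": 10},
]
print(revenue_by_region(raw_orders))  # {'east': 19.5, 'unknown': 5.0, 'west': 10.0}
```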

Data Sharing

Once data has been ingested and processed through sequences of transformations, it enters the final step in the data pipeline – data sharing. In this step, we can consider the prepared data flows to be data products, made available to the relevant stakeholders, who turn them into actionable insights.

Data sharing goes beyond simply making the data available. It can be thought of as the final “packaging” and dissemination of the data in a format that meets the specifications of different stakeholders and is accessible, understandable, and usable across the organization. Whether it be the marketing team seeking customer insights, the finance team working on budgeting, or executives crafting business strategies, data needs to be shared in a manner that aligns with their specific objectives and competencies.

While many data teams simply drop the data into a data warehouse table for others to consume, in the grand scheme of data as a product, explicit data sharing plays a pivotal role. It is the stage where data truly becomes a product, delivering tangible value to its end users.
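One way to make sharing explicit, rather than leaving a table in the warehouse, is to publish each data product with a declared contract and metadata and to package views per consumer. The Python sketch below assumes a hypothetical `DataProduct` class with a `publish` method that returns either a JSON payload (data plus product name, version, and owner) or a CSV view for spreadsheet users; the class, field names, and formats are illustrative, not a specific platform's API.

```python
import csv
import io
import json
from dataclasses import dataclass
from typing import Any


@dataclass(frozen=True)
class DataProduct:
    name: str
    version: str
    owner: str                      # accountable data steward
    columns: tuple[str, ...]        # the published contract
    rows: tuple[dict[str, Any], ...]

    def publish(self, columns: list[str], fmt: str = "json") -> str:
        """Package a consumer-specific view of the product in the requested format."""
        unknown = set(columns) - set(self.columns)
        if unknown:
            raise ValueError(f"{self.name} has no columns {sorted(unknown)}")
        view = [{c: r.get(c) for c in columns} for r in self.rows]
        if fmt == "json":
            # JSON carries product metadata alongside the data itself.
            return json.dumps({"product": self.name, "version": self.version,
                               "owner": self.owner, "rows": view})
        if fmt == "csv":
            buf = io.StringIO()
            writer = csv.DictWriter(buf, fieldnames=columns)
            writer.writeheader()
            writer.writerows(view)
            return buf.getvalue()
        raise ValueError(f"unsupported format: {fmt}")


revenue = DataProduct(
    name="regional_revenue", version="1.2.0", owner="finance-steward",
    columns=("region", "revenue", "order_count"),
    rows=({"region": "east", "revenue": 19.5, "order_count": 1},
          {"region": "west", "revenue": 10.0, "order_count": 1}),
)
print(revenue.publish(["region", "revenue"], fmt="csv"))   # spreadsheet-friendly view
print(json.loads(revenue.publish(["region"]))["version"])  # 1.2.0
```

Rejecting columns outside the declared contract keeps consumers from depending on fields the steward never agreed to maintain.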

Data Integrity

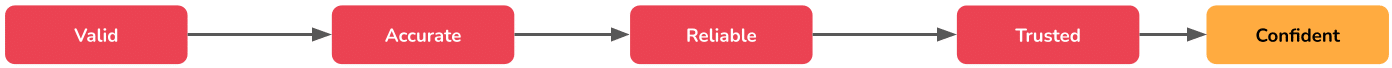

Cultivating a data-centric culture means getting business users to trust and use the data. Anyone using the company’s data products to make decisions should feel confident that they are accurate, meet quality requirements, and are maintained consistently across the business.

Investments in data products only pay off when the products are used to fuel analysis, modeling, decision systems, and machine learning algorithms. To ensure data integrity, data engineers should build operations into their data pipelines that validate that data correctly reflects its intended business meaning, is in the correct format, has a valid schema, is stored securely, has no missing values, and arrives on the proper cadence.
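Several of these checks are easy to express as a validation step in a pipeline. The Python sketch below assumes a hypothetical `orders` contract (column names mapped to expected Python types) and a daily cadence; it covers schema, format, missing values, and freshness, but not secure storage or business-rule checks, which depend on your platform and domain.

```python
from datetime import datetime, timedelta, timezone
from typing import Any

# Hypothetical contract for an "orders" data product: column -> expected Python type.
SCHEMA: dict[str, type] = {"order_id": int, "customer_id": int, "amount": float}
MAX_AGE = timedelta(hours=24)  # assumed cadence: a fresh batch at least once a day


def validate_batch(rows: list[dict[str, Any]], loaded_at: datetime,
                   now: datetime) -> list[str]:
    """Return integrity violations for one batch; an empty list means it passed."""
    problems: list[str] = []
    for i, row in enumerate(rows):
        extra = set(row) - set(SCHEMA)
        if extra:  # schema check: columns the contract does not declare
            problems.append(f"row {i}: unexpected columns {sorted(extra)}")
        for col, typ in SCHEMA.items():
            value = row.get(col)
            if value is None:  # missing-value check
                problems.append(f"row {i}: missing {col}")
            elif not isinstance(value, typ):  # format/type check
                problems.append(f"row {i}: {col} should be {typ.__name__}")
    if now - loaded_at > MAX_AGE:  # cadence (freshness) check
        problems.append(f"stale batch: loaded {now - loaded_at} ago")
    return problems


now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
good = [{"order_id": 1, "customer_id": 7, "amount": 19.5}]
bad = [{"order_id": 2, "amount": "19.5", "coupon": "X"}]
print(validate_batch(good, loaded_at=now - timedelta(hours=1), now=now))  # []
for problem in validate_batch(bad, loaded_at=now - timedelta(days=2), now=now):
    print(problem)
```

Returning a list of violations, rather than raising on the first one, lets the pipeline decide whether to block, quarantine, or merely alert.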

Data Lineage

Maintaining data lineage means tracking how data flows from its sources, through every transformation, to the consumption of the data product. With clear visibility into the complete lineage of their data products, end users can act as confident data stewards: they can audit pipelines easily and make sure their data products are functioning as designed, including ingesting, transforming, and merging the data correctly. Data lineage also helps engineers identify which data pipelines are good candidates for reuse to reduce processing costs, and it supports root cause analysis when problems crop up.

Think of data lineage like a step-by-step assembly manual for a piece of furniture: at each step you can see what changes are made to the result of the previous step, and you can trace where every component ends up in the final product. A data lineage diagram shows the full list of changes in just a few clicks. Although point solutions for data lineage exist, lineage capabilities that are fully integrated into the data pipelines make better use of operational metadata and produce higher-quality, more timely reports.
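Under the hood, lineage is a directed graph from sources to products. The Python sketch below assumes a hypothetical lineage map in which each node lists the upstream nodes it is built from. `trace` walks it depth-first to produce the assembly manual for a product (every step, listed after its inputs), and `root_sources` returns the original sources, which is where a root cause investigation typically ends up. The node names are made up, and the recursion assumes the graph has no cycles.

```python
# Hypothetical lineage for a "churn_features" data product:
# each node maps to the upstream nodes it is built from.
LINEAGE: dict[str, list[str]] = {
    "crm.customers": [],
    "billing.invoices": [],
    "clean_customers": ["crm.customers"],
    "monthly_spend": ["billing.invoices"],
    "churn_features": ["clean_customers", "monthly_spend"],
}


def trace(node: str, lineage: dict[str, list[str]]) -> list[str]:
    """Every step that feeds `node`, each listed after all of its inputs."""
    ordered: list[str] = []
    seen: set[str] = set()

    def visit(n: str) -> None:
        if n in seen:
            return
        seen.add(n)
        for parent in lineage.get(n, []):
            visit(parent)
        ordered.append(n)  # appended only once its inputs are already listed

    visit(node)
    return ordered


def root_sources(node: str, lineage: dict[str, list[str]]) -> list[str]:
    """Original sources a product depends on: where root cause analysis ends up."""
    return [n for n in trace(node, lineage) if not lineage.get(n)]


print(trace("churn_features", LINEAGE))
# ['crm.customers', 'clean_customers', 'billing.invoices', 'monthly_spend', 'churn_features']
print(root_sources("churn_features", LINEAGE))  # ['crm.customers', 'billing.invoices']
```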

Data Stewardship

Every company, and even each department within a company, defines even the most common data elements differently across datasets. For example, an “Address” field can mean the delivery address in a shipping record, or the billing address in a payment record, or a residence address in a geospatial record, all associated with the same customer. These varying definitions stem from differences in the format, function, origin, and practical use of the data.

Because data processing is usually a highly technical endeavor, companies often put data engineering teams in charge of standardizing these definitions. That’s a mistake, because engineers are much further removed than subject matter experts (SMEs) from both how the data is actually produced during business operations and how data products are used to make business decisions. The result is frustrated end users who resort to munging the data themselves to make it useful.

Enter data stewardship. Data stewards are experts in the operations of a particular business unit, such as HR, accounting, or product, who know what data is needed for which decision and how it should be structured for business use and analysis. Each data steward takes responsibility for formalizing specific data product definitions and works with data engineers to ensure those products are structured in a way that yields meaningful insights for the rest of the business. This makes data stewards the best contributors to the content of data catalogs.
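The “Address” example above suggests one simple way to record steward-owned definitions: key each definition by business term and context, and let only the owning steward change it. The Python sketch below is a hypothetical glossary structure, not a particular catalog product's data model; the class names, contexts, and steward identifiers are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Definition:
    term: str
    context: str   # business process the definition applies to
    meaning: str
    steward: str   # accountable subject matter expert


class Glossary:
    """Steward-owned business definitions, keyed by (term, context)."""

    def __init__(self) -> None:
        self._defs: dict[tuple[str, str], Definition] = {}

    def register(self, d: Definition) -> None:
        key = (d.term.lower(), d.context.lower())
        existing = self._defs.get(key)
        if existing is not None and existing.steward != d.steward:
            # Only the owning steward may redefine a term in their context.
            raise PermissionError(f"{key} is owned by {existing.steward}")
        self._defs[key] = d

    def lookup(self, term: str, context: str) -> Definition:
        return self._defs[(term.lower(), context.lower())]

    def contexts(self, term: str) -> list[str]:
        return sorted(c for (t, c) in self._defs if t == term.lower())


glossary = Glossary()
glossary.register(Definition("Address", "shipping", "Where an order is delivered", "logistics-steward"))
glossary.register(Definition("Address", "payment", "Billing address of the payer", "finance-steward"))
print(glossary.contexts("address"))                   # ['payment', 'shipping']
print(glossary.lookup("Address", "payment").steward)  # finance-steward
```

Keying on context rather than forcing a single company-wide meaning lets each steward own the definition that matches their business process.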

Data Catalog

Analysts and ML practitioners usually have a good idea of the data they want to use for their models and for decision-making, but they lack the detailed knowledge that data stewards have about which data product meets those needs. Data catalogs help them locate the best candidates and formalize requests for data products that don’t yet exist. Data catalogs are also an efficient way to vet datasets with data stewards and make them broadly available, so stakeholders can discover and self-serve the data they need and review its attributes and lineage to confirm their choices.

For instance, a stakeholder looking for a database table listing the membership of various organizations should be able to search the data catalog using “member” as a keyword, along with the names of attributes that need to be built into the dataset in order to filter it for their specific use. The results should include membership datasets that fit the criteria, and show details like who the data stewards are, where the data came from, a full lineage of how it was transformed, and when it was last updated.

Creating a data catalog like this takes some technical effort, but more importantly, it requires solid data stewardship to maintain the business definitions and business process associations of each data product. People won’t be able to find the data products they need without accurate tagging and descriptions, leading to costly redundant data pipelines, conflicting data products, and confusion across the business. Good data stewardship and healthy data catalogs are worthwhile investments.
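The membership search described above can be sketched as a keyword match plus an attribute filter over catalog entries that carry steward, source, lineage, and freshness metadata. This Python version assumes a hypothetical in-memory `CatalogEntry` record and illustrative entries; a real catalog would add ranking, permissions, and a proper search index.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class CatalogEntry:
    name: str
    description: str
    attributes: frozenset[str]
    stewards: tuple[str, ...]
    sources: tuple[str, ...]
    lineage: tuple[str, ...]  # transformation steps, sources first
    last_updated: date


def search(catalog: list[CatalogEntry], keyword: str,
           required_attrs: set[str]) -> list[CatalogEntry]:
    """Entries mentioning `keyword` that carry every required attribute, freshest first."""
    kw = keyword.lower()
    hits = [
        e for e in catalog
        if (kw in e.name.lower() or kw in e.description.lower())
        and required_attrs <= e.attributes
    ]
    return sorted(hits, key=lambda e: e.last_updated, reverse=True)


catalog = [
    CatalogEntry("org_membership", "Current members of each partner organization",
                 frozenset({"org_id", "member_id", "joined_on", "status"}),
                 ("membership-steward",), ("crm.contacts",),
                 ("crm.contacts", "dedupe_contacts", "org_membership"), date(2024, 5, 1)),
    CatalogEntry("member_events", "Event attendance by member",
                 frozenset({"member_id", "event_id"}),
                 ("events-steward",), ("events.api",),
                 ("events.api", "member_events"), date(2024, 4, 2)),
]
for entry in search(catalog, "member", {"org_id", "status"}):
    print(entry.name, entry.stewards, entry.lineage, entry.last_updated)
```

The search is only as good as the descriptions and attribute tags stewards maintain, which is why catalog quality depends so heavily on stewardship.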

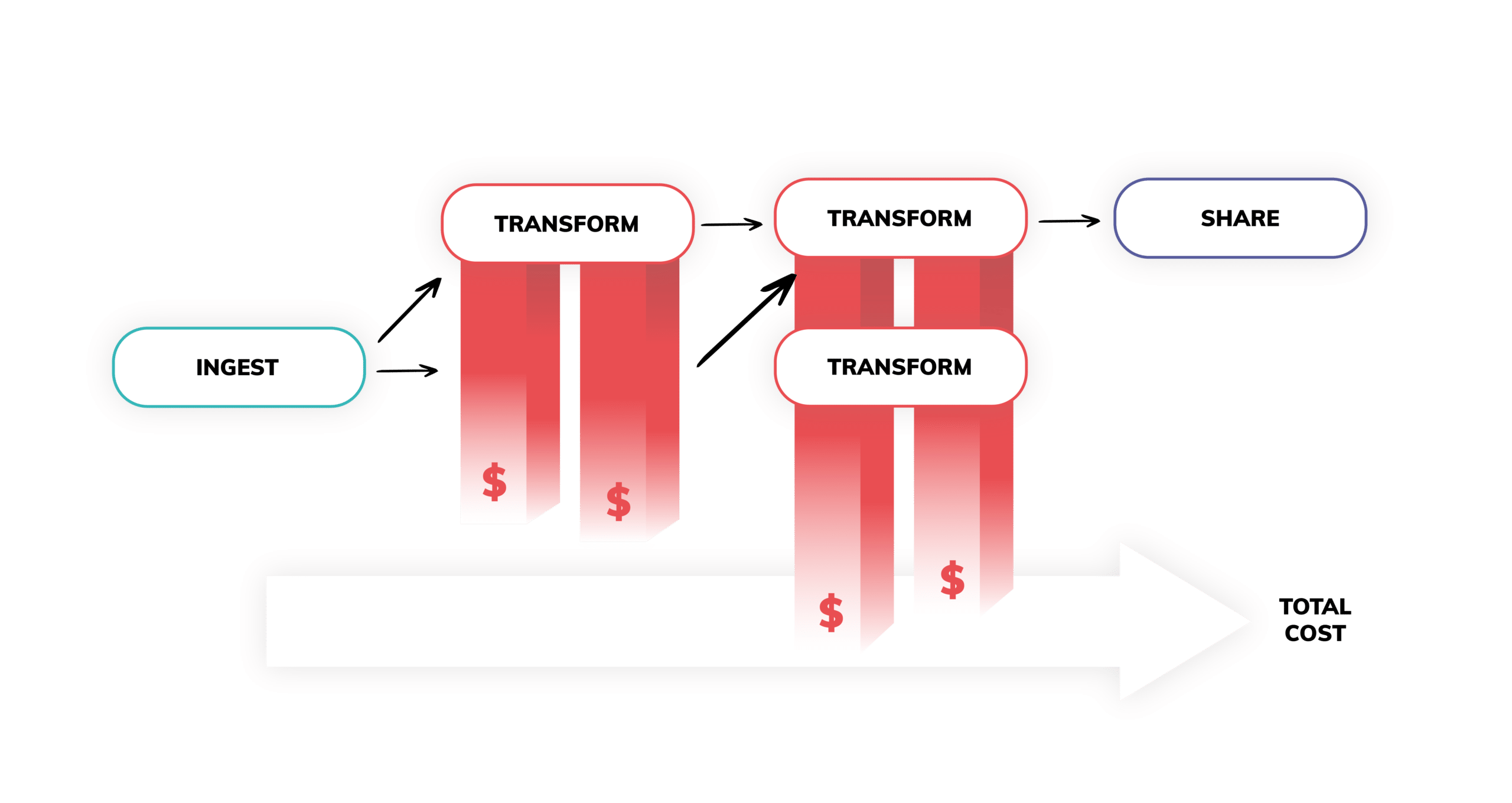

Data Product Costing

With growing data volumes and the continued adoption of AI and ML in business, data processing costs are becoming a large part of company budgets. Executives are turning to engineering teams to understand these expenses and help increase the returns on them. To build and operate cost-effective data products, data engineers must be able to detect costly hotspots, streamline their pipelines, and prioritize data products based on cost and value to the business.

For example, if a data product is used to determine where and when a company’s ads should be placed, engineers need to be able to weigh the compute costs of that product against the resulting increases in conversion rates and average basket size. This data product should also be able to handle increased demand, growing data volume, and added complexity without a disproportionate rise in operating costs over time. Data engineers also need fine-grained cost information about data products and the pipelines that run them so they can optimize transformations, make informed infrastructure changes, and apply other cost management techniques.
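The cost-versus-value comparison in the ad placement example reduces to simple arithmetic once costs are attributed to individual pipeline steps. The Python sketch below assumes hypothetical per-step compute hours and a blended hourly rate; it totals cost per data product, ranks the most expensive steps as optimization hotspots, and estimates revenue lift as visits × (conversion rate × average basket size) compared with a baseline. All names and figures are illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StepCost:
    product: str
    step: str
    compute_hours: float
    rate_per_hour: float  # assumed blended compute price

    @property
    def cost(self) -> float:
        return self.compute_hours * self.rate_per_hour


def product_costs(steps: list[StepCost]) -> dict[str, float]:
    """Total compute cost per data product."""
    totals: dict[str, float] = {}
    for s in steps:
        totals[s.product] = totals.get(s.product, 0.0) + s.cost
    return totals


def hotspots(steps: list[StepCost], top: int = 3) -> list[tuple[str, str, float]]:
    """Most expensive pipeline steps: the first candidates for optimization."""
    ranked = sorted(steps, key=lambda s: s.cost, reverse=True)
    return [(s.product, s.step, s.cost) for s in ranked[:top]]


def revenue_lift(visits: int, base_conv: float, base_basket: float,
                 new_conv: float, new_basket: float) -> float:
    """visits * (new conversion * new basket - baseline conversion * baseline basket)."""
    return visits * (new_conv * new_basket - base_conv * base_basket)


# Illustrative monthly figures, not real benchmarks.
steps = [
    StepCost("ad_placement", "ingest_clickstream", 120, 0.5),
    StepCost("ad_placement", "join_inventory", 400, 0.5),
    StepCost("ad_placement", "score_slots", 80, 2.0),
    StepCost("churn_features", "aggregate", 50, 0.5),
]
cost = product_costs(steps)["ad_placement"]
lift = revenue_lift(100_000, 0.020, 50.0, 0.022, 52.0)
print(hotspots(steps, top=2))
print(f"cost={cost:.2f} lift={lift:.2f} net={lift - cost:.2f}")  # cost=420.00 lift=14400.00 net=13980.00
```

Attributing cost per step, not just per product, is what makes the hotspot ranking possible: here the join step dominates even though the scoring step runs on a more expensive rate.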

Key Enablers for Data Products

These critical capabilities lay a strong foundation for the creation and operation of high-quality data products that engage end users and enable them to actually deliver business value with data-driven decision-making. But how do you unlock these capabilities? It turns out there are four key enablers to tie it all together:

- Single pane of glass: Data integrity, stewardship, and data catalogs can only be achieved when data engineers, analysts, IT, and executives share a common, multi-faceted view of where data is coming from, how it is processed, and how it is used. With a single pane of glass that surfaces every aspect of your data pipelines, engineers can detect and fix errors in real time, SMEs can find existing data products and dream up new ones, and all stakeholders using company data can feel confident in its integrity and accuracy.

- Automated change management: Data pipelines, data integrity, and data lineage depend on reliable change management and propagation. To maintain a consistent, trustworthy data environment, every change made at a particular point in a data pipeline must be propagated to all other transformations, data pipelines, and affected data products downstream with minimal downtime and minimal reprocessing. Doing this work manually causes major disruptions and exposes data engineers to complex administrative and maintenance mistakes that can have devastating consequences for the decisions end users make with the data.

- Actionable data quality: Stakeholders are counting on having the right data in the right format at the right time. Data quality is directly related to data integrity, spanning data validations at data ingestion through to good stewardship of finished data products. But with hundreds of complex data pipelines interwoven for efficiency and reuse, it’s impossible for data engineers to track the accuracy, lineage, and completeness of their data products by hand. For these reasons, a rich set of actionable data quality enablers, fully integrated with automation and a single pane of glass, have become table stakes for the operation of data products at scale.

- Fine-grained observability: The last enabler of data products is observability. Organizations need a comprehensive understanding of the health and performance of their data products, both in real time and retroactively, to identify and fix anomalies before the data is used for decision-making. Data observability contributes to and surfaces the integrity of data lineage, and becomes an important source of operational data for data catalogs. It is also the starting point from which data engineers pinpoint problems and costs in data products and take measures to address issues and reduce overall costs.
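Automated change management boils down to a graph problem: find everything downstream of a change and rebuild it in dependency order, leaving unaffected nodes alone. The Python sketch below assumes a hypothetical pipeline graph and uses the standard library's `graphlib.TopologicalSorter` (Python 3.9+) for ordering. It only computes a reprocessing plan; an automation platform would also execute it, recompute incrementally, and monitor the results.

```python
from graphlib import TopologicalSorter

# Hypothetical pipeline graph: each node maps to the upstream nodes it reads from.
PIPELINE: dict[str, set[str]] = {
    "raw_orders": set(),
    "raw_customers": set(),
    "clean_orders": {"raw_orders"},
    "clean_customers": {"raw_customers"},
    "orders_enriched": {"clean_orders", "clean_customers"},
    "revenue_dashboard": {"orders_enriched"},
    "customer_360": {"clean_customers"},
}


def downstream_of(changed: str, graph: dict[str, set[str]]) -> set[str]:
    """The changed node plus everything that (transitively) reads from it."""
    children: dict[str, set[str]] = {n: set() for n in graph}
    for node, parents in graph.items():
        for parent in parents:
            children.setdefault(parent, set()).add(node)
    affected: set[str] = set()
    stack = [changed]
    while stack:
        node = stack.pop()
        if node not in affected:
            affected.add(node)
            stack.extend(children.get(node, set()))
    return affected


def propagation_plan(changed: str, graph: dict[str, set[str]]) -> list[str]:
    """Affected nodes in dependency order; everything else is left untouched."""
    affected = downstream_of(changed, graph)
    return [n for n in TopologicalSorter(graph).static_order() if n in affected]


plan = propagation_plan("clean_customers", PIPELINE)
print(plan)  # clean_customers first; raw_* and clean_orders are not reprocessed
```

Filtering a full topological order down to the affected set is the simplest way to guarantee that each node is rebuilt only after all of its changed inputs, while keeping reprocessing to the minimum the change requires.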

The Final Preparation Step: Data Pipeline Automation

Data pipeline automation is the overarching framework that brings all the capabilities and enablers of data products together. It bridges the gaps between individual capabilities that are otherwise obtained through brittle integration of various point solutions, and constitutes the core of a holistic, self-contained, self-correcting data system.

Companies that fully embrace data pipeline automation replace their disjointed “modern” data stacks with end-to-end processes that intelligently combine all six capabilities. This reduces strain on the data engineering team and increases transparency to internal stakeholders, setting them up with order-of-magnitude improvements in productivity and capability to make data products that bring unprecedented business value.

Eager to start your journey to data pipeline automation?

Learn more about what it is, how to do it, and what it can do for your organization by reading or downloading our “What is Data Pipeline Automation” whitepaper.
