Data engineering is evolving rapidly, driven by advancements in AI and automation. As teams face increasing demands, the need for efficient and effective solutions has never been greater.

This article (based on the webinar below) explores how data engineering teams can leverage AI and automation to enhance productivity and tackle current challenges. It covers four frameworks for adopting these technologies and offers practical insights into building a more productive data engineering environment.

The Evolution of Data Engineering

Over the past two decades, data engineering has transformed significantly. Initially, data teams focused their effort on scaling storage and processing capabilities. Companies like Snowflake and Databricks have made substantial strides in this area, enabling teams to store and process vast amounts of data with relative ease.

Now, the real challenge lies in enhancing team productivity to produce valuable insights with that data.

At the same time, a single data engineer can now achieve what once required a team of five or more. This shift presents a unique opportunity to rethink how teams operate. By leveraging new technologies and methodologies, organizations can empower their data engineers to work more efficiently and effectively to drive business impact.

Current Challenges in Data Engineering

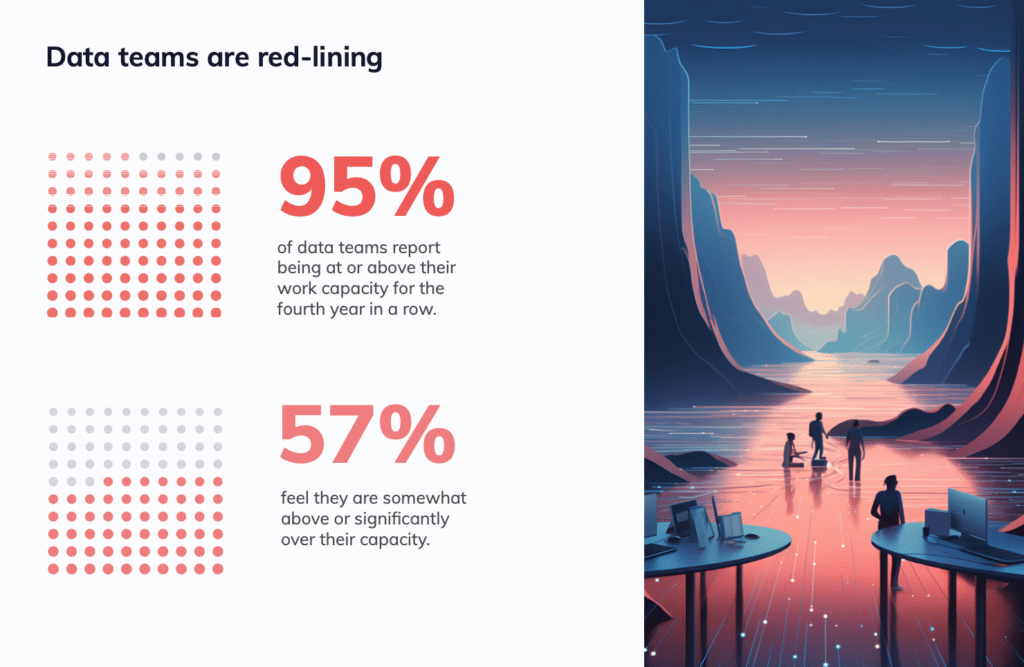

Despite the advancements, data engineering teams are grappling with significant challenges. Many teams are operating at or above capacity, struggling to meet the increasing demands from the business. This situation leads to burnout and inefficiencies, as teams find themselves bogged down in repetitive tasks rather than focusing on strategic initiatives.

Organizations must recognize that simply adding more personnel is not a sustainable solution. Instead, creative approaches are needed to help teams overcome these challenges and maximize their potential.

The Role of AI and Automation

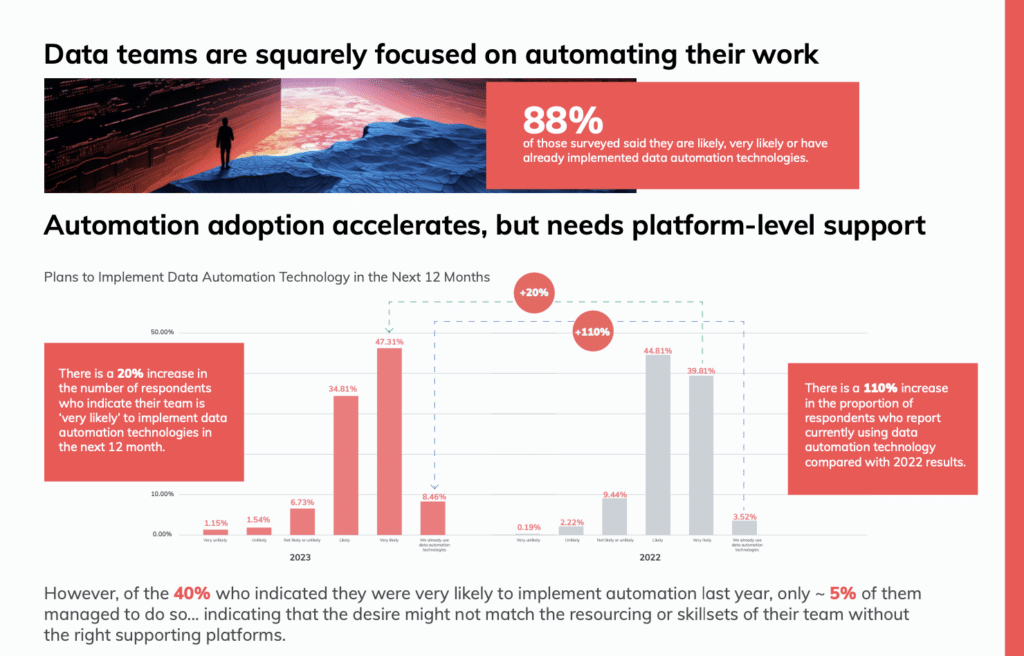

To meet these growing business demands, data teams are increasingly turning to AI and automation to help overcome current challenges and boost productivity. By automating mundane tasks, teams can free up time to focus on more complex and valuable work. This shift not only enhances productivity, but also improves job satisfaction among team members.

It’s no wonder that in our annual survey, 88% of respondents said they were likely or very likely to implement automation technology in 2024.

Frameworks for AI and Automation Adoption

In 2022, Gartner famously estimated that 85% of all AI projects fail to produce value for businesses. This sobering statistic has haunted well-intentioned data teams for years.

Successfully integrating AI and automation into data engineering processes is no small feat, but it is achievable. Over the past year, our team has been hard at work developing innovative AI agents and new automation tools for our customers.

Based on our experience at Ascend, I’ve identified four key frameworks that provide a structured approach to implementing these technologies. These frameworks ensure teams are equipped with the necessary tools and support.

My hope in sharing this is that your team can leverage these insights and become part of the 15% of AI projects that drive significant impact for your organization.

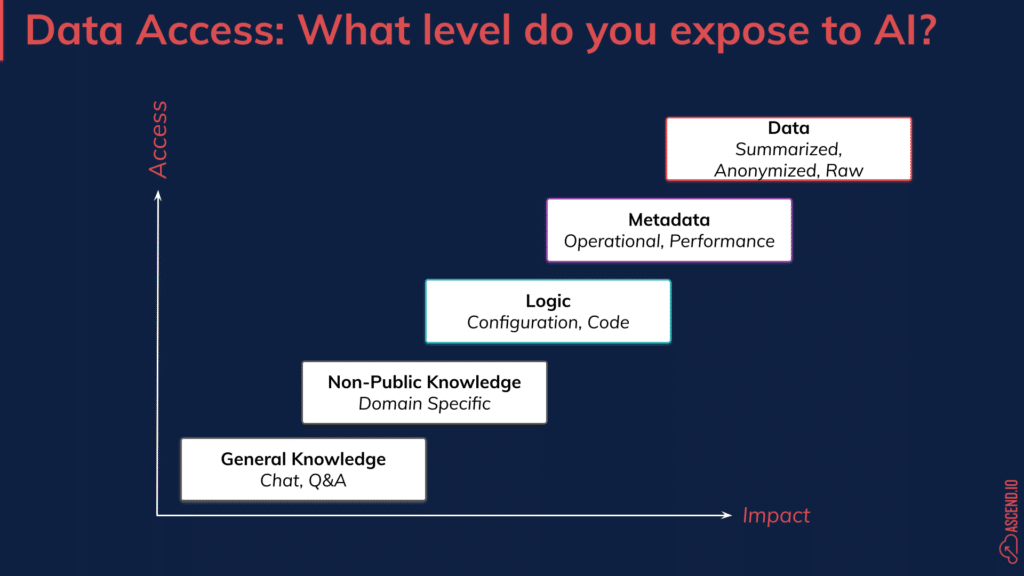

AI Implementation Framework #1 – Levels of Access

The first framework focuses on defining levels of access for AI and automation tools. Establishing these levels is crucial for managing risks and building trust in AI and automation systems within the organization.

Organizations must determine the extent of access to data and code they are willing to grant these systems. This can range from basic access to general knowledge to more sensitive operational data.

Here’s a breakdown of the different levels of access:

General Knowledge Access:

This level includes access to publicly available data and non-sensitive information. It allows AI tools to perform fundamental tasks without risking sensitive data exposure. Examples include accessing public datasets, general business information, and publicly available statistics.

Access to Non-Public Knowledge:

At this level, AI systems can access internal documents, non-confidential reports, and other proprietary information not available to the public. This helps the AI tools provide more contextual and relevant outputs, improving their utility without compromising critical data security.

Access to Code and Logic:

Allowing AI systems to access and understand the organization’s codebase and logical frameworks enables more sophisticated automation and integration. This access is controlled and monitored to ensure that AI tools can interact with the software environment safely, enhancing development efficiency and operational consistency.

Metadata Access:

This level involves granting AI systems access to operational metadata, which includes information related to day-to-day data operations. Such access allows AI tools to optimize processes, predict maintenance needs, and improve operational efficiency. [Learn how we use metadata to automate 90% of manual data pipeline maintenance.]

Data Access:

This highest level of access allows AI systems to interact directly with business data. This could include summarized or anonymized data, or raw data, depending on sensitivity and business needs. This access is critical for advanced analytics, machine learning model training, and real-time decision-making. Given the sensitivity, this access level must be strictly controlled and monitored to prevent data breaches and misuse.

By establishing clear levels of access, organizations can ensure that AI and automation tools operate within defined boundaries. This framework not only helps in managing risks but also builds trust among stakeholders by ensuring that data privacy and security are prioritized.

Clear access levels also facilitate compliance with regulatory requirements, enhance transparency, and improve the overall governance of AI initiatives within the organization.
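
The access levels above can be sketched as an ordered enumeration. This is a minimal illustration rather than a prescribed implementation: it assumes the levels are cumulative (a tool granted a higher level may also use everything below it), and the names `AccessLevel` and `is_allowed` are hypothetical.

```python
from enum import IntEnum


class AccessLevel(IntEnum):
    """Access levels from the framework, ordered from least to most sensitive."""

    GENERAL_KNOWLEDGE = 1     # public data and non-sensitive information
    NON_PUBLIC_KNOWLEDGE = 2  # internal documents, non-confidential reports
    CODE_AND_LOGIC = 3        # the organization's codebase and logic
    METADATA = 4              # operational metadata
    DATA = 5                  # business data (summarized, anonymized, or raw)


def is_allowed(granted: AccessLevel, required: AccessLevel) -> bool:
    """Return True if a tool granted `granted` may use a resource needing `required`.

    Assumption: levels are cumulative, so a simple ordering comparison suffices.
    """
    return granted >= required


# Example: an assistant that has been granted access to code, but not to data.
assistant_grant = AccessLevel.CODE_AND_LOGIC
print(is_allowed(assistant_grant, AccessLevel.NON_PUBLIC_KNOWLEDGE))  # True
print(is_allowed(assistant_grant, AccessLevel.DATA))                  # False
```

An organization that does not treat the levels as strictly cumulative (for example, granting metadata access without code access) would need a set of grants per tool instead of a single ordered value.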

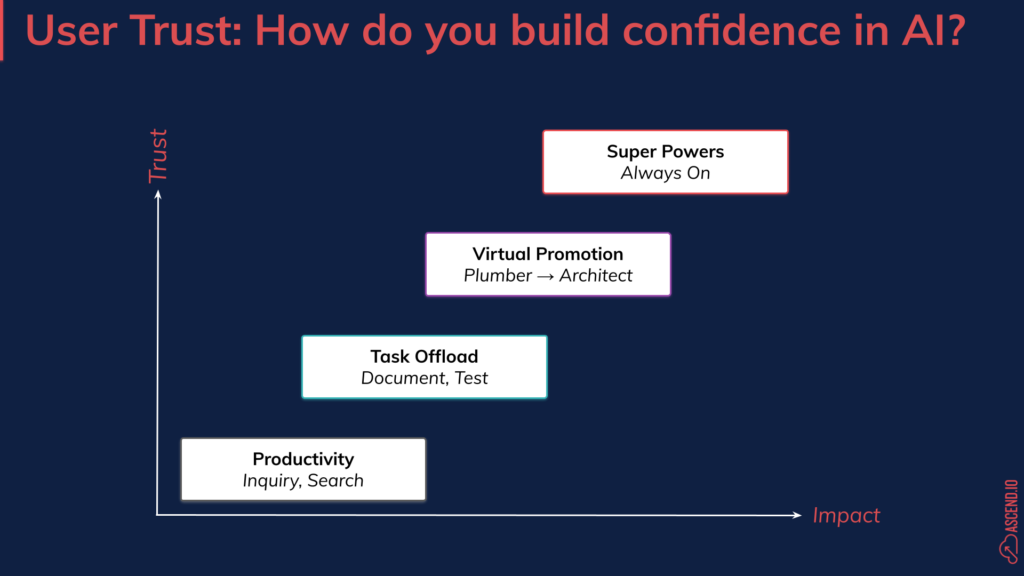

AI Implementation Framework #2 – Establishing User Trust

The second framework emphasizes the importance of user trust in AI and automation systems. Even if organizations have confidence in the technology, it is crucial for users to embrace it as well. That trust is built gradually, through a series of strategic steps:

1. Initial Adoption of AI Tools – Basic Productivity

The first step in building trust is to introduce AI tools in a way that demonstrates their value without overwhelming users. This can involve implementing AI in low-risk, high-reward scenarios where its benefits are clear and immediate.

Examples include using AI to ask questions or search documentation. In this phase, leaders should work to provide training and resources to help users understand how the AI works and its potential benefits to ease the transition.

2. Higher Task Offloading to AI

As users become more comfortable with AI tools, the next step is to gradually increase the complexity and importance of tasks delegated to AI. This can include tasks that require more nuanced decision-making, such as predictive analytics, trend analysis, or anomaly detection.

Regular feedback loops should be established to address any issues and continuously improve the system’s performance, reinforcing user confidence.

3. Virtual Promotion of Human Team Members

The concept of virtual promotion involves empowering employees to reimagine their roles within the organization to maximize the benefits of AI tools. This means enabling team members to move “left” in the workflow hierarchy, allowing engineers to act more as architects, designing workflows and strategies, while the AI takes on the task of writing and optimizing code.

This shift not only enhances the strategic capabilities of the team but also leverages AI to handle repetitive and detailed work, thus fueling overall productivity and innovation.

4. Developing Superpowers

Ultimately, AI tools should be seen as augmenting human capabilities, giving users “superpowers” that enhance their productivity and effectiveness. This can involve AI-driven insights that help users make better decisions, automation that frees them from mundane tasks, and intelligent systems that anticipate their needs.

By positioning AI as an enabler of enhanced performance, users are more likely to embrace and trust the technology.

Fostering user trust in AI and automation tools is essential for maximizing their effectiveness. Organizations can achieve this by ensuring transparent communication about how AI systems work, addressing user concerns proactively, and demonstrating the tangible benefits of AI. Additionally, involving users in the development and refinement of AI tools can create a sense of ownership and alignment with organizational goals.

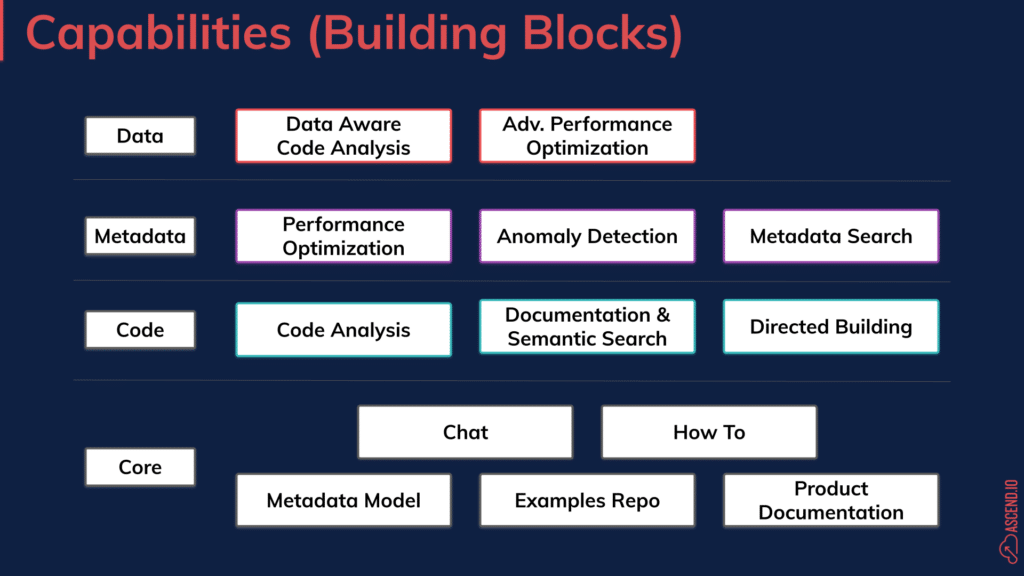

AI Implementation Framework #3 – Scaffolding Capabilities

The Capabilities Framework builds off the Levels of Access and User Trust Frameworks to illustrate how teams can deliver impact incrementally over time. It encourages organizations to start small and gradually build on foundational capabilities. This approach prevents overwhelming teams and allows for incremental improvements while ensuring that each layer builds on the previous one to achieve more at each level.

Core Capabilities:

At the core level, organizations should focus on utilizing general knowledge resources to assist users. These resources can include tutorials, chat support, how-to guides, and example repositories.

By providing easy access to this information, users can quickly learn how to interact with AI tools and apply them to basic tasks. Core capabilities help users get comfortable with AI, laying the groundwork for more advanced functionalities.

Code Capabilities:

Building on the core capabilities, the next layer involves granting AI systems access to user code. This enables more sophisticated capabilities such as semantic search, code analysis, and directed building.

With access to the codebase, AI tools can provide intelligent suggestions, detect potential issues, and assist in writing and optimizing code. This layer empowers users to leverage AI for improving code quality and development efficiency.

Metadata Capabilities:

Access to metadata allows AI systems to deliver enhanced optimization and diagnostic capabilities. At this level, AI tools can perform tasks such as anomaly detection, metadata search, and process optimization.

By analyzing metadata, AI can identify patterns and provide insights that help improve system performance and reliability. This layer builds on the code capabilities by adding an extra dimension of data context, enabling more precise and effective AI interventions.

Data Capabilities:

The highest level of the framework involves access to business data, enabling advanced AI capabilities. With this access, AI systems can perform data-aware code analysis, advanced performance optimization, and more.

These capabilities allow AI tools to understand and interact with the actual data used by the organization, providing deeper insights and more targeted improvements. Data capabilities build on all previous layers, integrating general knowledge, code, and metadata to create comprehensive AI solutions.

By focusing on these capabilities, organizations can create effective AI solutions that address specific needs at each level. The scaffolding approach ensures that each new capability builds on a solid foundation, promoting gradual and sustainable growth in AI adoption.
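
The layering described above can be sketched as an ordered list in which each layer unlocks everything beneath it. The layer names and example capabilities come from this section; the data structure and the function name `unlocked_capabilities` are illustrative assumptions, not part of any product API.

```python
from __future__ import annotations

# Capability layers in the order the framework builds them up.
# Each entry is (layer name, example capabilities named in the article).
CAPABILITY_LAYERS = [
    ("core", ["tutorials", "chat support", "how-to guides", "example repositories"]),
    ("code", ["semantic search", "code analysis", "directed building"]),
    ("metadata", ["anomaly detection", "metadata search", "process optimization"]),
    ("data", ["data-aware code analysis", "advanced performance optimization"]),
]


def unlocked_capabilities(highest_layer: str) -> list[str]:
    """Return every capability available up to and including `highest_layer`."""
    names = [name for name, _ in CAPABILITY_LAYERS]
    if highest_layer not in names:
        raise ValueError(f"unknown layer: {highest_layer!r}")
    cutoff = names.index(highest_layer)
    unlocked: list[str] = []
    for _, capabilities in CAPABILITY_LAYERS[: cutoff + 1]:
        unlocked.extend(capabilities)
    return unlocked


# A team that has granted code access, but not metadata or data access:
print(unlocked_capabilities("code"))
```

Here a team at the "code" layer gets the core and code capabilities but not anomaly detection, which first appears at the metadata layer.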

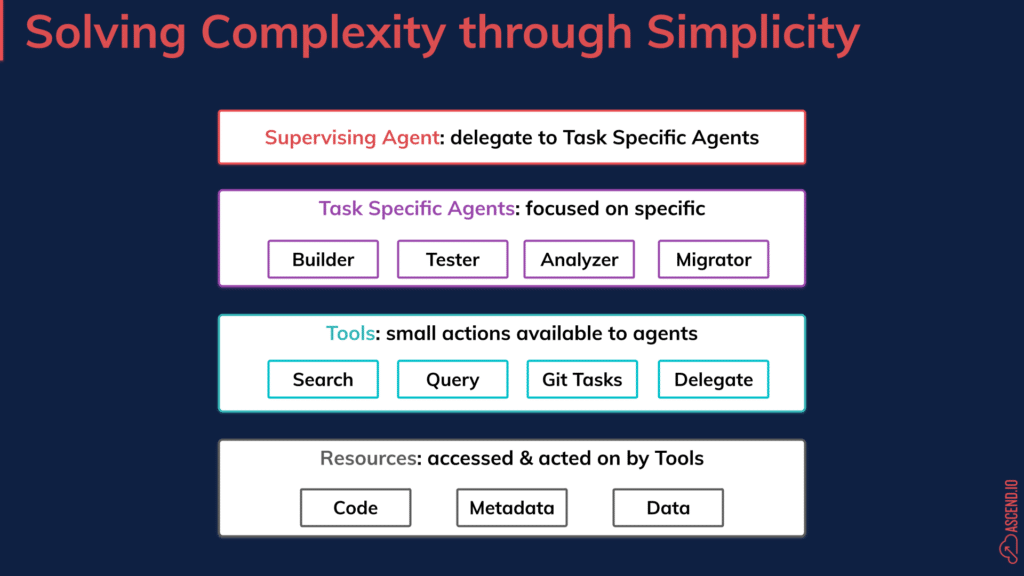

AI Implementation Framework #4 – The Agentic Model

This framework provides a practical roadmap for implementing AI and automation within the organization. It emphasizes the importance of defining clear objectives, establishing workflows, and measuring outcomes using a multi-agent approach. Building with this framework involves three basic steps:

Define Core Tools and Resources:

Identify and implement the essential AI and automation tools that will serve as the foundation for your strategy. These tools should align with your organizational goals and integrate seamlessly into existing workflows.

Establish Task-Specific Agents:

Develop AI agents tailored to specific tasks within your organization. These agents can handle repetitive and time-consuming tasks, allowing your team to focus on more strategic activities.

Implement Supervising Agents:

Introduce supervising agents that oversee the performance and accuracy of task-specific agents. These agents ensure that AI tools operate effectively and align with organizational standards and objectives.

Practical Strategies for AI & Automation Implementation
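
As a practical illustration, the three steps of the agentic model from Framework #4 can be prototyped in plain Python. The sketch below is deliberately simplified and makes several assumptions: the "agents" are ordinary functions rather than calls to an AI model, and all names (`count_rows`, `find_null_columns`, `TaskAgent`, `SupervisingAgent`) are hypothetical. It shows only the structure: core tools, task-specific agents that use them, and a supervisor that checks their results.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


# Step 1: core tools are plain functions that agents can call (hypothetical examples).
def count_rows(table: list[dict]) -> int:
    """Return the number of rows in the table."""
    return len(table)


def find_null_columns(table: list[dict]) -> list[str]:
    """Return sorted names of columns that contain at least one None value."""
    return sorted({col for row in table for col, val in row.items() if val is None})


# Step 2: a task-specific agent wraps one narrowly scoped job.
@dataclass
class TaskAgent:
    name: str
    run: Callable[[list[dict]], object]


# Step 3: a supervising agent runs the task agents and checks each result
# against a rule that stands in for organizational standards.
@dataclass
class SupervisingAgent:
    checks: dict[str, Callable[[object], bool]]

    def review(self, agents: list[TaskAgent], table: list[dict]) -> dict[str, dict]:
        report = {}
        for agent in agents:
            result = agent.run(table)
            # Agents without a registered check are approved by default.
            check = self.checks.get(agent.name, lambda _: True)
            report[agent.name] = {"result": result, "approved": check(result)}
        return report


# Usage with a tiny in-memory table.
table = [{"id": 1, "email": "a@example.com"}, {"id": 2, "email": None}]
agents = [
    TaskAgent("row_count", count_rows),
    TaskAgent("null_scan", find_null_columns),
]
supervisor = SupervisingAgent(
    checks={
        "row_count": lambda n: n > 0,               # table must not be empty
        "null_scan": lambda cols: len(cols) == 0,   # no column may contain nulls
    }
)
report = supervisor.review(agents, table)
print(report)
```

In this run the supervisor approves the row count and flags the null scan, because the `email` column contains a missing value. In a real deployment the task agents and the supervisor would typically be backed by AI models, with the supervisor's checks encoding the organization's own standards.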

Beyond the four frameworks, there are a few other practical steps that leaders can take to ensure their AI and automation projects succeed. Here are some practical strategies organizations can adopt that worked well for us at Ascend:

1. Start with Pilot Projects:

Begin with small-scale pilot projects to test AI and automation tools in a controlled environment. Choose projects that have clear objectives, manageable scope, and measurable outcomes. Allow teams to experiment, learn, and refine their processes without significant risks. Successful pilot projects can serve as proof of concept, demonstrating the value of AI and automation and paving the way for broader implementation.

2. Encourage Team Collaboration:

Foster a collaborative culture for team members to build and learn together. Encourage cross-functional teams to work together on AI and automation projects, promoting knowledge sharing and collective problem-solving.

We have seen the benefits of this firsthand at our Quarterly Hackathons. For the past several quarters, cross-functional teams have innovated with unfettered enthusiasm, working on projects that have impacted our product, marketing, and sales productivity.

3. Provide Training and Resources:

Equip your team with the necessary training and resources to effectively use AI and automation tools. Offer workshops, discussion groups, and Slack channels to help team members develop the required skills. Provide access to documentation, best practices, and support resources.

4. Measure and Iterate on Outcomes:

Establish a robust framework for measuring the performance and impact of AI and automation initiatives. Define key performance indicators (KPIs) and metrics to track progress and outcomes. Regularly review and analyze the results, gathering feedback from users and stakeholders.

Continuous improvement is essential to adapt to evolving needs and maximize the benefits of AI and automation.

Future Insights and Trends

As AI and automation continue to evolve, data engineering teams must stay informed about emerging trends and technologies. Organizations should invest in ongoing training and development to ensure their teams are equipped with the latest skills and knowledge.

While these frameworks may evolve as AI does, it is also essential that data teams are prepared to adapt at the speed of AI.