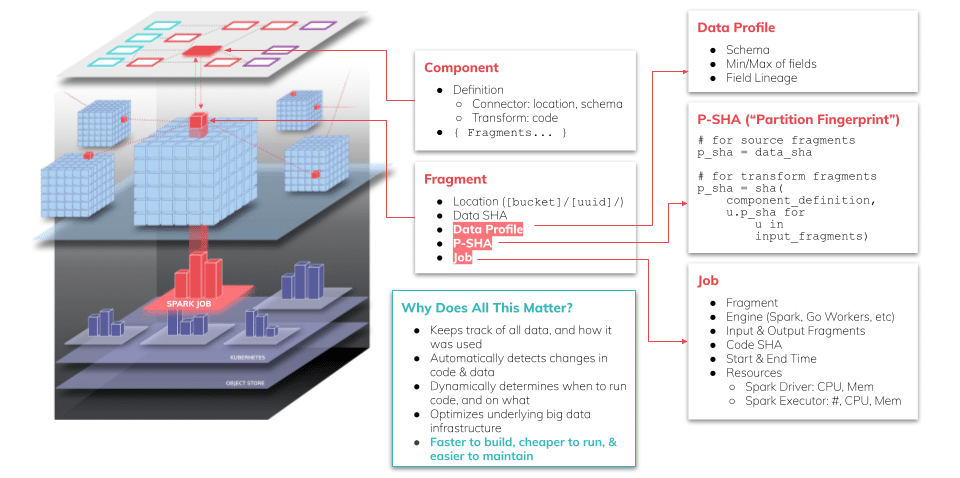

As many Ascend users know, our automation engine is backed by a set of integrity checks on both code and the originating data that help us intelligently detect when data needs to be processed (and when it is safe not to). Much of this is tied to our use of a declarative model, which you can read about in our whitepaper on pipeline orchestration models or hear about in our tech talk on the subject.

The backbone of this approach relies on the ability to quickly and efficiently detect changes in code and data. In data pipelines, when transformations are deterministic in nature, their outputs change only when their inputs change. Hence, when there is a change in upstream data (new data, or even changes to old data), we can intelligently detect those changes and reprocess the relevant pieces. Moreover, once that data has been processed, we can proceed forward with the next data operation, and so on, and so on, until complete.
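As a rough illustration of the idea (not Ascend's actual implementation), change detection for a deterministic transformation can be sketched as a fingerprint over the code plus the fingerprints of its inputs. The helper names `sha256_hex` and `needs_processing`, the sample SQL, and the partition strings are all hypothetical.

```python
import hashlib

def sha256_hex(*parts):
    """SHA-256 over several strings, length-prefixed so parts cannot run together."""
    h = hashlib.sha256()
    for part in parts:
        data = part.encode("utf-8")
        h.update(len(data).to_bytes(8, "big"))
        h.update(data)
    return h.hexdigest()

def needs_processing(code, input_fingerprints, last_seen):
    """Return (stale, key): stale is True when code or any input fingerprint changed."""
    key = sha256_hex(code, *input_fingerprints)
    return key != last_seen, key

code_v1 = "SELECT country, COUNT(*) FROM users GROUP BY country"
inputs_v1 = [sha256_hex("partition-2020-01-01 contents")]

# First run: nothing has been seen yet, so the stage must be processed.
stale, key = needs_processing(code_v1, inputs_v1, last_seen=None)

# Same code, same inputs: safe to skip.
same, _ = needs_processing(code_v1, inputs_v1, last_seen=key)

# Old data changed upstream: the stage must be reprocessed.
inputs_v2 = [sha256_hex("partition-2020-01-01 contents, late-arriving row")]
changed, _ = needs_processing(code_v1, inputs_v2, last_seen=key)
```

Here `stale` and `changed` are `True` while `same` is `False`, which is the "process only when something upstream changed" behavior described above.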

For those of you who have watched our tech talks before, you’ve probably seen something like this:

But what if the input data changes, yet the output data remains the same? We clearly need to process that first change in data. But what if we could be smart enough to confirm that the output data has not changed despite changes in input data (or even code 🤯 ), and avoid the unnecessary downstream processing? After all, we already know the result will be the same, since unchanged input data fed into an unchanged deterministic operation produces unchanged output data.
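To make that scenario concrete, here is a minimal sketch, assuming a toy transformation whose output happens not to change when a new input row arrives. The functions `fingerprint`, `run_stage`, and `distinct_countries` are hypothetical and only illustrate the short-circuit.

```python
import hashlib

def fingerprint(rows):
    """Order-sensitive SHA-256 over a list of rows (fine here: the output is sorted)."""
    h = hashlib.sha256()
    for row in rows:
        h.update(repr(row).encode("utf-8"))
    return h.hexdigest()

def run_stage(transform, rows, previous_output_fp):
    """Run the transform, then report whether downstream stages need to run."""
    output = transform(rows)
    fp = fingerprint(output)
    downstream_needed = fp != previous_output_fp
    return output, fp, downstream_needed

def distinct_countries(rows):
    """Toy deterministic transformation: the sorted set of countries."""
    return sorted({country for _, country in rows})

first = [("alice", "US"), ("bob", "DE")]
second = first + [("carol", "US")]  # new input row, but no new country

_, fp1, needed1 = run_stage(distinct_countries, first, None)
_, fp2, needed2 = run_stage(distinct_countries, second, fp1)
```

The second run still has to execute `distinct_countries`, since its input changed, but `needed2` is `False` because the output fingerprint matches, so everything downstream could be skipped.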

Introducing D-SHAs

Well, we are excited to announce our support for just that! The feature is called D-SHAs, short for Data SHAs. SHA stands for Secure Hash Algorithm, a mechanism often used in cryptography to create a compact digest, or in colloquial terms, a fingerprint that uniquely identifies a block of data. While we use many (many, many) SHAs of code, data, combinations of code and data, and more, D-SHAs are unique in that they are the first to actively check intermediate data and halt downstream processing that is simply not necessary.

We encountered a few challenges in implementing this feature. First, we wanted an implementation that works without too much computational overhead. Second, as those of you who have worked with Spark in the past may know, distributed processing systems like Spark do not guarantee a deterministic ordering of output records (the ordering can be made deterministic by sorting, but that comes at the cost of our first goal).

To accomplish the first goal, we surveyed a variety of hashing implementations. Internally, Ascend uses SHA256 to generate fingerprints for configuration metadata. For generating a signature of the actual data, we looked at Spark’s built-in XXHash64, SHA256 on the external row, and Murmur3_128, the latter both on the external row with mapPartitions and as a code-generated version that accesses the internal storage by extending Spark’s HashExpression. Using the Spark built-in hash expression required some additional effort to handle the Map data type in a non-order-dependent way (the existing implementation depends on the map insertion order, hence https://issues.apache.org/jira/browse/SPARK-27619). We ultimately settled on the Murmur3_128 version on the external row, as it provided the best tradeoff between computational overhead, implementation complexity, and collision resistance.

- SHA256 – A cryptographic hash function with significantly more overhead, which was readily apparent when processing large datasets

- XXH64 – Highest performance, but only 64 bits of entropy

- Murmur3_128 on internal row + code gen – In our testing, we observed only a minor performance increase vs. the interpreted version on the external row, with a potential risk of instability if the internal implementations change

| Implementation | Overhead | Implementation Complexity | Collision Resistance |
|---|---|---|---|
| Spark built-in XXHASH64 with patch | + | +++ | +++ |
| SHA256 on external spark row | ++++++++ | ++ | +++++++++++++ |
| Murmur3_128 external spark row | ++++ | ++ | ++++++++ |
| Murmur3_128 on internal spark data | +++ | +++++ | ++++++++ |
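The Map ordering issue mentioned earlier is easy to reproduce outside of Spark. The sketch below uses plain Python dicts and SHA-256 purely for illustration; sorting entries by key is one possible fix and is an assumption here, not necessarily what the Spark patch or Ascend does.

```python
import hashlib

def order_dependent_hash(mapping):
    """Hashes entries in insertion order, so equal maps can hash differently."""
    h = hashlib.sha256()
    for key, value in mapping.items():
        h.update(repr((key, value)).encode("utf-8"))
    return h.hexdigest()

def order_independent_hash(mapping):
    """Hashes entries in sorted key order, so insertion order no longer matters."""
    h = hashlib.sha256()
    for key, value in sorted(mapping.items()):
        h.update(repr((key, value)).encode("utf-8"))
    return h.hexdigest()

a = {"x": 1, "y": 2}
b = {"y": 2, "x": 1}  # same contents, different insertion order

same_contents = a == b
naive_matches = order_dependent_hash(a) == order_dependent_hash(b)
sorted_matches = order_independent_hash(a) == order_independent_hash(b)
```

The two maps compare equal, yet `naive_matches` is `False` and only `sorted_matches` is `True`. Sorting assumes the keys are mutually comparable, which holds for the string keys used here.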

Next, we had to account for the fact that records may arrive in any order. Aggregating record hashes by re-hashing the hashes would not suffice, because the result would be order-dependent. A simple XOR would not work either, because duplicate rows would cancel each other out. We reviewed how the Guava library does unordered aggregation of hash codes and found that it performs modular addition over the individual bytes. We elected not to copy that approach, since it has the same issue as XOR: it just takes 256 copies of a row, rather than 2, to become equivalent to 0 copies.
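Both failure modes can be demonstrated in a few lines. This is a sketch under stated assumptions: BLAKE2b with a 16-byte digest stands in for Murmur3_128 (which is not in the Python standard library), and `xor_combine` and `bytewise_add_combine` are hypothetical helpers that mimic the two aggregation strategies rather than reproduce any library's code.

```python
import hashlib

def row_hash(row):
    """128-bit row fingerprint. BLAKE2b is a stand-in for Murmur3_128."""
    return hashlib.blake2b(repr(row).encode("utf-8"), digest_size=16).digest()

def xor_combine(hashes):
    """Order-independent, but any pair of identical rows cancels out."""
    acc = bytes(16)
    for h in hashes:
        acc = bytes(x ^ y for x, y in zip(acc, h))
    return acc

def bytewise_add_combine(hashes):
    """Adds each byte position modulo 256, independently of the other bytes."""
    acc = [0] * 16
    for h in hashes:
        acc = [(x + y) % 256 for x, y in zip(acc, h)]
    return bytes(acc)

h = row_hash(("alice", "US"))
o = row_hash(("bob", "DE"))

# Both strategies are order-independent...
xor_unordered = xor_combine([h, o]) == xor_combine([o, h])
add_unordered = bytewise_add_combine([h, o]) == bytewise_add_combine([o, h])

# ...but 2 duplicate rows vanish under XOR, and 256 vanish under bytewise addition.
xor_cancels = xor_combine([o, h, h]) == xor_combine([o])
add_cancels = bytewise_add_combine([o] + [h] * 256) == bytewise_add_combine([o])
```

All four flags come out `True`: a dataset with the duplicated row is indistinguishable from one without it.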

The implementation we decided on was to treat the bytes of the respective row fingerprints as a big integer and perform a sum over the data, producing an aggregated fingerprint that is order independent, and resistant to duplicate rows (2^128). To add further safeguards against collisions, we incorporate the data fingerprint with the record count and schema using SHA256 to create the final D-SHA.
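A minimal sketch of that aggregation follows, with several assumptions called out: BLAKE2b (16-byte digest) stands in for Murmur3_128, the sum is taken modulo 2^128 (our reading of the "(2^128)" note), and the way the count and schema are encoded into the final SHA256 is invented for illustration. The function name `d_sha` is hypothetical.

```python
import hashlib

MODULUS = 1 << 128  # assumption: the big-integer sum wraps at 128 bits

def row_hash(row):
    """128-bit row fingerprint. BLAKE2b is a stand-in for Murmur3_128."""
    return hashlib.blake2b(repr(row).encode("utf-8"), digest_size=16).digest()

def d_sha(rows, schema):
    """Order-independent fingerprint of rows, combined with count and schema."""
    total = 0
    count = 0
    for row in rows:
        # Treat the 16 hash bytes as one big integer, so carries cross byte boundaries.
        total = (total + int.from_bytes(row_hash(row), "big")) % MODULUS
        count += 1
    final = hashlib.sha256()
    final.update(total.to_bytes(16, "big"))
    final.update(count.to_bytes(8, "big"))   # hypothetical encoding of the record count
    final.update(schema.encode("utf-8"))     # hypothetical encoding of the schema
    return final.hexdigest()

schema = "name:string,country:string"
a = ("alice", "US")
b = ("bob", "DE")

order_independent = d_sha([a, b], schema) == d_sha([b, a], schema)
duplicates_detected = d_sha([b, a, a], schema) != d_sha([b], schema)
many_duplicates_detected = d_sha([b] + [a] * 256, schema) != d_sha([b], schema)
schema_matters = d_sha([a, b], schema) != d_sha([a, b], "name:string,region:string")
```

Unlike the XOR and bytewise variants, duplicated rows change the result here, and the record count in the final hash provides a second line of defense even if the sum were ever to wrap.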

Lastly, to limit the impact on end-to-end runtime, we run our D-SHA calculations in parallel with the write to storage.
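The overlap can be pictured with a small thread-based sketch. This is only an analogy for the Spark job, assuming a local file as "storage" and BLAKE2b as a stand-in for Murmur3_128; `write_rows`, `sum_fingerprint`, and `write_and_fingerprint` are hypothetical names.

```python
import hashlib
import os
import tempfile
from concurrent.futures import ThreadPoolExecutor

def write_rows(rows, path):
    """Stand-in for the write to storage; returns the number of rows written."""
    with open(path, "w", encoding="utf-8") as f:
        for row in rows:
            f.write(repr(row) + "\n")
    return len(rows)

def sum_fingerprint(rows):
    """Order-independent 128-bit sum of per-row hashes."""
    total = 0
    for row in rows:
        digest = hashlib.blake2b(repr(row).encode("utf-8"), digest_size=16).digest()
        total = (total + int.from_bytes(digest, "big")) % (1 << 128)
    return total

def write_and_fingerprint(rows, path):
    """Submit the write and the fingerprint together so neither waits on the other."""
    with ThreadPoolExecutor(max_workers=2) as pool:
        written = pool.submit(write_rows, rows, path)
        fp = pool.submit(sum_fingerprint, rows)
        return written.result(), fp.result()

rows = [("alice", "US"), ("bob", "DE")]
path = os.path.join(tempfile.mkdtemp(), "part-00000.txt")
count, fp = write_and_fingerprint(rows, path)
```

In CPython the hashing thread mostly makes progress while the writer is blocked on I/O, so this only approximates the effect; the point is that the fingerprint does not add a separate pass after the write completes.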

Ready To Get Started With D-SHAs?

Want to start taking advantage of this capability today? Odds are, it’s already enabled in your environment and you’re benefiting from the advanced automation. If you’re not sure, however, just ping us in our Slack community and we’d be happy to help.

Want to learn more about D-SHAs and our other features? Schedule a live demo with one of our data engineers!