What is Data Pipeline Orchestration?

Data Pipeline Orchestration automates the movement and transformation of data between various systems, ensuring data is accurate, up-to-date, and ready for analysis. This process unifies data from different sources and is essential for effective data management. It differs from Data Orchestration.

Updated November 14, 2024

The terms ‘data orchestration’ and ‘data pipeline orchestration’ are often used interchangeably, yet they diverge significantly in function and scope. Understanding these differences is not just an exercise in semantics; it’s a critical distinction that, if overlooked, could lead to misallocated resources and substantial financial implications when developing data infrastructure.

Data orchestration refers to a wide collection of methods and tools that coordinate any and all types of data-related computing tasks. This includes job process sequencing, metadata synchronization, cataloging of data processing results, triggering data loads and quality checks, detecting when one task is done and triggering another, and setting the timing of scripts and system commands.

Data pipeline orchestration, by contrast, is a more targeted approach. It zeroes in on the specific tasks required to build, operate, and manage data pipelines. Here lies the crucial difference: data pipeline orchestration is inherently context-aware. It understands the events and processes within the pipeline, which enables more precise and efficient management of data flows. General data orchestration lacks this level of contextual insight.

This article delves deep into data pipeline orchestration, exploring how its context-aware capabilities and potential for extensive automation can revolutionize the way data pipelines are managed, offering a strategic advantage in data handling and processing.

If you are interested in the broader context of data orchestration, including pipeline considerations, here is our dedicated article on the broader topic.

What Is Data Pipeline Orchestration?

Data pipeline orchestration is the scheduling, managing, and controlling of the flow and processing of data through pipelines. At its core, data pipeline orchestration ensures that the right tasks within a data pipeline are executed at the right time, in the right order, and under the right operational conditions.

Imagine each data pipeline as a complex mechanism composed of numerous interdependent components. These components must work in harmony, driven by a defined operational logic that dictates the activation, response, and contribution of each segment to the collective goal of data processing.

The essence of data pipeline orchestration is its seamless management of these interactions. It goes beyond mere task execution. It’s about orchestrating a dynamic, interconnected sequence where the output of one process feeds into the next, and where each step is contingent on the successful completion of its predecessors.

The Need for Orchestration in Data Pipelines

Data pipelines are embedded in highly technical execution environments that present challenges of process timing, workload distribution, and computational resource allocation. At the highest level, data pipelines can be seen as a seamless progression of data through various stages of processing. Typically, these stages run from extraction, through transformation, to loading into target systems (a.k.a. ETL).

While this beginner’s perspective seems approachable enough, many aspects of data pipeline orchestration make it considerably more complicated. Take a few examples:

Network Complexity: In practice, data pipelines are rarely linear; they are multi-faceted networks of data flows. They can span multiple compute environments, touch several areas of responsibility and ownership, and cross over between batch and real-time processes. Orchestrating these pipelines requires a framework that coordinates across these complexities while ensuring each task runs reliably and in the correct sequence.

Resource Optimization: In enterprises, poorly orchestrated data pipelines can consume large amounts of computational resources. Focusing on efficiency during orchestration can lead to significant savings. This includes dynamically allocating and scaling resources based on pipeline workloads, and avoiding unnecessary reruns of pipelines.
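One common way to avoid unnecessary reruns is to fingerprint each task from its code version and the fingerprints of its upstream tasks, then rerun only the tasks whose fingerprint has changed. Because fingerprints chain through the graph, a change in one task automatically marks everything downstream of it as stale. The sketch below is a hypothetical illustration, not any particular product's mechanism. `plan_runs` and its inputs are assumed names, and a real system would also hash the input data itself. It requires Python 3.9+ for `graphlib`.

```python
import hashlib
import json
from graphlib import TopologicalSorter


def fingerprint(task_name, code_version, upstream_fps):
    """Hash a task's name, code version, and the fingerprints of its upstream tasks."""
    payload = json.dumps(
        {"task": task_name, "code": code_version, "upstream": sorted(upstream_fps)},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def plan_runs(dag, code_versions, previous):
    """Return (fingerprints, tasks_to_run) for a hypothetical pipeline.

    dag maps each task to its upstream tasks; previous maps each task to the
    fingerprint recorded on its last successful run (empty on the first run).
    """
    fps, to_run = {}, []
    # Visit tasks in dependency order so upstream fingerprints already exist.
    for task in TopologicalSorter(dag).static_order():
        upstream = [fps[u] for u in dag.get(task, [])]
        fps[task] = fingerprint(task, code_versions[task], upstream)
        if previous.get(task) != fps[task]:
            to_run.append(task)  # new or changed: must be (re)computed
    return fps, to_run
```

For example, if only a hypothetical `clean` task changes version, `plan_runs` schedules `clean` and its downstream `report` but skips unaffected branches.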

Operational Resilience: Data pipelines are subject to various points of failure, spanning data quality issues, interruptions in compute and network resources, and unexpected changes in the formats and volumes of data. Orchestration of data pipelines can provide resilience through these and many other discontinuities by incorporating features like proactive error detection, automated retry mechanisms, and pre-provisioned failover options to maintain uninterrupted data flow.

Dynamic Scalability: Many data pipelines experience fluctuating data volumes, not just day to day, but with seasons and operational events such as upstream application upgrades. Data pipeline orchestration can accommodate dynamic scaling specifically for these patterns, automatically adjusting computational resources to match the ebbs and flows of data traffic, ensuring efficiency regardless of load.
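A simple form of dynamic scaling sizes the worker pool from the current backlog. The sketch assumes a known, roughly constant per-worker throughput and hypothetical minimum and maximum pool sizes. Production autoscalers typically add smoothing so the pool does not thrash on short spikes.

```python
import math


def desired_workers(backlog_rows, rows_per_worker_per_min, target_minutes,
                    min_workers=1, max_workers=32):
    """Workers needed to drain the backlog within target_minutes, clamped to [min, max].

    Assumes every worker processes rows at the same steady rate (an illustrative
    simplification; real throughput varies with data shape and contention).
    """
    capacity_per_worker = rows_per_worker_per_min * target_minutes
    needed = math.ceil(backlog_rows / capacity_per_worker)
    return max(min_workers, min(max_workers, needed))
```

With 50,000 queued rows, 1,000 rows per worker per minute, and a 10-minute target, this asks for 5 workers. An idle queue falls back to the floor of 1, and a huge spike is capped at 32.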

These types of complexities are daunting when approached from a general orchestration perspective. However, in the focused context of orchestrating data pipelines, a refined approach to the control over data movements and transformations becomes not only possible, but automatable (more on this below).

Key Components of Data Pipeline Orchestration

Given that data pipeline orchestration is a specific domain, let’s take a look at some of the primary technical components that should work in unison in order to streamline these data pipeline workflows.

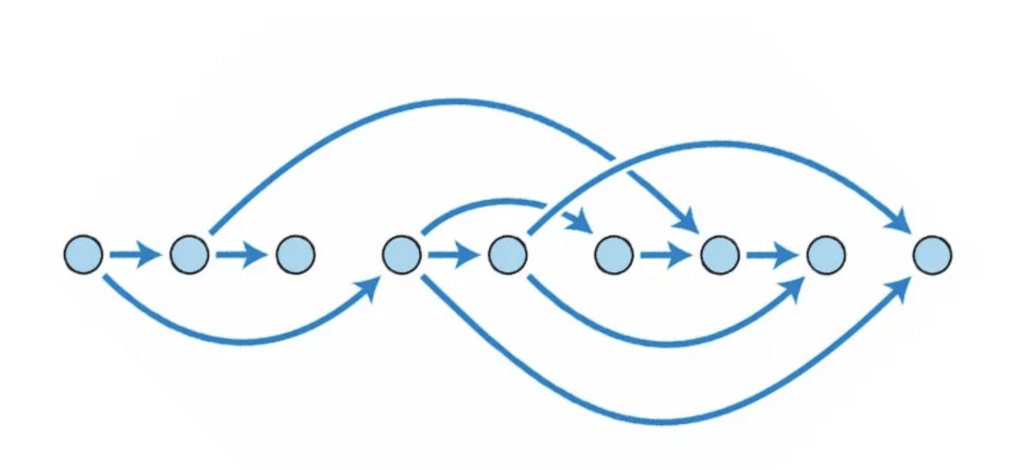

- Workflow Definition: This involves the specification of the pipeline’s business logic. It details the execution sequence of tasks, their dependencies, and the conditions under which they run. This is often expressed in a directed acyclic graph (DAG), where nodes represent tasks and edges define dependencies.

A general DAG schema. Source: Benoit Pimpaud
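As a minimal sketch of a workflow definition, a pipeline's DAG can be written in Python as a mapping from each task to the set of tasks it depends on. The standard library's `graphlib` module (Python 3.9+) then rejects cycles and yields one valid execution order. The pipeline and task names below are hypothetical.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of upstream tasks it depends on.
pipeline = {
    "extract_orders": set(),
    "extract_customers": set(),
    "join": {"extract_orders", "extract_customers"},
    "aggregate": {"join"},
    "load_warehouse": {"aggregate"},
}


def execution_order(dag):
    """Return one valid execution order, or raise ValueError if the graph has a cycle."""
    try:
        return list(TopologicalSorter(dag).static_order())
    except CycleError as exc:
        # graphlib stores the offending cycle as the second element of exc.args.
        raise ValueError(f"not a DAG, cycle found: {exc.args[1]}") from exc
```

Both extract tasks come before `join` in the returned order, and `load_warehouse` comes last. A definition in which two tasks depend on each other is rejected before anything runs.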

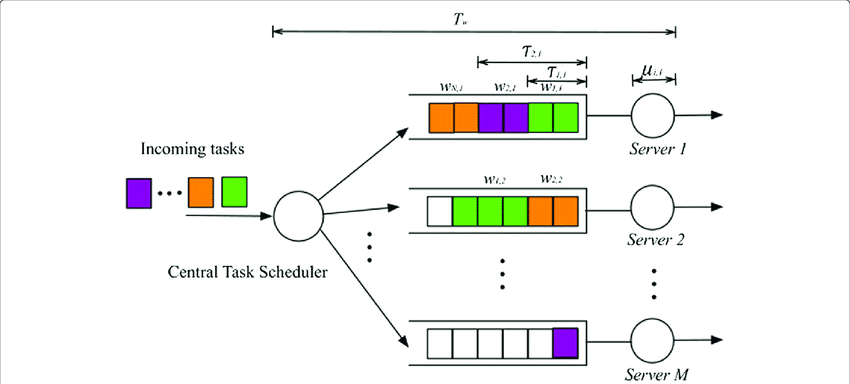

- Task Scheduling: Task scheduling is the temporal aspect of orchestration, determining when each task should actually be executed, with which datasets, and on which compute resources. Traditionally this can be based on time triggers, such as intervals or specific schedules, or based on event triggers, such as the completion of a preceding task.

Model of a general data center task scheduler. Source: Research Gate
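The two trigger types described above, time-based and event-based, can be illustrated with a small scheduling check. `Trigger` and `due_tasks` are illustrative names, not any scheduler's API. The sketch only decides which tasks are due. A real scheduler would also consume each event once it has acted on it, so that an event-triggered task does not fire repeatedly.

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Trigger:
    """Hypothetical trigger: a fixed interval, a set of upstream tasks, or both."""
    interval: Optional[timedelta] = None
    after: set = field(default_factory=set)


def due_tasks(triggers, last_run, completed, now):
    """Return tasks whose interval has elapsed or whose upstream tasks have all completed."""
    due = []
    for task, trig in triggers.items():
        # Time trigger: enough time has passed since the task last ran (or it never ran).
        time_due = (trig.interval is not None
                    and now - last_run.get(task, datetime.min) >= trig.interval)
        # Event trigger: every listed upstream task is in the completed set.
        event_due = bool(trig.after) and trig.after <= completed
        if time_due or event_due:
            due.append(task)
    return due
```

For example, an `hourly_extract` task with a one-hour interval becomes due once an hour has passed. A `transform` task triggered after `hourly_extract` becomes due only once that extract is reported complete.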

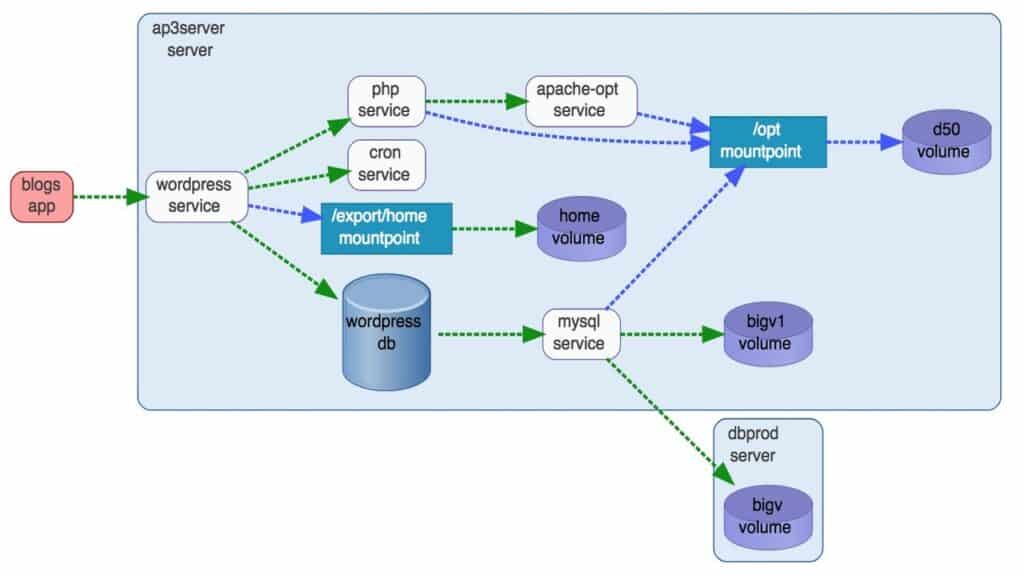

- Dependency Management: Dependency management ensures tasks are executed in an order that respects their interdependencies, including those defined in the workflow definition. In practice, each task in the network starts only after all of its upstream dependencies have completed successfully.

Example of a general dependency management map. Source: Pathway Systems
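The dependency rule can be sketched as a small sequential executor. It walks the DAG in topological order and marks a task as skipped when any upstream task did not succeed. This is a hypothetical, single-process illustration. Real orchestrators run independent branches in parallel and persist task state between runs.

```python
from graphlib import TopologicalSorter


def run_pipeline(dag, tasks):
    """Run tasks in dependency order; a task runs only if every upstream task succeeded.

    dag maps each task to its set of upstream tasks; tasks maps each task to a
    zero-argument callable. Returns task -> "success" | "failed" | "skipped".
    """
    status = {}
    # Topological order guarantees every upstream status is known before it is checked.
    for name in TopologicalSorter(dag).static_order():
        if any(status[up] != "success" for up in dag.get(name, ())):
            status[name] = "skipped"  # an upstream task failed or was itself skipped
            continue
        try:
            tasks[name]()
            status[name] = "success"
        except Exception:
            status[name] = "failed"
    return status
```

If task `b` fails in a graph `a -> b -> c` with a side branch `a -> d`, then `c` is skipped while `d` still runs, because `d` depends only on `a`.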

Resource Management: Orchestration also involves the allocation and optimization of computational resources. Each task the orchestrator generates needs CPU, memory, and I/O capacity assigned, and a compute engine designated to execute it. Efficient orchestration also leverages “warm” resources and “packs” tasks into workloads that minimize contention and waste.
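“Packing” tasks onto compute resources is a form of bin packing. The sketch below uses the classic first-fit decreasing heuristic. It assumes memory is the only constrained resource and that every worker has the same capacity. The function name and inputs are hypothetical, and real schedulers balance several resources at once.

```python
def pack_tasks(task_mem_gb, worker_mem_gb):
    """First-fit decreasing: place the largest tasks first, each on the first worker with room.

    task_mem_gb maps task name -> memory needed (GB). Returns a list of workers,
    each a list of task names. Raises ValueError if a task cannot fit on any worker.
    """
    workers, free = [], []  # free[i] is the remaining memory on workers[i]
    for name, mem in sorted(task_mem_gb.items(), key=lambda kv: kv[1], reverse=True):
        if mem > worker_mem_gb:
            raise ValueError(f"{name} needs {mem} GB; workers have {worker_mem_gb} GB")
        for i, room in enumerate(free):
            if mem <= room:
                workers[i].append(name)
                free[i] -= mem
                break
        else:
            # No existing worker has room, so provision a new one.
            workers.append([name])
            free.append(worker_mem_gb - mem)
    return workers
```

Five tasks needing 6, 5, 4, 3, and 2 GB fit on three 8 GB workers, which is the minimum possible for 20 GB of total demand.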

Error Handling and Recovery: Robust orchestration can handle task failures that arise from intermittent resources, unanticipated data structures, logic failures, and many other sources of error. Techniques for this include setting policies for retries, fallbacks, or alerts, and ensuring the system can recover from unexpected states.
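A retry, then fallback, then alert policy like the one described can be sketched as a wrapper around a task. The parameter names and defaults are illustrative assumptions. `sleep` and `alert` are injectable so the policy can be tested without waiting or paging anyone.

```python
import time


def run_with_policy(task, retries=3, base_delay=1.0, fallback=None, alert=print,
                    sleep=time.sleep):
    """Run task(); on failure retry with exponential backoff, then try fallback, then alert.

    Assumes retries >= 0. Returns the first successful result; otherwise calls
    alert() and re-raises the last error.
    """
    last_error = None
    for attempt in range(retries + 1):
        try:
            return task()
        except Exception as exc:
            last_error = exc
            if attempt < retries:
                sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ... with the defaults
    if fallback is not None:
        try:
            return fallback()
        except Exception as exc:
            last_error = exc
    alert(f"giving up after {retries + 1} attempts: {last_error!r}")
    raise last_error
```

A transient connection error that clears on the third attempt is absorbed after backoffs of 1s and 2s. A persistent schema error exhausts the retries, tries the fallback if one is given, and only then raises an alert.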

Monitoring and Logging: Throughout the orchestrated workloads, continuous monitoring and detailed logging of each task’s performance and outcome are essential for maintaining operational visibility. This aids in debugging, performance tuning, and compliance with audit requirements.
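Per-task monitoring can be as simple as wrapping each task in a context manager. The wrapper logs the outcome and appends a structured record that can later feed dashboards or audit reports. The sketch uses Python's standard `logging` module. The `task_span` name and record fields are hypothetical choices.

```python
import logging
import time
from contextlib import contextmanager

log = logging.getLogger("pipeline")


@contextmanager
def task_span(task_name, run_id, records):
    """Time a task, log its outcome, and append a structured record for auditing.

    Exceptions raised inside the block are recorded as "failed" and then re-raised.
    """
    start = time.perf_counter()
    log.info("task %s started (run %s)", task_name, run_id)
    status = "failed"
    try:
        yield
        status = "success"
    finally:
        elapsed = time.perf_counter() - start
        records.append({"task": task_name, "run_id": run_id,
                        "status": status, "seconds": round(elapsed, 3)})
        log.log(logging.INFO if status == "success" else logging.ERROR,
                "task %s %s in %.3fs", task_name, status, elapsed)
```

Each `with task_span("load_warehouse", "run-42", records):` block then leaves behind one record with the task's status and duration, whether it succeeded or raised.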

These components are the backbone of data pipeline orchestration, working together to optimize the data flow, and ensuring that large-scale data processing is efficient, reliable, and scalable.

What are the challenges?

While data pipeline orchestration offers numerous benefits, it comes with its own set of challenges that organizations must navigate. Understanding these challenges is key for data engineering professionals to effectively design, implement, and maintain orchestration systems. Here are some common hurdles:

Complexity of Integrations: Modern data pipelines often interact with a diverse set of sources and destinations, including cloud-based services, on-premises databases, and third-party APIs. Orchestrating these pipelines requires a solution that can handle a multitude of integration points, which often involves complex configuration and maintenance.

Ensuring Data Quality: The orchestration system must ensure the integrity and quality of data throughout the pipeline. This involves implementing checks and balances to detect anomalies, ensure consistency, and prevent data loss, which can be particularly challenging when dealing with high volumes of data and real-time processing requirements.
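The “checks and balances” mentioned above can be sketched as a validation step that an orchestrator runs between stages, halting downstream tasks when it reports problems. The function, column names, and thresholds are hypothetical. Real checks are usually declared per dataset and cover many more rules, such as ranges, freshness, and referential integrity.

```python
def check_batch(rows, required, key, expected_min_rows=1):
    """Return human-readable problems found in a batch of dict rows (empty list means pass).

    Checks row count, missing required fields, and duplicate values of the key column.
    """
    problems = []
    if len(rows) < expected_min_rows:
        problems.append(f"expected at least {expected_min_rows} rows, got {len(rows)}")
    seen = set()
    for i, row in enumerate(rows):
        missing = [c for c in required if row.get(c) is None]
        if missing:
            problems.append(f"row {i}: missing {', '.join(missing)}")
        k = row.get(key)
        if k in seen:
            problems.append(f"row {i}: duplicate {key}={k!r}")
        seen.add(k)
    return problems
```

For a batch in which one row lacks an `amount` and another repeats `id` 1, the check returns one problem for each. An orchestrator could treat any non-empty result as a failed task.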

Security and Compliance: As data pipelines process potentially sensitive information, orchestrating them in a way that complies with security policies and regulatory standards adds another layer of complexity. This includes ensuring data encryption, access controls, and audit trails are in place and functioning correctly.

Change Management: Data pipelines are not static and require updates to logic, transformations, and connections as business needs evolve. Executing these changes quickly, without disrupting service or compromising data integrity, can be challenging.

Skillset and Knowledge Requirements: Data pipeline orchestration requires a unique combination of skills, including data engineering, software development, and operations. Finding and cultivating talent that can navigate the technical and operational aspects of orchestration is an ongoing challenge.

Addressing these challenges requires a strategic approach that includes selecting the right tools, adopting data pipeline best practices, and continuously refining processes as technologies and requirements evolve.

Elevating Beyond Orchestration with Advanced Automation

As explained above, data pipelines generally adhere to predictable patterns, encompassing key components like workflow definition, task scheduling, dependency management, and error handling. This predictability opens the door to extensive automation.

As we push the boundaries of what is possible, we begin to see orchestration as a fundamental, yet initial phase in the journey toward full-scale data pipeline automation. Essentially, this means that the running and management of your data pipelines doesn’t require additional code or manual intervention. Sophisticated data automation platforms, like Ascend, eliminate the need for data teams to entangle themselves in the intricacies of conditional branching, hard-coded schedules, and manual reruns.

Ascend’s intelligent data pipelines are capable of ensuring data integrity from source to sink, autonomously propagating changes, and eliminating unnecessary reprocessing. They offer the flexibility to be paused, inspected, and resumed at any point, coupled with the ability to provide comprehensive operational visibility in real-time.

In Ascend’s ecosystem, data pipelines transform into dynamic, self-aware entities. They are capable of self-optimization and self-healing, adapting in real-time to the fluctuating demands and volumes of data. This level of intelligent automation signifies a paradigm shift. Here, data pipelines are not merely orchestrated; they are intelligently automated.