Data represents our present and our future, and therein lies a significant problem: the more data you’re dealing with, the more challenging it will be to scale your company in a sustainable and standardized way.

We’re increasingly coming to realize that the rigid, monolithic architectures we currently use can’t keep up with storing, organizing, accessing, and using ever-growing amounts of data. So, what’s the solution?

Enter the data mesh, a novel approach to data architecture that emphasizes decentralized data ownership, domain-driven data products, and self-service data infrastructure.

In this article, you’re going to learn the following:

- What a data mesh is

- Why it gained momentum

- The five core features of data mesh

- Why a company might consider building one

Let’s dive in!

What Is a Data Mesh?

Zhamak Dehghani first introduced the idea of the data mesh in 2019 as a better alternative to the monolithic data lake and its predecessor, the data warehouse.

Simply put, a data mesh is a platform architecture — a philosophy of sorts — that separates data into domains and defines the responsibilities of each. It provides a more distributed, decentralized, and resilient approach to data management.

The magic of a data mesh is that it combines a data product mindset with a distributed data modeling technique. The product mindset guides us to produce data products for customers and consumers. And the distributed architecture helps data engineering teams move faster. The data mesh becomes the key to building simpler, smaller, and shareable data sets in a scalable and secure environment.

Data Mesh Principles

In the conversation around data mesh, Zhamak Dehghani highlighted that there are four fundamental principles:

- Business Data Domains

- Data as a Product Mindset

- Self-Serve Data Platform

- Federated Computational Governance

1. Business Data Domains

A data mesh focuses on decentralization and distribution of responsibility to the individuals who are closest to the data. Thus, the data mesh follows the seams of organizational units. Business domains are good candidates for data ownership distribution because they support scalability and continuous change.

2. Data as a Product Mindset

Current data architectures make it hard to discover, trust, and use quality data, especially as the number of business domains increases. The data product concept addresses this challenge by treating data as a product and consumers as customers. As the data product owner, you are responsible for delivering quality data and for measuring how satisfied your users are with it. Reuse increases when data consumers get what they need.
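One way to make the product mindset concrete is to attach an owner and quality targets to the data itself. The following is a minimal sketch, not a standard: the `DataProduct` class, its fields, and the example values are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical descriptor for a domain-owned data product."""
    name: str
    domain: str       # business domain that owns the product
    owner: str        # person or team accountable for quality
    description: str
    # Quality targets the owner commits to, each a ratio in [0, 1].
    quality_targets: dict[str, float] = field(default_factory=dict)

    def unmet_targets(self, measured: dict[str, float]) -> list[str]:
        """Return the names of targets that the measured values fall short of."""
        return sorted(
            metric
            for metric, target in self.quality_targets.items()
            if measured.get(metric, 0.0) < target
        )


listening_history = DataProduct(
    name="listening-history",
    domain="user",
    owner="user-data-team",
    description="Per-user play, skip, and thumbs-up events.",
    quality_targets={"completeness": 0.99, "freshness": 0.95},
)

# Measured values would come from monitoring; these numbers are made up.
missed = listening_history.unmet_targets({"completeness": 0.995, "freshness": 0.90})
```

Here `missed` contains only `"freshness"`, which gives the owner a specific quality gap to act on.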

3. Self-Serve Data Platform

The self-serve data platform is a crucial component of a data mesh, allowing teams — domains — to autonomously own their data products by providing high-level abstractions of infrastructure that remove complexity and friction. As a critical element of the data mesh architecture, a self-serve data platform supports the agility, autonomy, and scale required to deliver your data products continuously.

Such platforms allow you, as a domain team, to create, maintain, and run your data products with minimal friction and low operational overhead. The result is faster development and deployment of data products and better support for data-driven decision-making across your organization. The platform also provides standardized interfaces and tools to ensure consistency across data products and domains.

4. Federated Computational Governance

A data mesh focuses on building and deploying different data products by independent business domains. However, for these products to interoperate and generate value, there must be a governance model promoting decentralization and domain self-sovereignty. This model is known as federated computational governance, which creates global rules to ensure a healthy and interoperable ecosystem.

Unlike traditional data governance, which emphasizes centralization and global canonical representation of data, federated computational governance embraces change and multiple interpretive contexts. It aims to balance centralization and decentralization, allowing you to localize decisions to each domain while making global decisions to create interoperability and a compounding network effect through the discovery and composition of data products.
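The "computational" part of this principle suggests that rules can be expressed as code and checked automatically. The sketch below assumes a very simple model in which a policy is a function over a product's metadata; the policy names and the metadata keys are hypothetical.

```python
from typing import Callable, Optional

# A policy inspects a data product's metadata and returns an error
# message, or None when the product complies.
Policy = Callable[[dict], Optional[str]]


def requires_owner(product: dict) -> Optional[str]:
    """Global rule: every data product names an accountable owner."""
    return None if product.get("owner") else "missing owner"


def requires_schema(product: dict) -> Optional[str]:
    """Global rule: every data product publishes a schema."""
    return None if product.get("schema") else "missing schema"


# Rules agreed on across the federation and applied to every domain.
GLOBAL_POLICIES: list[Policy] = [requires_owner, requires_schema]


def check(product: dict, domain_policies: list[Policy]) -> list[str]:
    """Run the global policies first, then the domain's own local policies."""
    results = (policy(product) for policy in GLOBAL_POLICIES + domain_policies)
    return [message for message in results if message is not None]


def ads_requires_campaign_id(product: dict) -> Optional[str]:
    """Local rule that only the (hypothetical) ads domain enforces."""
    return None if "campaign_id" in product.get("schema", {}) else "missing campaign_id"


ad_performance = {"name": "ad-performance", "schema": {"impressions": "int"}}
violations = check(ad_performance, [ads_requires_campaign_id])
```

The global list stays small and shared, while each domain passes in whatever local rules it has decided on for itself.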

Why Has the Data Mesh Gained Momentum?

Now that you know a little more about data mesh architecture, let’s talk about why it’s picking up momentum.

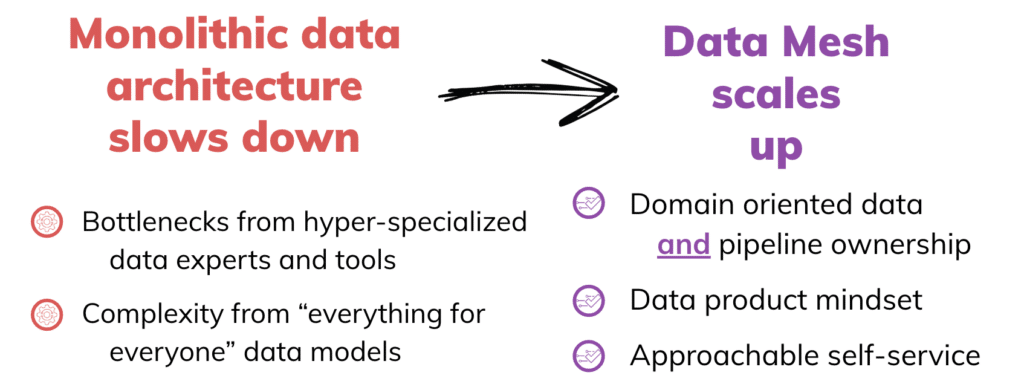

Most data platforms are monolithic. Data flows into centrally owned data lakes or platforms. The pipeline architecture is rigid and unforgiving, and it operates in an environment that highly specialized data engineers must manage.

Let’s run through a quick example. While working out, I love listening to music on Pandora (a streaming service). Think about all of the data that Pandora collects at any given moment. They have user-specific data — like the genres I gravitate toward, songs I give a “thumbs up” to, and songs I skip.

But that’s not even the tip of the iceberg.

Pandora is also collecting, storing, cleaning, and using data around the individual artists it features, ads it’s running, premium members, how long you listen to a specific song, and the list goes on and on.

A monolithic data platform stores all this data in one place. One group of engineers is responsible for all of it. Anytime anyone needs access to one bit of data, that data engineering team has to provide it. A marketing analyst may request access to advertising data to understand which ads offer a return on investment and which are a total flop. Meanwhile, the finance team might need data about premium members, which subscriptions are most popular, and which are most profitable.

Imagine how cumbersome it is to sift through this, especially as data grows!

Enormous (and growing) pools of data are the problem with monolithic platforms. Data ownership is domain-agnostic. You end up with a giant, centralized big data platform that is difficult to ingest, clean up, transform, and serve. This approach means that you will have one centralized team, with hyper-specialized tools and knowledge, that owns and manages all this data from multiple avenues, even though each avenue might be unique in its own right. These challenges only grow as you add more data to that platform.

How Is a Data Mesh Architecture the Solution?

Data meshes are a welcome evolution because they address a problem most of us have encountered: bottlenecks that keep us from getting work done. Monolithic data platforms can be incredibly slow, and the more they try to satisfy every use case for every type of business, the more complex they become. Furthermore, whereas IT artisans have historically managed data platforms in the cloud, a data mesh can scale up and out, take some of the burden off one core group of IT experts, and divide control among domain-specific groups of experts.

Because data is domain-oriented and pipeline ownership is clearly defined, a data mesh is a more approachable self-service tool, built with speed and quality in mind.

Let’s apply data mesh principles to our Pandora example: one domain stores user-specific data. Another stores programming data. Ad data lives in yet another domain. And so on. Much more manageable, right? To be clear, this doesn’t mean that data will become fragmented and siloed, which would work against you. Instead, a data mesh sits between a centralized data platform and an excessively fragmented one.

The Five Core Features of Data Mesh

Now, you know what a data mesh is, but what exactly makes a data mesh architecture? Here are the five most critical components:

1. Discoverability and Shareability

A data mesh makes data easy for users to discover and share, which means they can more effortlessly consume data products from different domains and combine them with other data products or even external data.

There are different ways you can make domain data products discoverable and shareable. A spreadsheet might be enough for smaller domains, while more complex domains will likely publish their metadata, owners, origins, sample datasets, and schema to a central repository or catalog.
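A central catalog of the kind described above can be sketched as a small in-memory registry. This is only an illustration: the `Catalog` and `CatalogEntry` classes and the sample entries are hypothetical, and a real catalog would persist its entries and hold richer metadata.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CatalogEntry:
    """Metadata a domain publishes so others can find its data product."""
    name: str
    domain: str
    owner: str
    schema: tuple[str, ...]  # column names


class Catalog:
    """In-memory stand-in for a central repository of data products."""

    def __init__(self) -> None:
        self._entries: dict[str, CatalogEntry] = {}

    def publish(self, entry: CatalogEntry) -> None:
        # Publishing the same name again replaces the earlier entry.
        self._entries[entry.name] = entry

    def search(self, keyword: str) -> list[str]:
        """Return names of products whose name, domain, or columns mention keyword."""
        kw = keyword.lower()
        return sorted(
            entry.name
            for entry in self._entries.values()
            if kw in entry.name.lower()
            or kw in entry.domain.lower()
            or any(kw in column.lower() for column in entry.schema)
        )


catalog = Catalog()
catalog.publish(CatalogEntry("listening-history", "user", "user-data-team",
                             ("user_id", "song_id", "played_at")))
catalog.publish(CatalogEntry("premium-subscriptions", "finance", "finance-team",
                             ("user_id", "plan", "monthly_price")))
catalog.publish(CatalogEntry("ad-performance", "ads", "ads-team",
                             ("campaign_id", "impressions", "clicks")))

joinable_on_user = catalog.search("user_id")
```

Searching by a column name such as `user_id` shows which products from different domains could be combined.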

2. Addressability

In data mesh architecture, consumers are able to use domain data from the same location each time — a standard known as addressability. It’s also beneficial to extend this concept to governance processes like versioning, where you publish changes to data domains as new versions with new addresses. That way, consumers can decide which version of the domain they want to consume.
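Versioned addresses can be illustrated with a small store that never overwrites a published version. The `mesh://domain/product/vN` address format below is an assumption made for this sketch, not an established scheme, and `VersionedStore` is a hypothetical name.

```python
def address(domain: str, product: str, version: int) -> str:
    """Build a stable address for one version of a data product."""
    return f"mesh://{domain}/{product}/v{version}"


class VersionedStore:
    """Keeps every published version available at its own address."""

    def __init__(self) -> None:
        self._data: dict[str, list[dict]] = {}
        self._latest: dict[tuple[str, str], int] = {}

    def publish(self, domain: str, product: str, rows: list[dict]) -> str:
        """Store rows as the next version and return the new address."""
        version = self._latest.get((domain, product), 0) + 1
        self._latest[(domain, product)] = version
        addr = address(domain, product, version)
        self._data[addr] = rows
        return addr

    def read(self, addr: str) -> list[dict]:
        """Return the rows published at this exact address."""
        return self._data[addr]


store = VersionedStore()
v1 = store.publish("ads", "ad-performance", [{"clicks": 10}])
v2 = store.publish("ads", "ad-performance", [{"clicks": 10, "impressions": 200}])

# A consumer pinned to v1 keeps reading the original shape of the data.
pinned_rows = store.read(v1)
```

Because the second publish gets a new address, consumers of `v1` are not affected until they choose to move to `v2`.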

3. Usability

In a data mesh, you publish your domain data in a form that is easy to digest and use. This makes the self-service tool easier to learn and navigate.

One approach is to publish more widely used aggregate data alongside record-level detail, as two separate data products. This is particularly useful if the aggregate rules are complex.
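As a rough sketch of that approach, the domain can compute the aggregate once and publish it next to the record-level data, so consumers don't each reimplement the rules. The event fields and the `plays_per_song` function are hypothetical, and the aggregation rule here is deliberately simple.

```python
from collections import defaultdict

# Record-level data product: one row per play event (made-up sample data).
play_events = [
    {"user_id": "u1", "song_id": "s1", "seconds_played": 180},
    {"user_id": "u2", "song_id": "s1", "seconds_played": 45},
    {"user_id": "u1", "song_id": "s2", "seconds_played": 200},
]


def plays_per_song(events: list[dict]) -> dict[str, dict[str, int]]:
    """Aggregate play events into per-song play counts and listening time."""
    totals: dict[str, dict[str, int]] = defaultdict(
        lambda: {"plays": 0, "seconds_played": 0}
    )
    for event in events:
        song = totals[event["song_id"]]
        song["plays"] += 1
        song["seconds_played"] += event["seconds_played"]
    return dict(totals)


# Aggregate data product, published separately from play_events.
song_summary = plays_per_song(play_events)
```

Consumers who only need totals read `song_summary`, while those who need detail still have `play_events`.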

4. Trustworthiness

Being able to control data to maintain its quality and consistency is an ongoing challenge for companies. While some resolve this by creating large governance structures to limit the scope of data products, this can slow things down considerably. A data mesh establishes trust with consumers because it prioritizes quality, usability, and documentation.

Building trust and reliability can happen in a number of ways. For one, domain data owners should be responsible for cleansing the data they receive at its point of creation. You should also have ways of confirming the accuracy of the data, which is much more feasible in a data mesh architecture.
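Cleansing at the point of creation might look like the following sketch, where the owning domain validates and normalizes each record before publishing it. The field names and validation rules are hypothetical examples, not a prescribed set.

```python
def clean_play_event(raw: dict) -> dict:
    """Validate and normalize one raw event, raising on bad input."""
    user_id = str(raw.get("user_id") or "").strip()
    if not user_id:
        raise ValueError("user_id is required")
    seconds = int(raw["seconds_played"])
    if seconds < 0:
        raise ValueError("seconds_played must be non-negative")
    return {
        "user_id": user_id,
        "song_id": str(raw["song_id"]).strip(),
        "seconds_played": seconds,
    }


def ingest(raw_events: list[dict]) -> tuple[list[dict], list[tuple[dict, str]]]:
    """Split raw events into cleaned records and rejects with a reason."""
    accepted, rejected = [], []
    for raw in raw_events:
        try:
            accepted.append(clean_play_event(raw))
        except (KeyError, TypeError, ValueError) as exc:
            rejected.append((raw, str(exc)))
    return accepted, rejected


raw_events = [
    {"user_id": " u1 ", "song_id": "s1", "seconds_played": "180"},
    {"user_id": "", "song_id": "s2", "seconds_played": 10},
    {"user_id": "u3", "song_id": "s3", "seconds_played": -5},
]
accepted, rejected = ingest(raw_events)
```

Keeping the rejects and their reasons, rather than silently dropping them, gives the domain owner a way to confirm accuracy and trace problems to their source.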

5. Security and Standardization

A data mesh architecture implements security measures in a consistent manner to avoid any gaps due to customization. It also uses standardization to improve the consumer experience across different data domains.

Standardization often stems from security. For example, providing role-based access control, along with masking and redaction, is critically important. Plus, standardization is good for the user experience because applying naming semantics, data type standards, and other general guidelines makes the self-service tool easier to access and use.
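Role-based access control combined with masking can be sketched as a single read function that every domain applies in the same way. The roles, columns, and mask value below are hypothetical, and a production system would rely on its platform's access controls rather than an in-process dictionary.

```python
# Hypothetical mapping from role to the columns that role may see unmasked.
ROLE_COLUMNS = {
    "finance_analyst": {"user_id", "plan", "monthly_price"},
    "marketing_analyst": {"plan"},
}

MASK = "***"


def read_with_policy(rows: list[dict], role: str) -> list[dict]:
    """Return rows with every column the role may not see replaced by MASK."""
    allowed = ROLE_COLUMNS.get(role)
    if allowed is None:
        raise PermissionError(f"role {role!r} has no access")
    return [
        {column: (value if column in allowed else MASK) for column, value in row.items()}
        for row in rows
    ]


subscriptions = [{"user_id": "u1", "plan": "premium", "monthly_price": 9.99}]
marketing_view = read_with_policy(subscriptions, "marketing_analyst")
```

The marketing analyst sees the plan but not the user or the price, while an unknown role is refused outright.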

Standardization becomes even more critical when we talk about needing the ability to collect, correlate, and analyze data from multiple domains.

Finally, standardization is essential for discoverability, one of the core features covered earlier. Because different domains might maintain their datasets in different ways and formats (like CSV files versus database records), standardization means that even across very diverse data, you still have a consistent way of maintaining it, making it easier for consumers to find and use.
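To illustrate, the sketch below brings a CSV export and a database-style record to the same column naming convention. Choosing snake_case as the shared standard is an assumption made for this example, as are the sample column names.

```python
import csv
import io
import re


def snake_case(name: str) -> str:
    """Convert names such as 'UserID' or 'Monthly Price' to snake_case."""
    name = re.sub(r"[\s\-]+", "_", name.strip())
    # Insert an underscore where a lowercase letter or digit meets an uppercase letter.
    name = re.sub(r"(?<=[a-z0-9])(?=[A-Z])", "_", name)
    return name.lower()


def standardize(row: dict) -> dict:
    """Rename every column in a row to the shared naming convention."""
    return {snake_case(key): value for key, value in row.items()}


# One domain exports CSV with human-readable headers...
csv_text = "UserID,Monthly Price\nu1,9.99\n"
csv_rows = [standardize(row) for row in csv.DictReader(io.StringIO(csv_text))]

# ...while another holds database records with camelCase keys.
db_rows = [standardize({"user_id": "u2", "monthlyPrice": "4.99"})]
```

After standardization, both sources expose `user_id` and `monthly_price`, so a consumer can find and combine them without knowing how each domain stores its data.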

Why Build a Data Mesh?

We’ve already touched on some of these, but let’s do a quick recap!

- The distributed architecture is more scalable, faster, and more secure than its monolithic counterpart.

- You can share the technology, with self-service data access and less complex data models. You accomplish this by establishing meaningfully bounded contexts.

- Data mesh allows you to align your architecture with your business goals.

- You clarify data ownership via specific business-oriented data domain owners.

- You can build from existing foundational data domains to reduce the time to availability for creating new data products.

Like most things in business (and life), transitioning to a data mesh architecture has its challenges:

- You must decide what domain owns what data. Politics and competing business priorities can make it difficult to decide what the domains should be and who should manage them.

- You need to put in place a single, central publish/subscribe mechanism (otherwise known as the mesh experience layer) where domains publish their data products for the rest of the organization to discover and reuse.

- You have to create an execution model that supports less capable domains. For example, if the programming team at Pandora does not have the technical systems or skills in place to curate and publish programming data, the entire organization suffers.

- If data is spread out among many systems and/or requires significant engineering work to make usable, publishing domain data from them can require significant technical skills and tools in the domain.

- Migrating data from the current environment or legacy systems (with potentially decades of technical debt) to a data mesh can be a huge hurdle. Your company must decide if the payoff is worth the investment it will have to make.
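The publish/subscribe mechanism mentioned in the challenges above can be sketched in a few lines. This is a toy, in-process version with a hypothetical `MeshExperienceLayer` class and made-up announcements; a real deployment would use a message broker or catalog service.

```python
from collections import defaultdict
from typing import Callable


class MeshExperienceLayer:
    """Toy publish/subscribe hub keyed by domain name."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, domain: str, callback: Callable[[dict], None]) -> None:
        """Register a callback for announcements from one domain."""
        self._subscribers[domain].append(callback)

    def publish(self, domain: str, announcement: dict) -> int:
        """Deliver an announcement and return how many subscribers received it."""
        callbacks = self._subscribers[domain]
        for callback in callbacks:
            callback(announcement)
        return len(callbacks)


layer = MeshExperienceLayer()
received: list[dict] = []

# The marketing team wants to hear about new programming data products.
layer.subscribe("programming", received.append)

delivered = layer.publish("programming", {"product": "artist-catalog", "version": 1})
undelivered = layer.publish("ads", {"product": "ad-performance", "version": 1})
```

Domains only need to know about the shared layer, not about each other, which is what keeps publishing and discovery decoupled.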

Conclusion: A Glimpse at Easing Data Mesh Implementation

Data mesh presents an evolution in data architecture, offering a solution to the scalability issues that have plagued monolithic data platforms. This domain-oriented approach decentralizes data ownership, emphasizing the importance of data products and providing self-service data infrastructure. The rise of data meshes signals a pivotal shift in the data management landscape, offering organizations a path towards more efficient and effective use of their ever-expanding datasets.

However, it is important to remember that a successful data mesh implementation is no small feat. It involves careful decision-making in terms of data ownership, technical skill allocation, and the potential for significant initial investments, especially when migrating from legacy systems.

But, armed with data pipeline automation and cross-cloud data pipelines, this transition becomes much less daunting. With these in place, organizations can harness the full potential of the data mesh, resulting in a more agile and data-driven enterprise.